
Jack Ganssle's Blog

This is Jack's outlet for thoughts about designing and programming embedded systems. It's a complement to my bi-weekly newsletter The Embedded Muse.

Contact me at jack@ganssle.com. I'm an old-timer engineer who still finds the field endlessly fascinating (bio).


Software Process Improvement for Firmware

January 21, 2019

Software Process Improvement (SPI) is the task of figuring out what a team does right, what needs to be improved, and then making specific suggestions to help the group generate better code, hopefully on a shorter schedule.

My interest is exclusively embedded systems, so my SPI work has been on firmware and finding ways to help firmware teams improve. The issues faced by embedded engineers are different from those faced by people writing spreadsheets or developing web pages, because the work we do is always tied to hardware.

It's fun and gratifying to help teams out. It can be frustrating as well. The first thing I almost always find is that there are few metrics collected. The team has no quantitative sense about how they're doing. Some tell me things are peachy and they're world-class; others complain of utter chaos. Sometimes two different members of the same team will give me both answers! But it's extremely rare that anyone can give hard numbers.

Engineering without numbers is not engineering - it's art.

Without numbers, there's no way to compare a team's work to industry benchmarks. Is the team better than most? Average? Or sub-par?

I advise all developers, regardless of how well they think they're doing, to collect metrics. Do this religiously, all the time. Engaged in SPI? Collect numbers. Not doing SPI? Collect numbers. Capers Jones and others publish plenty of comparative data.
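As a minimal sketch of the kind of number worth tracking, the Python below computes defect density (defects per thousand lines of code, or KLOC) for each release. It is a host-side script, not firmware. The `ReleaseMetrics` record, its field names, and the release figures are hypothetical illustrations, not benchmark data; which defects to count and how to count lines are choices each team must define and then apply consistently.

```python
from dataclasses import dataclass


@dataclass
class ReleaseMetrics:
    """Hypothetical per-release record; field names are illustrative."""
    name: str
    lines_of_code: int   # source lines, counted the same way every release
    defects_found: int   # defects logged against this release


def defects_per_kloc(m: ReleaseMetrics) -> float:
    """Defect density: defects per thousand lines of code."""
    if m.lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return m.defects_found / (m.lines_of_code / 1000.0)


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    history = [
        ReleaseMetrics("v1.0", 40_000, 120),
        ReleaseMetrics("v1.1", 44_000, 88),
    ]
    for r in history:
        print(f"{r.name}: {defects_per_kloc(r):.1f} defects/KLOC")
```

With the made-up numbers shown, the script reports 3.0 defects/KLOC for v1.0 and 2.0 for v1.1. The value lies in the trend across releases and in comparison with published industry data, not in any single figure.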

Long ago I took a series of classes on selling. As an engineer I found that pretty dreary! But one thing stuck: find a prospective customer's pain point and address that. Today that resonates, as almost every company that approaches me for help does so because of some pain it has. The two most common pain points: bugs that surface in the field and create (generally expensive) havoc, or a consistent inability to come close to meeting schedules.

Neither is easy to fix.

The bug problem is the more tractable of the two. There's a line of thinking that we should not call these "bugs"; rather, some prefer "errors." After all, the majority of problems are indeed mistakes that we have allowed to slip into the code, mistakes that could have been avoided in the first place.

One of the biggest sources of errors/bugs is the poor elicitation of requirements. Two companies I worked with last year operated under regulatory requirements due to the safety-critical nature of their code. They had to conform to various IEC standards. Both did produce voluminous quality reports that showed such compliance. But both generated these ex post facto, long after their products shipped, which defied the intent of the standards. I view this as writing all the code, making it work (more or less), and then commenting it.

Requirements are hard to discern. Sometimes really hard. But that's no excuse to shortchange the process. The fact that they are hard means more effort needs to be expended. We'll rarely get them 100% correct, but careful engineering is our job, and part of that is getting the requirements mostly correct.

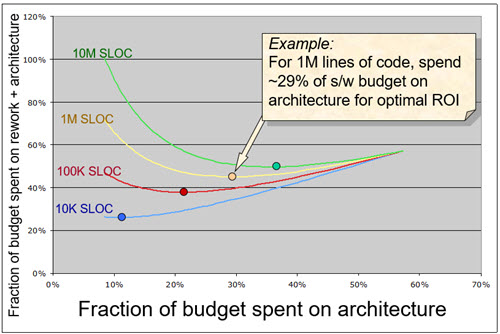

Then there's design. While many in this industry have deprecated design, we need it now more than ever. And we have a pretty good idea about this. NASA has shown:

So, when it comes to bugs/errors, a big chunk of those come from pre-coding work, or rather, a lack of work. Almost always the SPI effort uncovers a significant shortfall in these areas.

Then there's the implementation phase: writing and testing the firmware. It seems the software zeitgeist today is to write a lot of code, fast. But did you know the average team spends 50% of the schedule debugging? I guess the other half of the schedule should be called "bugging."

Better: slow down and write great code. Spend much less time debugging it. I could go on at great length here but won't. However, if there's anything we've learned in the last half-century of the quality movement it's that quality must be designed in. You can't bolt it on. A focus on fixing bugs will never lead to great code. Get it right first. Then fix the (few) inevitable bugs.

The agile community focuses on test, and that focus is admirable. I wish more teams could be so relentless about test. But we know that testing is just not adequate; study after study shows that unless one is using coverage, test will exercise only about half the code. Test, yes! But teams should think in terms of many filters, each filter finding some proportion of the bugs. The first filter is having each developer review his/her own code pre-compile, looking for problems. The compiler's syntax checker is another filter. Then there's Lint. Static analyzers. And so much more. Jones' work lists over 60 such filters. It would be insane to use them all, but it's sort of daft not to pick an appropriate (considerably smaller) subset.
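The many-filters idea can be put in numbers. Assuming, as a simplification, that each filter independently catches a fixed fraction of the defects still present when it runs, the fraction that survives the whole chain is the product of (1 - e) over all filters, where e is each filter's removal efficiency. The Python sketch below computes that; the filter names and efficiencies are hypothetical, not figures from Jones or any other study.

```python
from math import prod


def cumulative_removal_efficiency(efficiencies: list[float]) -> float:
    """Fraction of the original defects removed by a chain of filters.

    Simplifying assumption: each filter independently removes a fixed
    fraction of the defects still present when it runs.
    """
    for e in efficiencies:
        if not 0.0 <= e <= 1.0:
            raise ValueError("each efficiency must be in [0, 1]")
    surviving = prod(1.0 - e for e in efficiencies)  # fraction left after all filters
    return 1.0 - surviving


if __name__ == "__main__":
    # Hypothetical per-filter efficiencies, not measured values.
    filters = {
        "desk review": 0.30,
        "compiler warnings": 0.10,
        "static analysis": 0.35,
        "testing": 0.50,
    }
    total = cumulative_removal_efficiency(list(filters.values()))
    print(f"{total:.1%} of injected defects removed")
```

With these made-up values the four filters together remove about 79.5% of defects, even though none of them alone catches more than half. That is the argument for stacking several modest filters rather than relying on test alone.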

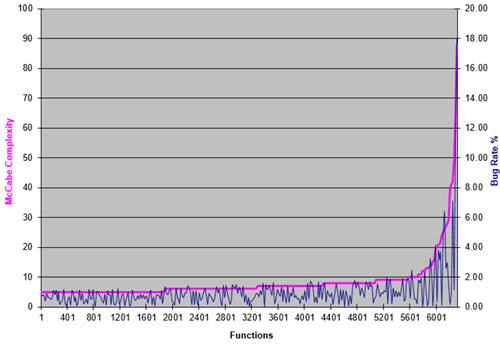

And there are metrics! An example is complexity. The following is from a client's product which comprised about 6000 functions.

It's clear that complex functions were much buggier than simpler ones. We manage what we measure, and this is one of several quantitative assessments that are important.
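Cyclomatic complexity, McCabe's measure, is roughly one plus the number of decision points in a function. The Python sketch below approximates it by counting decision keywords and operators in C source text, then flags functions above a limit. It is a crude, hypothetical helper: it assumes comments and string literals have already been stripped, and it does not actually parse C, so a real complexity tool should be preferred for actual measurements. The limit of 10 is a common rule of thumb.

```python
import re

# Rough McCabe-style estimate: 1 + number of decision points.
# Assumption: comments and string literals were removed beforehand.
_DECISION_KEYWORDS = re.compile(r"\b(?:if|for|while|case)\b")
_DECISION_OPERATORS = re.compile(r"&&|\|\||\?")


def estimate_complexity(c_function_body: str) -> int:
    """Approximate cyclomatic complexity of one C function body."""
    keywords = len(_DECISION_KEYWORDS.findall(c_function_body))
    operators = len(_DECISION_OPERATORS.findall(c_function_body))
    return 1 + keywords + operators


def flag_complex(functions: dict[str, str], limit: int = 10) -> list[str]:
    """Names of functions whose estimated complexity exceeds `limit`."""
    return sorted(name for name, body in functions.items()
                  if estimate_complexity(body) > limit)


if __name__ == "__main__":
    sample = """
        if (x > 0 && y > 0) { z = 1; }
        else if (x < 0) { z = -1; }
        for (i = 0; i < n; i++) { sum += a[i]; }
    """
    print(estimate_complexity(sample))
```

The sample body has three decision keywords (`if`, `else if`, `for`) and one `&&`, so the estimate is 5. Running such a check over every function, and tracking the results alongside defect counts, is one way to produce the kind of complexity data described above.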

Earlier I mentioned that the second big issue is an inability to meet schedules. I'll cover this in another post.

To end this post, two pieces of advice: Collect metrics. And study software engineering, constantly. There's a lot we know as a profession. Alas, that knowledge often doesn't get applied in actually doing the work.

Feel free to email me with comments.
