|

||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||

| Contents | ||||||

| Editor's Notes | ||||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar: here, here and here. Jack's latest blog: Why Did You Become an Engineer? |

||||||

| Quotes and Thoughts | ||||||

"What makes an expert isn't so much what they know, It's that they've done similar things so many times wrong They know what not to do." - Wayne Mitzen |

||||||

| Tools and Tips | ||||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Mohd Lee responded to comments in Muses 355, 356 and 357 about asynchronous sampling, where the discussion focused on the problem of multiple reads of an input whose value is changing. A common problem is reading a 16-bit timer with an 8-bit MCU. Mohd wrote:

Thor wrote:

|
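The 16-bit timer problem mentioned above is often handled by reading the high byte twice and retrying if it changed. The following is a minimal sketch of that general technique, not the approach described by either correspondent. `Timer16` is a hypothetical stand-in for the hardware: it advances the count on every register read to mimic a timer that keeps running between bus accesses.

```python
class Timer16:
    """Hypothetical free-running 16-bit timer seen through two 8-bit registers.

    The count advances by `step` after every register read, imitating a
    timer that keeps counting between the two bus accesses.
    """

    def __init__(self, start, step=1):
        self.count = start & 0xFFFF
        self.step = step

    def _tick(self):
        self.count = (self.count + self.step) & 0xFFFF

    def read_high(self):
        value = self.count >> 8
        self._tick()
        return value

    def read_low(self):
        value = self.count & 0xFF
        self._tick()
        return value


def read_naive(timer):
    """Two independent 8-bit reads: tears if the low byte rolls over between them."""
    high = timer.read_high()
    low = timer.read_low()
    return (high << 8) | low


def read_consistent(timer):
    """Read high, low, then high again; retry until both high reads agree."""
    while True:
        high_before = timer.read_high()
        low = timer.read_low()
        high_after = timer.read_high()
        if high_before == high_after:
            return (high_before << 8) | low


torn = read_naive(Timer16(0x01FF))        # high byte read before the rollover, low byte after
good = read_consistent(Timer16(0x01FF))   # retries once, then returns a coherent value
```

Starting at 0x01FF, the naive read pairs the old high byte with the new low byte and is off by 255 counts. The retry loop assumes the low byte rolls over far less often than the three reads take, which holds for most timer clock rates but should be checked on real hardware.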

||||||

| Freebies and Discounts | ||||||

Courtesy of the fine folks at Keysight, this month's giveaway is one of their brand-new DSOX1204G 200 MHz, 4-channel bench scopes:

Enter via this link. Also, at the end of the scope's review (next article) there are links for a contest Keysight is running, where they will be giving away a number of these and lots of other test equipment. |

||||||

| Review: Keysight's New DSOX1204G Scope | ||||||

Keysight has a couple of new additions to their DSOX1000 series of low-cost oscilloscopes. The new models bring four channels to bear with up to a 200 MHz bandwidth. That doubles both specs compared to their earlier units in this line. The company kindly sent two of their DSOX1204G units for evaluation and as contest offerings. One is the contest offering this month and the other next month. See the picture above. First, the specs:

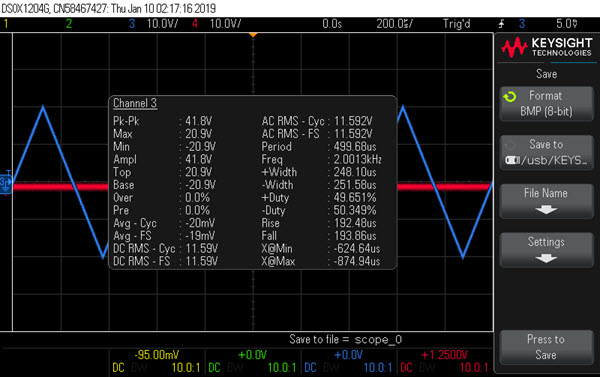

The base price for the 4-channel version at 70 MHz is $998, or $1204 with the waveform generator. The 200 MHz version I tested had all the options and goes for $2214.

First, the screen: It's crisp, easy to read, and there's no waveform jitter like you see on some of the Chinese units (the unit does sport a "made in China" sticker). The writing is small (as is the case on pretty much all scopes today) but very clear. I do have to squint to read the time base units: is it µs or ns? Some of that is probably attributable to soon-to-be-fixed cataracts.

If you've used one of Keysight's InfiniiVision scopes the user interface will be very familiar. There are two ways to implement a four-channel scope: have four separate sets of vertical controls or use one set, with buttons to select which channel the controls affect. Keysight chose the latter. An LED changes color to indicate the selected channel, and that color scheme is unified throughout the scope, including the color of the trace on the screen.

The unit is small and very portable at 314 mm x 165 mm x 130 mm and 3.2 kg.

Today it's hard to differentiate scopes as so many have similar features. Pretty much all do a decent job of sucking in and displaying signals, so I'll point out the DSOX1204G's differentiating features.

First, a "Quick Action" button can be configured to save to a USB stick, clear the display, and a few other things. For me, the only value I see is to set this to "Measure All", which pops up an ocean of measurements:

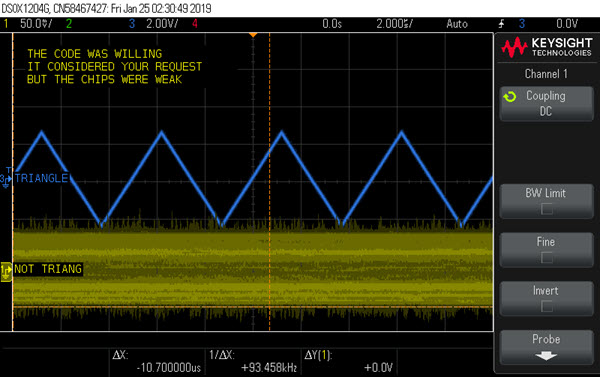

The "Display" button sets the usual things like persistence and the grid. But it also allows one to attach labels to waveforms and put annotations on the screen. I like this a lot as it makes documenting a screen shot much easier. Remember scope cameras, huge, bulky Polaroid beasts that were positioned over the screen? We'd use a sharp pointer to scratch labels on the resulting picture.

The user manual leaves a lot to be desired. For instance, it's pretty much silent on what a "pattern" trigger is. All of the controls do have excellent context-sensitive help, and pressing the "Trigger Type Pattern" soft switch was illuminating. A pattern trigger is the logical AND of a set of the input channels. Legal values for each channel are 1, 0, X (don't care), and a rising or falling edge.

This is not a mixed-signal scope: there are no digital inputs. However, an interesting and surprising feature is the ability to group two or more of the channels into an "analog bus." The scope decodes the channels into ones and zeroes. In the following example, channel 4 is the MSB and 3 is the LSB; the white bus at the bottom shows the digital values. Here I'm using analog waveforms, which is silly but fun. More likely you'd be scoping logic levels:

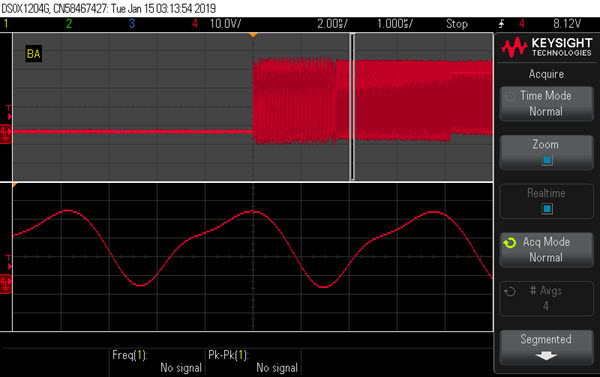
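The analog-bus idea can be sketched in a few lines: compare each channel's voltage to a logic threshold and pack the resulting bits, MSB first. This is only an illustration of the concept, not Keysight's implementation. The function name, the 1.65 V threshold and the sample voltages are all assumptions, and a real instrument may add hysteresis or per-channel thresholds.

```python
def decode_analog_bus(channels, threshold_volts=1.65):
    """Convert simultaneous voltage samples into bus values.

    `channels` is ordered MSB first; each entry is a list of voltages taken
    at the same sample instants. The threshold is an assumed logic level.
    """
    bus_values = []
    for voltages in zip(*channels):
        value = 0
        for volts in voltages:
            # Shift in one bit per channel, most significant channel first.
            value = (value << 1) | (1 if volts > threshold_volts else 0)
        bus_values.append(value)
    return bus_values


# Made-up samples: channel 4 is the MSB and channel 3 the LSB, as in the review.
ch4 = [0.1, 0.2, 3.1, 3.2]
ch3 = [0.0, 3.3, 0.1, 3.0]
bus = decode_analog_bus([ch4, ch3])   # counts 0, 1, 2, 3
```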

Very cool, and a sweet way to get logic analyzer-like capability. Like pretty much all digital scopes, the DSOX1204G has a zoom mode that is often under-appreciated:

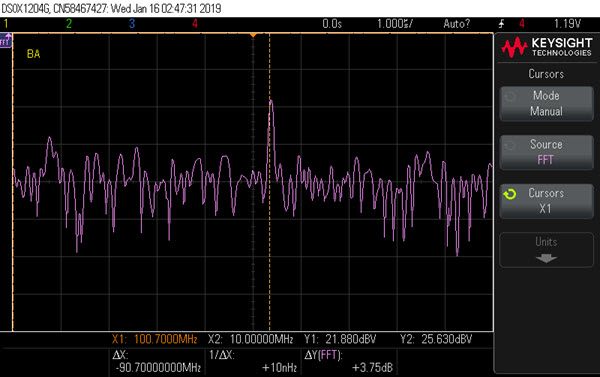

The top trace was acquired at 1 µs/div. Something weird is going on but it's hard to see what. The bottom trace is zoomed to 2 ns/div and shows more detail.

The sample rate is 2 GSa/s - nominally. With a slow time base that will decrease, of course. With more than one channel enabled that falls to 1 GSa/s. With all four on, the rate doesn't drop further. While the sample rate is displayed on the right side of the screen, it's often covered up by other menus. Pressing the "Back" button one or two times will reveal it, but this parameter is so important I wish it were never hidden.

The scope supports segmented memory, a very powerful and useful feature. I described this in some detail in Muse 315 so will gloss over it here, but this feature allows one to split the memory buffer into up to 50 chunks; each trigger event fills one chunk. That's useful if you want to capture multiple instances of something that happens slowly compared to the horizontal rate. I don't use that feature often, but sometimes it's a lifesaver, although, in practice, it's pretty unusual for a scope to save a life.

Like all digital scopes the DSOX1204G has a number of math operations: add, subtract, multiply, divide, FFT and, nicely, a low-pass filter. The filter's bandwidth is variable from 1 Hz to 200 MHz. While each channel does have the usual bandwidth limiter, this feature lets you fine-tune the displayed signal. I haven't seen this in inexpensive scopes before. Suggestion to Keysight: add a mode where the signal, after going through the low-pass filter, comes out the waveform generator's BNC - that would be a nifty tool for the lab.

It also has the mask testing feature that I described in Muse 358.

A waveform generator is standard in the 1204G model. Rise time for square waves and pulses is spec'd at 18 ns, which is what I measured. That's fairly typical for low-cost generators but does mean that a 10 MHz square wave is rather siney-looking.
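A rough calculation shows why an 18 ns rise time makes a 10 MHz square wave look like a sine. The sketch below uses the common rule of thumb that bandwidth is about 0.35 divided by the 10-90% rise time, which strictly applies to a single-pole response. Treating the generator that way is an assumption, as are the function names.

```python
def bandwidth_from_rise_time(rise_time_s):
    """Approximate -3 dB bandwidth from 10-90% rise time (single-pole rule of thumb)."""
    return 0.35 / rise_time_s


def square_wave_harmonics(fundamental_hz, count=3):
    """An ideal square wave has only odd harmonics, at relative amplitude 1/n."""
    return [(n * fundamental_hz, 1.0 / n) for n in range(1, 2 * count, 2)]


bandwidth_hz = bandwidth_from_rise_time(18e-9)        # a bit under 20 MHz
harmonics = square_wave_harmonics(10e6)               # 10, 30 and 50 MHz components
in_band = [freq for freq, _ in harmonics if freq <= bandwidth_hz]
```

Under these assumptions only the 10 MHz fundamental falls inside the generator's effective bandwidth. The 30 MHz and 50 MHz harmonics that square up the edges are attenuated, leaving something close to a sine wave.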
Usually a scope's FFT (Fast Fourier Transform) selection is buried in a menu. On the DSOX1204G it gets its own button. One fun thing to do with an FFT is to survey the radio spectrum. I ran a meter of wire as an antenna into one of the scope's channels. The following picture shows the FFT with a center frequency of 100 MHz with a 20 MHz span, mostly covering the commercial FM band. Sure enough, the cursor is on a peak at 100.70 MHz, the carrier frequency of the strongest Baltimore station we get out here in beautiful downtown Finksburg:

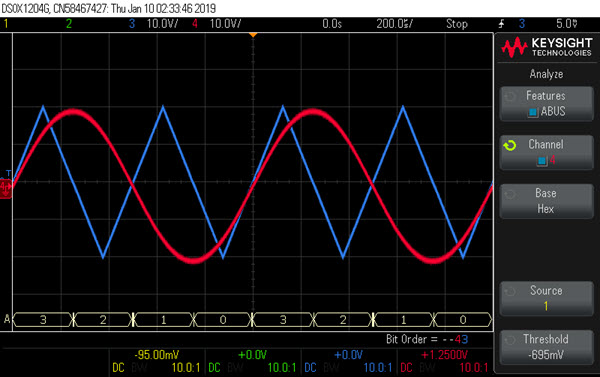

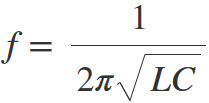

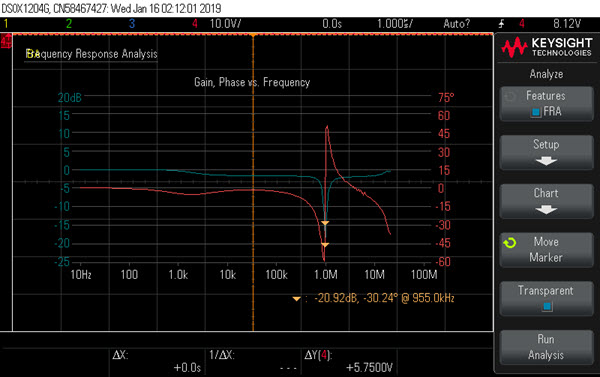

One feature that really stands out is the scope's "frequency response analysis" (FRA). Having an FFT is somewhat akin to getting a spectrum analyzer (SA) for free. With the FRA the unit is like an SA with a tracking generator. The waveform generator's sine wave is swept in frequency between two values while monitoring the input and output of an AC network. A Bode plot of amplitude and phase shift is then displayed.

A little electronics is in order: Feed AC through a capacitor or inductor and you'll find the waveform phase-shifted and attenuated. The amount of these distortions is highly frequency dependent. Wire an inductor and capacitor in series or parallel and at some frequency the network becomes resonant. If in parallel the LC's combined reactance (AC resistance) goes to a high value. That frequency is given by:

$$f = \frac{1}{2\pi\sqrt{LC}}$$

I wired a 75 µH inductor in parallel with a 470 pF capacitor and had the scope create a Bode plot of the network:

The blue trace is the amplitude of the signal through the network; the red is the phase shift. Orange numbers under the graph show that, at the marker, the signal is 21 dB down from nominal, with a 30-degree phase shift, and the resonance frequency is 955 kHz. Moving the marker just one pixel to the right changed the frequency to 1 MHz; my spectrum analyzer showed a minimum at 978 kHz. Now, these numbers are off from the computed 847.7 kHz resonance frequency, but the inductor is rated ±10% and the cap ±20%.

In English: the series LC circuit blocks the signal at all frequencies except near resonance. A parallel LC would show an opposite effect.

The tracking generator on an SA sweeps continuously, smoothly. I watched the Keysight's waveform with another scope and found it changes frequency in discrete quanta. A control selects how many of these points should be used. Start and end frequencies are also selectable in decade ranges. In the image above there are 50 points per decade (the max allowed), with frequencies from 10 Hz to 20 MHz (the min and max, respectively).

Why must one of the channels monitor the waveform into the network? After all, the scope knows what frequency it's generating. The reason is one could probe various stages of a multiple-pole filter and the input to a stage of interest could be phase shifted from the scope's waveform generator.

The waveform generator's max is 20 MHz, which means no Bode plots for higher frequencies. That constraint is understandable... but it sure would be cool to be able to profile wider. Regardless, if you're working on LF or HF gear this is a killer feature.

The scope can connect to a network, of course, and it has its own web server which apparently lets you control everything; I didn't try this.

I consider a 100 MHz scope about the minimum for embedded work and two channels barely adequate. The DSOX1204G with a 200 MHz bandwidth and four channels will fulfill most developers' needs.
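The resonance figures quoted in the Bode plot discussion can be checked with a few lines of arithmetic. This sketch assumes the standard LC resonance formula and treats the stated ±10% and ±20% ratings as worst-case limits. The function names are illustrative only.

```python
import math


def resonant_frequency_hz(inductance_h, capacitance_f):
    """Resonant frequency of an ideal LC network: 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def resonance_range_hz(inductance_h, capacitance_f, l_tol, c_tol):
    """Worst-case span: both parts high gives the lowest frequency, both low the highest."""
    lowest = resonant_frequency_hz(inductance_h * (1 + l_tol), capacitance_f * (1 + c_tol))
    highest = resonant_frequency_hz(inductance_h * (1 - l_tol), capacitance_f * (1 - c_tol))
    return lowest, highest


nominal_hz = resonant_frequency_hz(75e-6, 470e-12)                 # about 847.7 kHz
low_hz, high_hz = resonance_range_hz(75e-6, 470e-12, 0.10, 0.20)   # roughly 738 kHz to 999 kHz
```

With those tolerances the resonance could land anywhere from roughly 738 kHz to 999 kHz, so both the 955 kHz marker reading and the 978 kHz spectrum analyzer reading are plausible for these parts.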
If you don't have a mixed-signal scope the analog bus is very useful in digital work, and if you're working with sub-20 MHz RF, the FRA is very valuable. All in all, this is an extremely nice scope at an attractive price point. | ||||||

| More on Artificial Intelligence | ||||||

Last issue's thoughts about AI generated gobs of comments from readers. I was surprised by how many are skeptical about the future of AI as it is currently envisioned.

It's true that there has been a lot of hype and a lot of hope about making machines "intelligent" for a very long time. In 1970 when looking at colleges my dad took me to his alma mater, MIT, where we visited Marvin Minsky's AI lab. Minsky was working on "strong AI," which mostly bombed, but I still have a bit of lust in my heart for his PDP-12. (I didn't apply to MIT: everyone I saw was studying, not a smile was to be found.)

Today goals are more modest and successes more common. My son works in data science and uses AI very successfully to make sense of enormous data sets. Though the field dates to the 1950s, now there's - almost suddenly - much more capability being deployed, like huge clusters of GPUs in data centers. Contrast that to a single 12-bit PDP-12 in 1970.

I'm very enthusiastic about the coming capabilities, but temper that with concerns about AI drawing incorrect conclusions about something truly important, and the inevitable political/legislative issues that will arise. The European Union's new General Data Protection Regulation already seems to address how misuse of automated reasoning may lead to big fines. Big, meaning possibly billions of dollars.

One of the issues I see with AI as it is today is the difficulty of understanding why an AI device makes a decision. John Lagerquist sent a link to an article about work being done to gain visibility into how an AI network generates conclusions.

Jakob Engblom sent this article which examines where AI is/should be going. It's a bit wordy but thought-provoking. An interesting point the author makes is that today AI is mostly being used in areas where mistakes are not a big deal. That will surely change.

Luca Matteini wrote:

Michael Covington contributed:

Stjepan Henc works on automotive systems:

|

||||||

| More on Debouncing | ||||||

| Several people responded to the last Muse's article on debouncing.

Ray Keefe shared a debouncing story:

This is Ashleigh Quick's approach:

I emailed Ashleigh and asked where the 32 and 64 ms numbers came from:

|

||||||

| Jobs! | ||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||

| Joke For The Week | ||||||

Note: These jokes are archived here. A haiku:

|

||||||

| Advertise With Us | ||||||

Advertise in The Embedded Muse! Over 28,000 embedded developers get this twice-monthly publication. |

||||||

| About The Embedded Muse | ||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |