|

||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||


| Editor's Notes | ||||||

|

A lot of prospective entrepreneurs contact me for advice. I recommend they read the first half of The E-Myth by Michael Gerber. Gerber's contention, which I've observed countless times, is that too many owners of small businesses are completely focused on working in the business - that is, doing the consulting, making the products, and generally engaging in the main business activity. Instead, one should (at least some of the time) focus on working on the business. That might include finding ways to increase productivity. Reduce taxes owed. Deliver faster. But unless one is actively making the business better, decay and entropy will prevail.

This is what my Better Firmware Faster seminar is about. Take a day with your team to learn to be more efficient and deliver measurably-better code. It's fast-paced, fun, and uniquely covers the issues faced by embedded developers. Information here shows how your team can benefit by having this seminar presented at your facility.

Latest blog entry - The Cost of Firmware - A Scary Story! |

||||||

| Quotes and Thoughts | ||||||

"In theory, there is no difference between theory and practice; In practice, there is." - Chuck Reid |

||||||

| Tools and Tips | ||||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

In the last issue I mentioned hiccup mode in a power management IC. A number of readers wrote about this, and it's not a new thing. Ned Freed had some references:

Readers contributed ideas about fixed-point math last issue, and John Lagerquist had some thoughts:

Daniel Wisehart had some thoughts about metastability in Muses 356 and 357:

Peter Hermann has created two amazing sets of resources/links about Ada. |

||||||

| Freebies and Discounts | ||||||

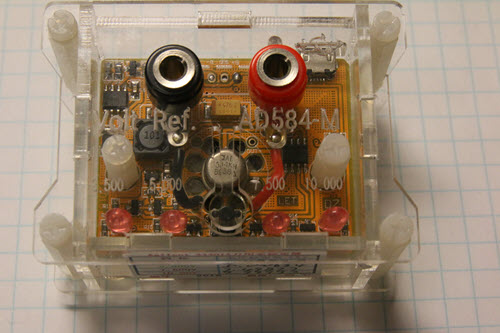

Steve Sibert won the Owon oscilloscope in last month's contest. Five years ago I reviewed Voltagestandard's DMMCheck, a nifty and very accurate voltage and resistance standard for checking the accuracy of a DMM. Alas, this product is no longer available (though they have other, better, options). However, I found a Chinese version on eBay, which I'll review in the November 19th Muse. It's also this month's giveaway.

Enter via this link. |

||||||

| On Cheap Scope Probes | ||||||

In the last Muse I reviewed Owon's nifty $106 VDS1022I USB oscilloscope. It comes with a pair of probes. I could swear I've seen these probes before; it turns out they are available from a large number of sources, all with the same P2060 part number. Prices are $20 for a pair on Amazon, or half that in quantities of three sets or more from other sources. They appear to be manufactured by a number of Chinese companies. I imagine scope companies buy these in enormous quantities for practically nothing.

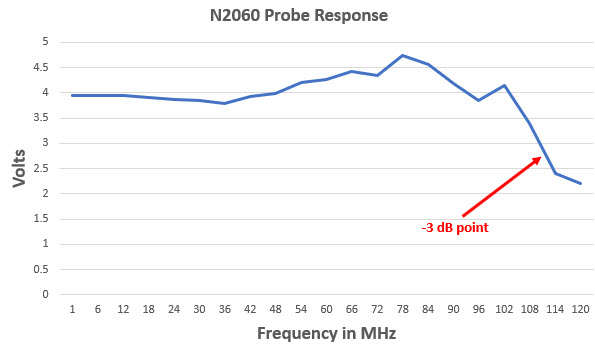

Since these probes seem to be so ubiquitous, I decided to probe them a bit.

The ground leads sport alligator clips that a strong alligator might be able to open. Sliding the plastic cover off makes it clear that the metal clips themselves don't need much force to actuate, but the plastic is so hard you'll be able to give up that gym membership.

As is common, the tip can be removed to expose a sharp pin. It takes a lot of force to pop the tip off. The tip's hook is a stamped metal affair rather than a curl of stiff wire as on more expensive models. I really don't care for this as the metal is somewhat bigger than a wire, so on a crowded board it can interfere with other nodes. Most of the inexpensive Chinese probes I've used have the same construction, though.

I used an Agilent/Keysight N2890A 500 MHz probe as a baseline. An HP 8640B RF generator created sine waves sent through each probe to an Agilent/Keysight MSO-X-3054A 500 MHz oscilloscope. At each frequency I adjusted the RF generator's gain so the signal presented to the scope over the 500 MHz probe was 4.00 volts. To avoid single-point errors I used the scope's statistics measurements to average values over at least 10,000 samples.

The chart shows the probe's frequency response. I was surprised that this 60 MHz-rated thing's -3 dB point was about 110 MHz. That's pretty impressive.

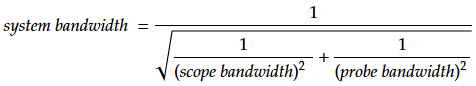

Since 100 MHz versions are available, my guess is that all of these probes are the same, with different labels for each model.

To ensure the odd peak at about 78 MHz wasn't due to a resonance with the 500 MHz reference probe, I removed that and there was no change in amplitude from the P2060.

Tip capacitance at 10:1 is rated at 13-17 pF. Let's assume worst case (17 pF). Capacitive reactance is:

$$X_C = \frac{1}{2\pi f C}$$

so at 60 MHz the capacitive reactance of the probe is 156 ohms. What does that mean in practice? If you're measuring a 3 volt signal at 60 MHz, the probe alone will require (3 volts)/(156 ohms), or 19 mA from the probed node. Can that node provide 19 mA? In many cases the answer is "nope." A 74AUC08 AND gate can source only 9 mA, and that's when operating at its maximum power supply voltage. It gets worse at lower voltages. The result: In some cases probing a node will cause the system to crash. For more on these issues see this.

(There are a lot of X1 probes without an X10 option floating around. Digi-Key lists 11 models, with the best having an awful 39 pF tip capacitance. And, strangely, that's an $89 probe. If there are any X1 models in your lab, gather them up and donate them to Goodwill.)

Oddly, the probe's bandwidth is rated 60 MHz regardless of 1:1 or 10:1 settings. I found that the 3 dB point in 1:1 was at 13 MHz, though this was a positive 3 dB.

Rise time is rated at 5.8 ns, but I measured it at 2.2 ns. Not bad for a 60 MHz probe! But measuring rise time with a scope can be problematic as you must factor in the scope's characteristics. According to the irreplaceable High-Speed Digital Design (Howard Johnson and Martin Graham) the composite rise time is:

$$T_c = \sqrt{T_1^2 + T_2^2 + \cdots}$$

Where Tc is the composite rise time, and the other T's are rise times of each component, such as the scope and the probe. Given that my scope is rated at 700 ps, removing its contribution from the 2.2 ns measurement (the square root of 2.2 squared minus 0.7 squared) puts the probe's rise time at really about 2.1 ns. Little enough difference for a not-terribly-speedy probe, but interesting.
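The loading and rise-time arithmetic above is easy to reproduce. Here is a minimal Python sketch, assuming the probe tip behaves as a pure 17 pF capacitance (its resistive part is ignored) and that the scope and probe are the only two contributors to the measured rise time. The function names are made up for this illustration.

```python
import math

def capacitive_reactance(c_farads, f_hz):
    """Magnitude of capacitive reactance, Xc = 1/(2*pi*f*C), in ohms."""
    return 1.0 / (2.0 * math.pi * f_hz * c_farads)

def component_rise_time(t_composite, *t_known):
    """Solve Tc^2 = T1^2 + T2^2 + ... for the single unknown term."""
    remainder = t_composite ** 2 - sum(t ** 2 for t in t_known)
    if remainder < 0:
        raise ValueError("known rise times exceed the composite rise time")
    return math.sqrt(remainder)

# Worst-case 17 pF tip capacitance at the probe's rated 60 MHz (assumed pure capacitance).
xc = capacitive_reactance(17e-12, 60e6)

# Current a 3 V signal at 60 MHz must supply to drive that reactance.
current = 3.0 / xc

# Measured 2.2 ns composite rise time with a scope rated at 700 ps.
probe_tr = component_rise_time(2.2e-9, 700e-12)

print(f"Xc = {xc:.0f} ohms")
print(f"Load current = {current * 1e3:.1f} mA")
print(f"Probe rise time = {probe_tr * 1e9:.2f} ns")
```

Swapping in 13 pF shows the best-case loading, which is still well above what many small logic gates can source at these frequencies.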
As a rough sanity check, High-Speed Digital Design shows that most of the energy is below a knee frequency. That's:

Fknee = 0.5/Tr

Where Tr is the rise time. With Tr in nanoseconds, Fknee comes out in GHz, so a 2.1 ns rise time gives a knee of about 0.24 GHz. Connecting the P2060 probe to a spectrum analyzer showed a spectrum where, above 250 MHz, there wasn't much energy, vaguely confirming the 2.1 ns rise time.

Going to X1 the spec claims no change in rise time, but I measured 11.5 ns.

I was prepared to laugh off these probes as only adequate for hobbyists. Surprise! Given the price point, they are surprisingly good.

For comparison I looked at Tektronix's passive probes. Again, I was surprised, having spent a great deal of money on these over the years. A 50 MHz 10x probe is only $27. Step up to 100 MHz and the cost roughly doubles. Better probes cost more; for 500 MHz figure a buck a MHz or more. At 1 GHz, the buck/MHz value still applies. More exotic active versions can run tens of thousands of dollars.

An aside: The scope I tested in the last Muse has a rated 25 MHz bandwidth, but there are faster models at 60 and 100 MHz. I wonder if the 60 MHz unit comes with 60 MHz probes? If so, the real system bandwidth would be:

$$BW_{system} = \frac{1}{\sqrt{\dfrac{1}{BW_{scope}^2} + \dfrac{1}{BW_{probe}^2}}}$$

Or 42 MHz, if one were to use the probe's 60 MHz spec. With the probe's measured 110 MHz performance, total system bandwidth would be 53 MHz.

Another aside: Microsoft has removed the equation editor from Office as they've lost the source code! Apparently, a security bug forced them to do a binary patch to it, but then another eight vulnerabilities were found, so they decided to eliminate the feature. I sure miss it. And, in this day and age, losing source code is pretty much a mortal sin. |
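Returning to the knee-frequency and system-bandwidth estimates in the probe discussion, both can be checked with a few lines of Python. This is only a sketch: it assumes the system bandwidth follows the usual inverse root-sum-of-squares rule for cascaded stages, which is an assumption here, though it does reproduce the 42 MHz and 53 MHz figures. The function names are hypothetical.

```python
import math

def knee_frequency(rise_time_s):
    """Knee frequency in Hz for a rise time in seconds: Fknee = 0.5/Tr."""
    return 0.5 / rise_time_s

def system_bandwidth(*bandwidths_hz):
    """Combined bandwidth of cascaded stages (assumed rule):
    1/BW^2 = 1/BW1^2 + 1/BW2^2 + ..."""
    return 1.0 / math.sqrt(sum(1.0 / bw ** 2 for bw in bandwidths_hz))

# Knee frequency for the probe's estimated 2.1 ns rise time.
print(f"Knee: {knee_frequency(2.1e-9) / 1e6:.0f} MHz")

# 60 MHz scope with a probe taken at its 60 MHz spec.
print(f"Spec probe: {system_bandwidth(60e6, 60e6) / 1e6:.0f} MHz")

# 60 MHz scope with the probe's measured 110 MHz bandwidth.
print(f"Measured probe: {system_bandwidth(60e6, 110e6) / 1e6:.0f} MHz")
```

Because the terms add as inverse squares, the slower element dominates: pairing a 60 MHz scope with a much faster probe recovers most, but never all, of the scope's rated bandwidth.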

||||||

| More on Test | ||||||

Jerry Trantow replied to my comments on test and bug filters in the last Muse:

I asked him about the VERIFY() macro as I couldn't find it in Writing Solid Code. He replied:

In my opinion the assert() macro, or something like it, is one of the most underutilized features of the C language. Wise developers are pessimists, and assume things will go wrong. That they will make mistakes. That unexpected things will happen. They instrument their code to catch these problems.

Jerry kindly sent a copy of the code for his ASSERT() and VERIFY(). Unfortunately, I can't run it in the Muse as it is fairly wide, in terms of number of columns, which messes up all of the newsletter's formatting. But you can get the code here and the required header here.

John Carter wrote:

Everyone needs a Shirley. Shirley (I can't remember her last name) was an assembly-line worker at the company I worked for in the 1970s. We discovered she had an uncanny knack for breaking systems. If there was a bug in the code, she found it. We moved her to our applications lab where, for a number of years, her role was to exercise new products. She pressed all of the wrong buttons and input data that should never have been used. None of the engineers had her ability to do exactly the wrong thing. She was also great at using the products correctly, so she was a wonderful asset both in demonstrating systems to customers and in showing us so-called smart guys our boneheaded errors. |

||||||

| This Week's Cool Product | ||||||

Not really a product, but it turns out TI has some nice design tools available here. Need to design a power supply? One of the tools will do pretty much all of the work for you. I suspect these have been around for some time, but I only recently found out about them.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||||

| Jobs! | ||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||

| Joke For The Week | ||||||

Note: These jokes are archived here.

Exam questions:

Computer Science: Write a fifth-generation computer language. Using this language, write a computer program to finish the rest of this exam for you.

Electrical Engineering: You will be placed in a nuclear reactor and given a partial copy of the electrical layout. The electrical system has been tampered with. You have seventeen minutes to find the problem and correct it before the reactor melts down.

Pre-Med: You will be provided with a rusty razor blade, a piece of gauze, and a full bottle of Scotch. Remove your appendix. Don't suture until your work has been inspected. You have 15 minutes.

Public Speaking: Twenty-five hundred riot-crazed aborigines are storming the classroom. Calm them. You may use any ancient language except Latin, Hebrew, or Greek.

Biology: Create life. Estimate the differences in subsequent human culture if this life form had developed 500,000 years earlier, with special attention to the effect, if any, on the English parliamentary system circa 1750. Prove your thesis.

Civil Engineering: With the boxes of toothpicks and glue present, build a platform that will support your weight when you and your platform are suspended over a vat of nitric acid.

Psychology: Based on your knowledge of their early works, evaluate the emotional stability, degree of adjustment, and repressed frustrations of each of the following: Alexander of Aphrodisias, Ramses II, and Gregory of Nicea. Support your evaluation with quotations from each man's work, making appropriate references. It is not necessary to translate.

Chemistry: You must identify a poison sample which you will find at your lab table. All necessary equipment has been provided. There are two beakers at your desk, one of which holds the antidote. If the wrong substance is used, it causes instant death. You may begin as soon as the professor injects you with a sample of the poison.

Sociology: Estimate the sociological problems which might be associated with the end of the world. Construct an experiment to test your theory.

Mechanical Engineering: The disassembled parts of a howitzer have been placed in a box on your desk. You will also find an instruction manual, printed in machine language. In ten minutes a hungry Bengal tiger will be admitted to the room. Take whatever action you feel appropriate. Be prepared to justify your actions.

Political Science: There is a red telephone on the desk beside you. Start World War III. Report at length on its socio-political effects, if any.

Religion: Perform a miracle. Creativity will be judged.

Metaphysics: Describe in detail the probable nature of life after death. Test your hypothesis.

General Knowledge: Describe in detail. Be specific.

Extra Credit: Define the universe, and give three examples. |

||||||

| Advertise With Us | ||||||

Advertise in The Embedded Muse! Over 28,000 embedded developers get this twice-monthly publication. |

||||||

| About The Embedded Muse | ||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |