You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe, go here or drop Jack an email.

| Contents | ||||

| Editor's Notes | ||||

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the word "embedded" in the subject line your email will wend its weighty way to me.

| Quotes and Thoughts | ||||

"I have approximate answers, and possible beliefs, and different degrees of uncertainty about different things. But I am not absolutely sure of anything and there are many things I don’t know anything about. I don’t feel frightened not knowing things." - Professor Richard Feynman

| Tools and Tips | ||||

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

| Fast Delivery | ||||

What's the fastest way to get a firmware project out the door? Ship junk. Unprogrammed flash. One company I know grades developers on the size of the program; a cynic there could do very well by dumping Moby Dick (1,172,046 bytes by my count) into memory. The system wouldn't work too well, or at all, but the project would beat all development records.

The second fastest way is to ship insanely high-quality code. Deming, Juran and the subsequent quality movement taught the manufacturing world that quality and speed go together. Quality stems from fabulous design that requires no rework; no rework means projects go out the door faster.

Alas, in the firmware world that message never resonated. Most projects devote half the schedule to debugging (which implies the other half should be named "bugging"). Typical projects start with a minimum of design followed by a furious onslaught of coding, and then long days and nights wrestling with the debugger. Capers Jones [1] studied 4000 late software projects and found that bugs are the biggest cause of schedule slippages. Benediktsson [2] showed that one can get the very highest level of safety-critical software for no additional cost, compared to the usual crap, by using the right processes.

Bottom line: quality leads to shorter schedules. Here are some tips for accelerating schedules:

1) Focus relentlessly on quality. Never accept anything other than the best. Don't maintain a bug list; rather, fix the bugs as soon as they are found. Bug lists always imply a bug deferral meeting, that time when everyone agrees on just how awful the shipped product will be. This is the only industry on the planet where we can (for now) ship known defective products. Sooner or later the lawyers will figure this out. A bug list produced in court will imply shoddy engineering... or even malpractice.

2) Requirements are hard, so spend time, often lots of time, eliciting them. Making changes late in the game will drastically curtail progress. Prototype when the requirements aren't clear or when a GUI is involved. Similarly, invest in design and architecture up front. How much time? That depends on the size of the system, but NASA [3] showed the optimum amount (i.e., the minimum on the curve) can be as much as 40% of the schedule on huge projects.

3) Religiously use firmware standards and code inspections. I have observed that it's about impossible to consistently build world-class firmware unless it is all written to a reasonable standard. Happily, plenty of tools are available that will check the code against a standard. Couple this practice with inspections of all new code. Plenty of research [4] shows that inspections are far cheaper (and faster) than the usual debugging and test. In fact, testing generally doesn't work [5]: it typically exercises only half the code. There are, however, mature tools that will greatly increase test coverage, and some will even automatically create test code.

4) The hardware is going to be late. Plan for it. When it finally shows up it will be full of problems. Our usual response is to be horrified that, well, it's late! But we know on the very first day of the project that this will happen. Invent a solution. One of the most interesting technologies for this is virtualization: you, or a vendor, build a complete software model of the system, one so complete that every bit of your embedded code will run on the model. Virtualization products exist, and vendors have vast libraries of peripheral models. I was running one of these on my PC, but it was a Linux-based tool, so I ran VMware to provide the Linux environment. The embedded system was also based on Linux, so the system was simulating Linux simulating Linux, and it ran breathtakingly well.

5) Run your code through static analyzers every night. That includes Lint, which is a syntax checker on steroids. Lint is a tough tool; it takes some learning and configuration to reduce false positives. But it does find huge classes of very real, and very hard-to-find, bugs. Also use one of the heavyweight static analyzers, the tools that do horrendous mathematical analysis of the code to infer run-time errors. In one case one of these tools found 28 null pointer dereferences in the 200 KLOC code base of a single infusion pump that was on the market.

6) Buy everything you can. Whether it's an RTOS, a filesystem or a protocol stack, it's always cheaper to buy rather than build. And buy the absolute highest-quality code possible. Be sure it has been qualified by a long service life, or even better by being used in a system certified to a safety-critical standard. Even if your product is as unhazardous as a TV remote control, why not use components that have been shown to be correct?

7) That last bit of advice applies to tools, too. Buy the best. A few $k, or even tens of $k, for tools is nothing. If a tool and the support given by the vendor can eke out even a 10% improvement in productivity, then at a loaded salary of $150k or so (about $15k a year per engineer) it quickly pays for itself.

8) Use proactive debugging. OK, those last two words are my own invention, but the idea is to assume bugs will occur and therefore seed the code with constructs that automatically detect the defects. For example, the assert() macro can find bugs for as little as one thirtieth [6] of the cost of conventional debugging.

9) Include appropriate levels of security. Pretty much everything is getting hacked. Even a smart fork today has Bluetooth and USB on board; that fork could be an attack vector into a network. Poor security means returns and recalls, or even lawsuits, so engineering effort will be squandered rather than invested in building new products. At the least, many products should have secure boot capabilities.

Never have embedded systems been so complex as they are today. But we've never had such a wide body of knowledge about developing the code, or such powerful tools. It's important we exploit both resources.

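Tip 8's proactive debugging is easiest to see in code. Here is a minimal C sketch of seeding two routines with assert() so that a contract violation is caught at the point where it happens rather than surfacing later as a mysterious symptom. The function names, the 12-bit ADC and the sample-averaging routine are hypothetical illustrations, not taken from any particular product.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical 12-bit converter: raw readings run from 0 to 4095. */
#define ADC_FULL_SCALE 4095u

/* Convert a raw ADC reading to millivolts. The asserts state the
   function's contract, so a caller that violates it is caught at the
   call site instead of producing a quietly wrong voltage. */
static uint32_t adc_to_millivolts(uint32_t raw, uint32_t vref_mv)
{
    assert(raw <= ADC_FULL_SCALE);   /* above full scale means a driver bug */
    assert(vref_mv > 0u);            /* a zero reference would silently give 0 mV */
    assert(vref_mv <= 10000u);       /* assumed ceiling; keeps raw * vref_mv far from overflow */
    return (raw * vref_mv) / ADC_FULL_SCALE;
}

/* Average a buffer of samples, asserting the preconditions that a
   debugging session would otherwise have to discover the hard way. */
static uint16_t average_samples(const uint16_t *samples, size_t count)
{
    assert(samples != NULL);         /* catch a null pointer before dereferencing it */
    assert(count > 0u);              /* avoid a divide by zero */
    assert(count <= 65536u);         /* keeps the 32-bit sum from overflowing */

    uint32_t sum = 0u;
    for (size_t i = 0u; i < count; i++) {
        sum += samples[i];
    }
    return (uint16_t)(sum / count);
}
```

Keep in mind that defining NDEBUG compiles every assert() out entirely. Some teams instead route failed assertions to their own handler that logs the file and line and halts at a breakpoint; that choice is beyond this sketch.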
1) Jones, Capers. Assessment and Control of Software Risks. Englewood Cliffs, NJ: Yourdon Press, 1994.
2) Benediktsson, O. Safety Critical Software and Development Productivity. The Second World Congress on Software Quality, Yokohama, Sept. 25-29, 2000.
3) Dvorak, Dan, and a cast of thousands. Flight Software Complexity, 2008.
4) Wiegers, Karl. Peer Reviews in Software, 2001, and about a zillion other sources.
5) Glass, Robert. Facts and Fallacies of Software Engineering, 2002.
6) Briand, L., Labiche, Y., Sun, H. Investigating the Use of Analysis Contracts to Support Fault Isolation in Object Oriented Code. Proceedings of the International Symposium on Software Testing and Analysis, pp. 70-80, 2002.

| Back to the Building Blocks | ||||

Last issue's article about a brain-dead initiative from the US government to improve software elicited some feedback. Peter House wrote:

Charles Manning (and some others) thought the article was for an April 1 issue! But he wrote:

| Assumptions | ||||

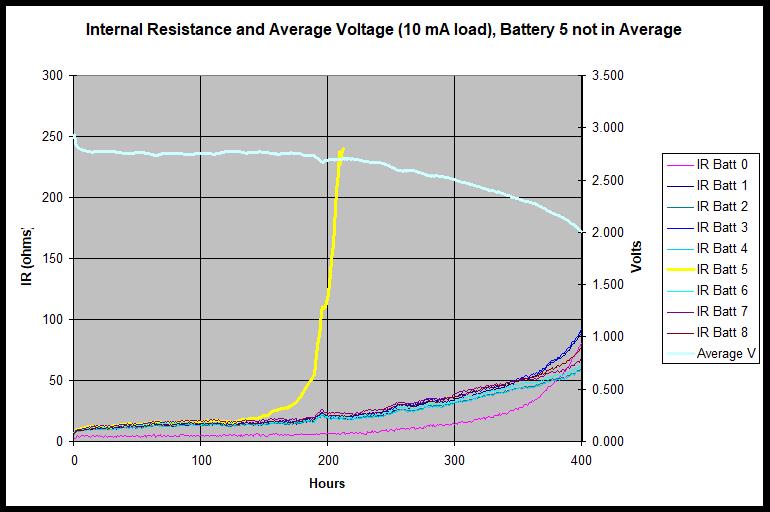

An article I can no longer find about ultra-low-power design contains this gem about CR2032 batteries: "A part of the coin cell’s energy is exhausted through self‐discharge; another part is not viable due to the increased source impedance near end‐of‐life. Both are assumed to take 20% of the capacity away." Huh? Who assumes that? Why would an engineer base anything important on an assumption? If some critical parameter is an assumption, at the least cite chapter and verse so the thoughtful engineer can examine the evidence himself.

The best engineers are pessimists. They assume one thing: everything will go wrong. That network of 5% resistors will all come in clustered at the worst possible end of the tolerance band. The transistor's beta will be just exactly at the datasheet's min value. The op amp's gain-bandwidth product will be lower than the rated min due to PCB parasitics.

Datasheets in many cases are getting worse. Far too many list typical specs in lieu of min or max. When worst case is listed, it's often just for extreme cases, like at some greatly elevated temperature. While that's important data, we need to understand how components will work over the range of conditions our systems will experience in the field.

Firmware people should share the same curmudgeon-ness. C's sqrt() can't take a negative argument (that's a domain error), so it makes sense to check or guarantee the argument is legal. Weird stuff happens, so we liberally salt the code with assert()s. We should use C's built-in classification macros, such as isnan() and isfinite() from math.h, to ensure floating point numbers are, well, floating point numbers and not NaNs or some other uncomputable value.

As for that assumption that "source impedance" and self-discharge account for 20% of the capacity, that's simply wrong. I have gobs of data points about CR2032s. In these ultra-low-power applications coin cells don't behave like anything I have seen published.

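A minimal C sketch of that floating point curmudgeon-ness follows. is_usable() wraps the C99 isfinite() macro to reject NaNs and infinities, and sqrt_arg() guarantees a legal argument for sqrt(). Both function names and the -1e-12 rounding tolerance are assumptions for illustration, not a standard API.

```c
#include <assert.h>
#include <math.h>
#include <stdbool.h>

/* True only for ordinary numbers: rejects NaN and +/- infinity.
   isfinite() is a C99 classification macro from <math.h>. */
static bool is_usable(double x)
{
    return isfinite(x);
}

/* Assumed tolerance: negative values this small are treated as
   rounding noise. Pick a value that suits your own math. */
#define SQRT_NEG_TOLERANCE (-1e-12)

/* Guarantee a legal argument for sqrt(). Tiny negative values caused
   by rounding are clamped to zero; anything more negative, or not
   finite at all, is a bug and trips an assert. */
static double sqrt_arg(double x)
{
    assert(is_usable(x));
    assert(x >= SQRT_NEG_TOLERANCE);
    return (x < 0.0) ? 0.0 : x;
}
```

A call site would then read something like `rms = sqrt(sqrt_arg(sum_sq / n));` (the variable names are hypothetical). Clamping is a design choice: it assumes a slightly negative value can only come from rounding, while the assert still catches genuinely bad inputs during development.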
Everyone uses "internal resistance," rather than impedance, to describe a cell's reduced ability to supply current as it discharges, but that's merely a proxy for some complex chemistry: a load on the battery causes the voltage to continue to drop the longer the load is applied.

The chart shows experimental results on nine Panasonic CR2032s. All are from the same batch; all are from a very reputable, high-volume distributor (Digikey). The data (real, experimental results, not assumptions) are interesting and puzzling. What the heck is going on with battery five? It dies at half the rated capacity, mirroring the behavior of a CR2025. But it is visually identical to the other eight, has the same markings, and is the same size. Is this just a dud, or is it something we can expect?

The batteries are being discharged at a 0.5 mA rate, so they should die at about 440 hours. Vendors rate a cell as "dead" at 2.0 volts, though they do not specify what that means: is it measured under any load? The charted data is the battery voltage about a millisecond after connecting a 10 mA load, which is probably a light load for an MCU that has woken up and is doing work. If there's any comm involved (think the "Internet of Things"), expect much higher current levels, even if for just a very short period of time.

Consider the internal resistances (IR). At the 300-hour point, well short of the 440 we expect, the average battery is at 2.5 volts with an IR of about 40 ohms. Ultra-low-current embedded systems can easily take tens of mA for a few ms when awake. If the system pulls just 25 mA (15 more than the 10 being pulled in the charted data), those extra 15 mA across 40 ohms drop another 0.6 volts, so the voltage falls to about 1.9 volts, below 2.0, at 300 hours. The battery is effectively dead long before its rated capacity is used.

Systems are getting extraordinarily complex. If we don't question our assumptions, that gadget may be built on quicksand, sure to fail if our guesses prove to be incorrect.

In this era of crowdsourcing it's easy to rely on hearsay, or Internet-say. But a question lobbed onto the net usually elicits a dozen conflicting answers, each of which is claimed to be authoritative. As Joe Friday didn't say: "Just the facts, ma'am."
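The coin-cell arithmetic in this article is easy to get wrong, so here is a small C sketch of it. hours_to_capacity() is simply mAh divided by mA (440 hours at 0.5 mA implies roughly 220 mAh). loaded_voltage() uses the simplest possible model, an ideal source behind a fixed internal resistance; as the measurements show, real cells are worse, because the voltage keeps sagging the longer a load is applied. Both function names are hypothetical.

```c
#include <assert.h>

/* Hours needed to draw a given capacity at a constant current:
   capacity (mAh) / drain (mA) = time (h). */
static double hours_to_capacity(double capacity_mah, double drain_ma)
{
    assert(drain_ma > 0.0);          /* a zero drain never empties the cell */
    return capacity_mah / drain_ma;
}

/* Simple model: an ideal voltage source in series with a fixed internal
   resistance (IR). Given the terminal voltage measured at one load
   current, raising the current by delta_i lowers the terminal voltage
   by delta_i * IR. This ignores the time-dependent sag real cells show. */
static double loaded_voltage(double v_measured, double i_measured_ma,
                             double i_new_ma, double ir_ohms)
{
    assert(ir_ohms >= 0.0);
    double delta_i_amps = (i_new_ma - i_measured_ma) / 1000.0;
    return v_measured - delta_i_amps * ir_ohms;
}
```

Plugging in the article's 300-hour numbers, loaded_voltage(2.5, 10.0, 25.0, 40.0) comes out at about 1.9 volts, under the 2.0 volt "dead" threshold even though hours_to_capacity(220.0, 0.5) says the cell should last 440 hours.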

| Failure of the Week | ||||

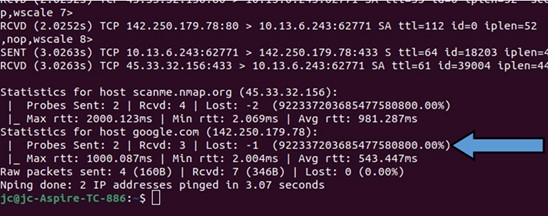

Jean-Christophe Matthae sent this, noting "At the end of the output, statistics computations are a little off. Probably a sign error like 'Lost = Sent - Rcvd', with strange display resulting from unexpected signed/negative values when only unsigned ones were rightfully expected..."

David Cherry sent this delightful bit of spam:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue.
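Jean-Christophe's guess is easy to reproduce. Below is a minimal C sketch, assuming the counters are unsigned 32-bit values in a hypothetical link_stats struct (we don't know how the program in the screenshot actually stores them). It shows how Sent - Rcvd misbehaves when Rcvd exceeds Sent, and one defensive alternative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical link statistics, kept as unsigned counters. */
typedef struct {
    uint32_t sent;
    uint32_t rcvd;
} link_stats;

/* Naive version: if rcvd ever exceeds sent (duplicates counted, or the
   two counters sampled at slightly different moments), the unsigned
   subtraction wraps modulo 2^32 into an enormous "lost" count. */
static uint32_t lost_naive(link_stats s)
{
    return s.sent - s.rcvd;
}

/* Defensive version: never wraps. A negative difference is reported as
   zero loss and flagged, so display code can report an anomaly instead
   of printing garbage. */
static uint32_t lost_checked(link_stats s, bool *anomaly)
{
    assert(anomaly != NULL);
    if (s.rcvd > s.sent) {
        *anomaly = true;
        return 0u;
    }
    *anomaly = false;
    return s.sent - s.rcvd;
}
```

With 100 packets sent and 103 received, lost_naive() returns 4294967293 (2^32 - 3); printed through a signed format, the same bits show up as -3. Either way the statistics look "a little off."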

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.

| Joke For The Week | ||||

These jokes are archived here.

From Harold Kraus:

So, Monday, I fielded a call from a Corps of Engineers manager I know from Scouting. He was trying to help a ranger with an RFID problem. They are monitoring RFID-tagged ferrets living in prairie dog holes (wild, American black-footed ferrets, not the tame European polecats). A ring antenna around the hole reads their ID code when they pop in and out of their hole. They wanted to record the ferrets' activity each day. It wasn't working.

Listening to the description, I was thinking that the problem was amateur cold solder joints. But, inspired, I asked, "Is there more than one tagged ferret around?"

"Yes."

"There's your problem. You see? More than one tagged ferret causes packet collisions on the RFID transceiver. You need to enclose the area with a screened cage with just one ferret inside each day. That way the reader will only get one packet at a time. You can get these screened cages at Grainger. Just call them up and ask for a ferret day cage."

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.