
||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||


| Editor's Notes | ||||

|

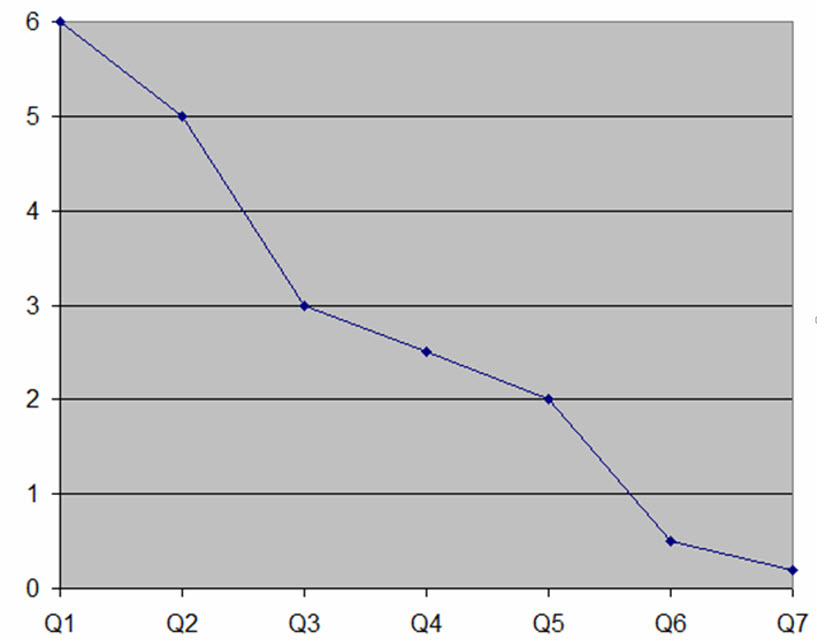

The average firmware team ships about 10 bugs per thousand lines of code (KLOC). That's unacceptable, especially as program sizes skyrocket. We can - and must - do better. This graph shows data from one of my clients, who was able to improve their defect rate by an order of magnitude (the vertical axis is shipped bugs/KLOC) over seven quarters using techniques from my Better Firmware Faster seminar. See how you can bring this seminar into your company. |

||||

| Quotes and Thoughts | ||||

"When in doubt, leave it out." - Joshua Bloch |

||||

| Tools and Tips | ||||

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Here's a nice article about dealing with capacitance and resistance in designing I2C circuits. Last issue I discussed Logiswitch's bounceless switches. Frequent correspondent Luca Matteini wrote this:

Neal Somos is using supercapacitors to measure a circuit's current:

This paper has some good thoughts about this approach. And I reviewed a number of tools for measuring current here. |

||||

| Freebies and Discounts | ||||

Brad Hoskins won the Zeroplus Logic Cube logic analyzer last month. This month we're giving away the Siglent SDS1102CML two-channel, 100 MHz bench scope that I reviewed here and here.

Enter via this link. |

||||

| Sound Static Analysis for Security Workshop | ||||

Last month the Sound Static Analysis for Security Workshop was held at NIST in Gaithersburg, MD. Though it was a two-day event, I could attend only the first day. The focus was strongly geared to Ada and SPARK developers and, as the name suggests, the theme was using static analysis tools to prove program correctness. Here are some random thoughts from the event:

There was a bit of discussion about Frama-C, an open-source platform with various plug-ins for analyzing C code for correctness. Have you used it? Any feedback?

David Wheeler summarized what he desires from tool vendors. I heartily agree with all of these suggestions:

One of the odd things about software is that testing is so problematic. If you build a bridge and run a ten-ton truck over it, you'll have high confidence that a motorcycle won't cause the thing to collapse. This isn't true with software. Mr. Wheeler pointed out that a simple addition of two 64-bit integers has 2^128 possible inputs. To test that comprehensively (obviously this is a contrived case) would require 1.7 quadrillion years on 1 million 8-core 4 GHz CPUs, given 5 cycles/test.

If a test finds a vulnerability, we know we're vulnerable. But if it doesn't find one, we know very little. Just as tests can't prove the absence of errors, they can only prove that something is insecure. Tests can't show that a system is secure.

We too often disparage legacy systems. Mr. Wheeler pointed out that if a system works, it's a legacy system. If your job is to make working software, then your job is to create legacy code. He quoted Joel Spolsky's "Things you should never do" which, paraphrased, includes:

While Joel's advice is generally good, I have been asked to audit some big firmware projects that were mired in trouble, and in a few cases have advised the companies to abandon the code and start over. Sometimes millions of dollars had been invested in a complete debacle that clearly had no possibility of success. In every case the problems stemmed from early architectural mistakes.

In a swipe at the C community, Mr. Wheeler stated "the biggest threat to security is the C/C++ standards bodies," as they continue to support undefined and unspecified behaviors.

The presentations are here. |

||||

| When I'm (No Longer) 64 | ||||

I recently incremented to official senior-citizen age, and find myself reflecting on a long career in electronics. My first paying job in this industry, in 1969 while I was still in high school, was as an electronics tech working on, among other things, Apollo ground support equipment. That company later realized that it needed to integrate microprocessors into its products; as a result, I was promoted to engineer at 19, while in college, just about the time the embedded industry started. So I've been incredibly lucky to have worked exclusively in the embedded world from its birth.

In the early 70s the computational power offered by microprocessors was frequently not enough to handle our workloads, so we often "embedded" a minicomputer along with an 8008. Data General's Nova was a cheap mini; a memory-less Nova 1200 was about $24k, but the 8008 chip alone cost some $2500 (all figures in 2018 dollars). It took a lot of extra circuitry to make a working computer from those early micros. With an 800 kHz clock these small CPUs were not speed demons, but with tightly coded assembly language we built near-infrared analyzers that sucked in 800 data points a couple of dozen times per second, solved floating-point polynomials, and even computed least-squares curve fits. Using paper-tape "mass storage," it took 3 days to reassemble and link the code, so it was routine to patch machine code and rebuild rarely. Program memory was 1702 EPROMs, each 24-pin chip storing only 256 bytes; 4 KB of memory required 16 of these EPROMs.

8008s gave way to 8080s, then to Z80s, 8088s, 68Ks, 1802s, and a huge range of other processors. The 8080 could address 64 KB, but no one could afford to buy that much RAM or EPROM. And no one believed we'd ever need so much. Today, of course, half a buck will buy a fast 32-bit microcontroller with more on-board memory than we had in our entire early-70s lab. Looking back over 45+ years of embedded, one can only be astonished at how much has changed.
We used to draw schematics with a pencil on D-sized vellum (22 x 34 inches), and every engineer had an electric eraser for making changes. PCB layout was done on mylar, using black tape to mark traces. My dad was a mechanical engineer who used mylar in the 60s and 70s, as it is much more dimensionally stable than paper. But in the 50s there was no mylar, so he and his colleagues created stable drawings on starched linen(!). There was no air conditioning at Grumman, where he worked, but they couldn't sweat, because a drop of moisture would bleed the starch from the linen.

CAD today has given us so much more capability. When FPGAs showed up in the 80s, the tools captured schematics and (after crashing far too often) produced routed binaries. VHDL and Verilog now make it practical to build enormously complex circuits on these programmable parts; doing so via schematics would be all but impossible.

Analog-to-digital converters weren't ICs; they were sizable potted modules that cost a fortune. 12 bits was about the best one could buy, mostly from Analog Devices, Analogic and Burr-Brown. Now Burr-Brown is gone and Analogic only makes medical equipment.

Who would have dreamed that we'd all have the Internet in our pockets? Who would have dreamed we'd have an Internet? Who would have imagined that a desktop OS would take tens of millions of lines of code? In 1971, who would have dreamed we'd have personal computers within a few years?

The 8008 was built using 10,000 nm geometry. TSMC's next fab, possibly for a 3 nm node (alas, there's an awful lot of smoke and mirrors around node sizes today), is rumored to cost $20 billion. At one point gate oxide thicknesses were 1.2 nm, or about 5 silicon atoms. (Hafnium oxide's much better dielectric constant relaxed this somewhat.) But isn't that incredible?

In 1981 my business partner and I built a system that had to run for a month off a decent-sized lead-acid battery. Now some systems can run for a decade off a coin cell.
When microprocessors first came out, no one would have imagined a computer-controlled toothbrush. Or 100 micros in a car. Products that respond to voice commands. And a thousand other embedded thingies that surround us.

Some people claim electronics is a whole new field every two years. Yet the fundamentals are all the same. Maxwell's Laws are still laws (as much as anything in physics is a "law"). Resonance, reactance, impedance and circuit theory haven't changed. The math we use has remained stable. Yes, lots does change, and fast. Today's engineer needs the age-old truths while keeping up with the breakneck speed of technology. And that is a lot of fun.

Going forward, it's clear that no matter how much computing power we have, more will be needed. For my whole career Moore's Law has been derided as being on its last legs. When we hit 1000 nm, process technology seemed at a dead end. Also at 250, 180, etc. Remember the seemingly insurmountable travails at 45 nm? Now, as we approach atomic scales, it does seem an end of a sort is near. But 3D flash is now a thing, so I'm not ready to bet on an electronics brick wall.

To me, more interesting than the latest process node are MCUs, which tend to be built on older, fully depreciated lines. In the past MCUs were suitable only for the smallest applications, but now they sport plenty of memory and high-speed clocks. Some vendors (notably Ambiq) sell MCUs that operate in the subthreshold FET region, which we were taught to avoid because temperature has as much influence on current flow as does voltage. (One of those age-old truths that still holds sway: the Shockley diode equation.) But at these low voltages extraordinarily low-current operation is possible. That's pretty cool.

Sensor technology has evolved in amazing ways. A gyro used to be a big, expensive, power-hungry mechanical contraption. Now it's a 50-cent IC. Sensors are evolving rapidly and will drive all sorts of new ideas.
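For reference, here is the Shockley diode equation in its usual form, where I_S is the saturation current, n the ideality factor, k Boltzmann's constant, T the absolute temperature and q the electron charge. Temperature enters both through the thermal voltage V_T and through I_S, which itself rises steeply with temperature. A FET's drain current in the subthreshold region follows a similar exponential law in the gate voltage, which is one way to see why that region was long considered too temperature-sensitive to use.

$$
I_D = I_S\left(e^{V_D/(n V_T)} - 1\right), \qquad V_T = \frac{kT}{q} \approx 25.9\ \text{mV at } T = 300\ \text{K}
$$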
What's somewhat amazing to me is that software technology hasn't changed a lot. Yes, in the 1980s we transitioned from assembly language to C, and some of us later to C++. But this is still basically a C world. Better languages exist. While C is fun, I fear it won't scale to the problems of tomorrow. But great work has been done to tame the language (think MISRA, CERT C, etc.).

Tools have improved a lot. Compilers are reliable and efficient; old timers will remember struggling to get a compiler to run reliably. Static analyzers were once not even the stuff of dreams, but today they are effective at finding large classes of bugs. Debugging tools, augmented by on-chip resources, offer a lot of capability.

It seems to me the von Neumann fetch/execute model that has worked so well for so long is an impediment to progress. If the goal is to transform data, all of those fetches burn up a ton of memory bandwidth. I have no idea where this will go, but the human brain can pass the Turing Test, and it doesn't seem there's any ISA at work. So we already have a model of what the future could bring. Certainly Google's Tensor Processing Unit gives one pause.

What's the future of the embedded engineer? More specialization, I'm afraid. As things become more complex we can't master everything. Teams will continue growing in size. Deeply-embedded people will be rarer as the scope of problems we solve moves more into software and a smaller percentage of the work involves manipulating bits and registers. Yet deeply-embedded people will be desperately needed, as those manipulations will get ever more difficult. A six-pin eight-bit MCU can have a 200-page datasheet. The peripherals, and even the bus structure, of a 32-bitter can baffle the brilliant.

The siren song of retirement is louder now, but I'm not ready to leave this amazing field. For tomorrow, there will be something unbelievably cool happening. |

||||

| Applying De Morgan's Laws to Firmware | ||||

Hardware engineers have long used De Morgan's laws to simplify logic circuitry, but did you know the laws can be a boon to creating more readable code? The laws are very simple. Given two booleans X and Y:

!(X and Y) = !X or !Y
!(X or Y) = !X and !Y

How does this apply to firmware? Suppose you have a statement like:

if(!(value_exists && !value_overflow))...

That's a bit of a head-scratcher. Instead, apply De Morgan to get:

if(!value_exists || value_overflow)...

Which is, in my opinion, a lot clearer. This is a simple example. On complex expressions De Morgan's laws can be applied recursively to simplify statements. Sometimes we get trapped by our reasoning into a complex expression which the laws will show to be ultimately very simple. For instance, writing * for AND and + for OR:

!(X * Y) * (!X + Y) * (!Y + Y)

Is really just:

!X

(The term !Y + Y is always true, and De Morgan turns !(X * Y) into !X + !Y, leaving (!X + !Y) * (!X + Y) = !X + (!Y * Y) = !X.) |

||||

| This Week's Cool Product | ||||

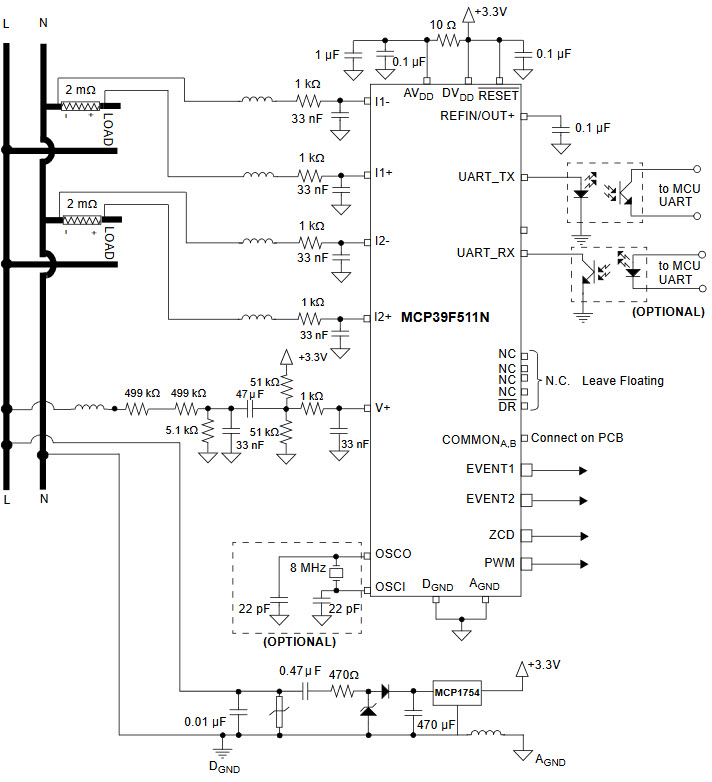

Not new, but I just ran across this: Microchip's MCP39F511N power monitoring chip is "a highly integrated, complete dual-channel single-phase power-monitoring IC designed for real-time measurement of input power for dual-socket wall outlets, power strips, and consumer and industrial applications. It includes dual-channel 24-bit Delta-Sigma ADCs for dual-current measurements, a 10-bit SAR ADC for voltage measurement, a 16-bit calculation engine, EEPROM and a flexible two-wire interface." It monitors the AC mains directly and computes active, reactive and apparent power, true RMS current, RMS voltage, line frequency, and power factor.

Note the opto-isolators to keep the mains voltage away from the rest of the system, and the fact that the 3.3 volt supply is derived from the AC mains. Two bucks in quantity. Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

Note: These jokes are archived here. I just got fired from my job at the keyboard factory. They told me I wasn't putting in enough shifts. In a related item, Taylor Hillegeist sent this link to the SuperCoder-2000 keyboard. |

||||

| Advertise With Us | ||||

Advertise in The Embedded Muse! Over 28,000 embedded developers get this twice-monthly publication. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |