|

||||||||||||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||||||||||||


| Editor's Notes | ||||||||||||||||

|

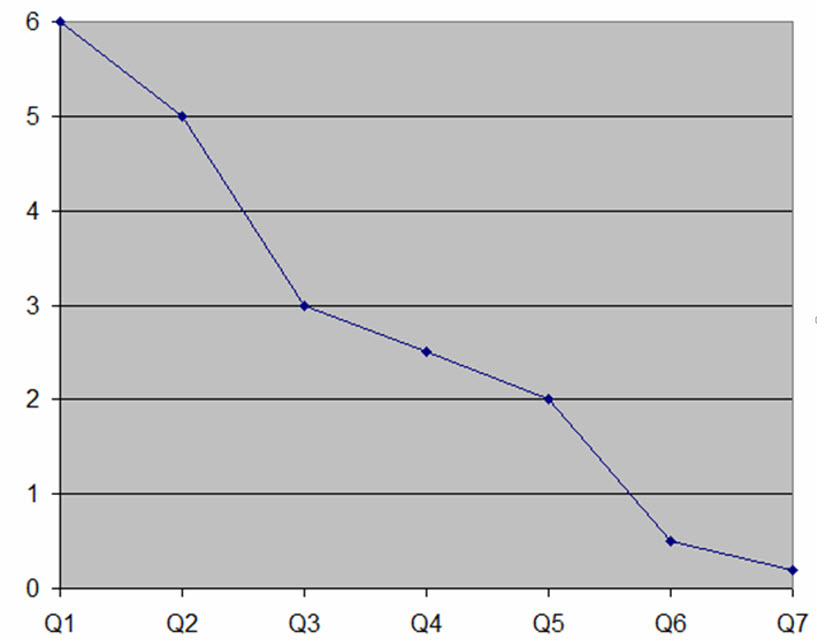

The average firmware team ships about 10 bugs per thousand lines of code (KLOC). That's unacceptable, especially as program sizes skyrocket. We can - and must - do better. This graph shows data from one of my clients, who was able to improve their defect rate by an order of magnitude (the vertical axis is shipped bugs/KLOC) over seven quarters using techniques from my Better Firmware Faster seminar. See how you can bring this seminar into your company. |

||||||||||||||||

| Quotes and Thoughts | ||||||||||||||||

"I believe that economists put decimal points in their forecasts to show they have a sense of humor." - William Gilmore Simms |

||||||||||||||||

| Tools and Tips | ||||||||||||||||

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

In the last issue I asked what is the fastest interrupt response you've had to deal with. Will Cooke wrote:

Kosma Moczek had an explosive situation:

Luca Matteini wrote:

Bruce Casne sent this:

Steve Strobel wrote:

Daniel McBrearty had a different take:

Daniel Wisehart responded to my comments on MISRA-C:

And Keir Stitt had a story with a useful lesson:

I have seen this problem many times in the past. Some engineers make a practice of updating all of the pertinent I/O registers frequently; say, each iteration for a polled loop structure, or via a periodic interrupt or task. |
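The periodic-refresh practice described above could look something like the following. This is only a sketch: the two "registers" are ordinary variables standing in for memory-mapped hardware so the code runs on a host PC, and the names `sim_port_dir`, `sim_timer_ctrl`, `io_config` and `refresh_io_registers` are hypothetical, as are the configuration values.

```c
#include <stddef.h>
#include <stdint.h>

/* Simulated peripheral registers (an assumption, so the sketch runs on a host).
 * On real hardware these would be the device's memory-mapped registers. */
static volatile uint8_t sim_port_dir;    /* hypothetical direction register */
static volatile uint8_t sim_timer_ctrl;  /* hypothetical timer control register */

/* One entry per register that must hold a known configuration value. */
struct reg_setting {
    volatile uint8_t *reg;
    uint8_t value;
};

static const struct reg_setting io_config[] = {
    { &sim_port_dir,   0xF0u },  /* illustrative values only */
    { &sim_timer_ctrl, 0x03u },
};

/* Rewrite every configuration register with its intended value.
 * Call once per pass of a polled loop, or from a periodic interrupt or task. */
void refresh_io_registers(void)
{
    for (size_t i = 0; i < sizeof io_config / sizeof io_config[0]; i++) {
        *io_config[i].reg = io_config[i].value;
    }
}
```

Keeping the intended values in one table means a register that gets corrupted is restored on the next refresh, and there is a single place to review when the configuration changes.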

||||||||||||||||

| Freebies and Discounts | ||||||||||||||||

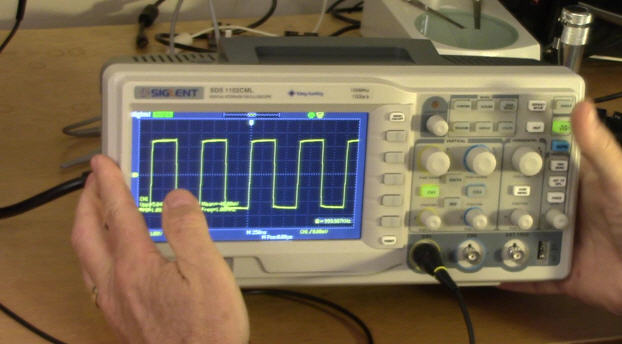

This month we're giving away the Siglent SDS1102CML two-channel, 100 MHz bench scope that I re-review later in this issue.

Enter via this link. |

||||||||||||||||

| Rules of Thumb | ||||||||||||||||

Capers Jones has more empirical data on software projects than anybody, and he shares it with the community in his various books and articles. The books are tomes only a confirmed software geek could stomach, as they are dense with data. I find them quite useful.

Over the years he has developed a number of rules of thumb. These are approximations only, but are reasonable first-order ways of getting a grip on a project.

Jones doesn't like using lines of code for a metric, and prefers function points. What is a function point? It's a measure of, well, functionality of a part of the software. There are a number of ways to define these, and I won't wade into the pool of competing thoughts that sometimes sound like circular reasoning. One complaint lodged against function points is they tend to be highly correlated with lines of code. I'm not sure if that is a bug or a feature. Regardless, in C one function point is about 130 lines of code, on average.

Here are the rules of thumb, where "FP" means function points:

- Approximate number of bugs injected in a project: FP^1.25
- Manual code inspections will find about 65% of the bugs. The number is much higher for very disciplined teams.
- Number of people on the project is about: FP/150
- Approximate page count for paper documents associated with a project: FP^1.15
- Each test strategy will find about 30% of the bugs that exist.
- The schedule in months is about: FP^0.4
- Full time number of people required to maintain a project after release: FP/750
- Requirements grow about 2%/month from the design through coding phases.
- Rough number of test cases that will be created: FP^1.2 (way too few in my opinion) |

||||||||||||||||

| Re-Review of Siglent's SDS1102CML Oscilloscope | ||||||||||||||||

A few years ago, I wrote a review of Siglent's SDS1102CML 100 MHz, two channel scope. My impression: I liked it. It packs a ton of value for the money.

Since then I have used it from time to time, even though it's the least capable of the bench scopes here. I've been curious about its durability. Some early users reported trouble with the power switch - it appears they fixed this, as I've had no issues.

Two 100 MHz channels for under $300 is hard to beat. Unlike some of the competing entry-level units, each channel has a full set of knobs. Well, "full set" may be overreaching a bit since, like any scope, they comprise gain, position, and enable controls only. But I greatly dislike a single set of shared controls, so the Siglent excels here.

The only problem I've run into is that one of the legs no longer snaps into position.

The display is adequate but doesn't have the resolution of a higher-end model, so letters, while entirely readable, are a little crudely formed. My Agilent (now Keysight) mixed-signal scope has beautifully sculpted letters and symbols... but it costs $16,000. I also have another Siglent scope, an SDS2304X, which has a great high-resolution display. For $2k it should. The screen resolution does mean that sine waves and other non-square signals appear a little pixelated. Less-than-perfect resolution is a tradeoff to get a decent instrument at a low price. As Mick Jagger said, "you can't always git whatcha want."

Occasionally I need a scope on the road. Any of the USB instruments would be a logical choice since I am already carrying a laptop. But I find myself bringing the Siglent. None of the USB versions I have matches its 100 MHz bandwidth (though there are plenty of models out there that do). I just find operating a scope with real knobs easier and more enjoyable than manipulating virtual controls via a mouse - or, worse, a laptop's trackpad.

So, after four years with the Siglent, I still think it's a terrific deal, and a decent instrument for home labs or even for professional settings where high performance isn't needed. At this writing it's available on Amazon Prime for just $299. That's an incredible price for a decent bench scope. You can have mine - I'm giving it away at the end of July, 2018. It needs a home where it will get more use.

Amazon has a number of reviews of the scope. All but one are positive. My favorite is this: "Looks really cool sitting on my desk. Docked one star because the instructions aren't clear enough on how to make the screen show the squiggly lines like in the photo." |

||||||||||||||||

| This Week's Cool Product | ||||||||||||||||

Switches bounce. Sometimes a lot. I have a Chinese FM transmitter that is almost impossible to turn off as pressing the on/off button while the unit is functioning almost always results in a quick off-then-on cycle. When I ask engineers about their debouncing strategy the answer is usually a variation on "I delay x ms", where "x" is a time informed by habit, rumor, or Internet flamewar. Yet in profiling switches I have found some that bounce not at all; others for over 100 ms. Debouncing is an important and interesting subject that I analyzed extensively in this report. The bottom line is that one should dig deeply into the nature of the switch being used. Alternatively, one can now buy bounce-free momentary-contact switches. Logiswitch sells switches with bounceless outputs. They come in a variety of styles. The company sent me samples, and all seem extremely well made and suitable for use in a NASA blockhouse; these are not cheap controls for your $29 toaster.
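For those who do debounce in software, the "delay x ms" idea mentioned above is often written as a count of consecutive identical samples rather than a blocking delay. Here is a minimal sketch of that approach, assuming the raw switch input is sampled at a fixed interval; the `debouncer` type and function names are hypothetical, and the threshold should come from profiling the actual switch, not from habit.

```c
#include <stdbool.h>
#include <stdint.h>

struct debouncer {
    bool stable;        /* last accepted (debounced) state */
    uint8_t count;      /* consecutive samples that disagree with 'stable' */
    uint8_t threshold;  /* samples required before accepting a change */
};

void debounce_init(struct debouncer *d, bool initial, uint8_t threshold)
{
    d->stable = initial;
    d->count = 0;
    d->threshold = threshold;
}

/* Feed one raw sample, taken at a fixed polling interval.
 * Returns the debounced state. */
bool debounce_update(struct debouncer *d, bool raw)
{
    if (raw == d->stable) {
        d->count = 0;               /* sample agrees: restart the count */
    } else if (++d->count >= d->threshold) {
        d->stable = raw;            /* held long enough: accept the new state */
        d->count = 0;
    }
    return d->stable;
}
```

With a 5 ms polling interval, a threshold of 4 demands 20 ms of steady input before a change is accepted. A switch that bounces for over 100 ms, like some of those mentioned above, would need a much larger count.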

Typical switches offered by Logiswitch

Examining the switches, it appears these are all SPDT going to an IC that presumably acts as an SR flip-flop, which is guaranteed bounceless. But they're a bit more sophisticated than that. There's a hardware acknowledge cycle. When your system detects the switch closure, it responds with an ACK cycle that clears the asserted "switch closed" output from the Logiswitch. That greatly simplifies the code as there's no convoluted logic to wait for the switch to be released (plus all of the debouncing code). There's also an intriguing "toggle" output which changes state every time the switch is pressed.

The company also makes ICs that debounce arbitrary SPST switches, and these support the ACK handshake protocol.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |
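To illustrate why the acknowledge cycle described above simplifies the firmware, here is a host-side sketch of the polling code. It models only the behavior described in the text: a latched "switch closed" output that stays asserted until acknowledged. The actual signal names, polarities and timing of the Logiswitch parts are not covered here, so everything in the code (`sim_closed_latch`, `read_switch_output`, `pulse_ack`, `poll_switch`) is a hypothetical stand-in for real GPIO accesses.

```c
#include <stdbool.h>

/* Stand-in for the hardware: a latched "switch closed" output.
 * On a real board this would be a GPIO input driven by the switch. */
static bool sim_closed_latch;

/* Hypothetical GPIO wrappers; the real ones depend on the part and the board. */
static bool read_switch_output(void) { return sim_closed_latch; }
static void pulse_ack(void)          { sim_closed_latch = false; }

static unsigned press_count;

/* Poll once: if the latched output is asserted, count the press and
 * acknowledge it so the output clears. There is no debounce logic and
 * no waiting for the switch to be released. */
void poll_switch(void)
{
    if (read_switch_output()) {
        press_count++;
        pulse_ack();
    }
}
```

Because the output clears on the acknowledge, polling again before the next press does not count the same closure twice.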

||||||||||||||||

| Jobs! | ||||||||||||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||||||||||||

| Joke For The Week | ||||||||||||||||

Note: These jokes are archived at www.ganssle.com/jokes.htm.

Have you heard of that new band "1023 Megabytes"? They're pretty good, but they don't have a gig just yet. |

||||||||||||||||

| Advertise With Us | ||||||||||||||||

Advertise in The Embedded Muse! Over 27,000 embedded developers get this twice-monthly publication. |

||||||||||||||||

| About The Embedded Muse | ||||||||||||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |