|

||||||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||||||

| Contents | ||||||||||

| Editor's Notes | ||||||||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here, here and here. |

||||||||||

| Quotes and Thoughts | ||||||||||

Management has three basic tasks to perform:

- Philip Crosby |

||||||||||

| Tools and Tips | ||||||||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Brian Rosenthal wrote: This Android app (https://play.google.com/store/apps/details?id=com.yatrim.stlinkp) allows you to plug your ST-LINK/V2 into your phone and download a new program to an STM32 device. It requires an OTG adapter, but beats having to drag your laptop out to the floor for a quick update!

In the last Muse I ran my top ten reasons for project failure. Chris Gates added:

|

||||||||||

| Freebies and Discounts | ||||||||||

Dan Neuburger won August's Siglent SDS2304X 300 MHz oscilloscope. September's giveaway is a DS213 miniature oscilloscope that I reviewed in Muse 374. About the size of an iPhone, it has two analog and two digital channels, plus an arbitrary waveform generator. At 15 MHz (advertised) it's not a high-performance instrument, but given the form factor it's pretty cool.

Enter via this link. |

||||||||||

| Ultra-Low Power Systems | ||||||||||

At last month's Embedded Systems Conference there were two themes that seemed to run through the show: autonomous driving (not surprising as the ESC was merged with "Drive" - an automotive electronics event), and ultra-low power embedded systems.

I still see wild claims being made about many years of operation from small batteries like coin cells. These all suppose the systems are sleeping most of the time, of course, but too many issues are glossed over.

Five years ago I wrote a series of articles about this, now collected here. Over an 18-month period I discharged about 100 cells to study their behavior in ultra-low power applications, and ran numerous other experiments to learn what one can reasonably expect. Here are the key takeaways:

My report covers hardware and software considerations for building these sorts of systems. |
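
The "years from a coin cell" arithmetic behind such claims can be sketched in a few lines of C. This is only the naive estimate: the function names are hypothetical, and any capacity or current figures you feed it are your own assumptions, not numbers from the report. It ignores self-discharge, leakage, and voltage droop under load, so treat the result as an optimistic upper bound.

```c
#include <assert.h>

/* Hypothetical helper: average current in microamps for a system that is
 * awake for a fraction duty_awake (0.0 to 1.0) of the time and asleep
 * for the rest. */
double average_current_ua(double awake_ua, double sleep_ua, double duty_awake)
{
    assert(duty_awake >= 0.0 && duty_awake <= 1.0);
    return duty_awake * awake_ua + (1.0 - duty_awake) * sleep_ua;
}

/* Naive battery life in years: capacity divided by average current.
 * Ignores self-discharge, leakage, and voltage droop under load. */
double battery_life_years(double capacity_mah, double avg_ua)
{
    assert(avg_ua > 0.0);
    double hours = (capacity_mah * 1000.0) / avg_ua; /* mAh -> uAh, then / uA */
    return hours / (24.0 * 365.0);
}
```

As an illustration with assumed numbers: a 220 mAh cell, 5 mA awake, 2 µA asleep, and awake 0.1% of the time gives an average of about 7 µA and roughly 3.6 years. Note that the sleep current and the brief awake bursts contribute comparable amounts here, which is why small errors in either assumption swing the answer so much.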

||||||||||

| Convolutions | ||||||||||

Raw data tends to be pretty raw. The real world is a noisy place; everything from distant lightning strikes to Johnson noise corrupts what we'd hope would be pristine data read from an ADC. It's common to average some number of ADC readings to reduce the noise and better approximate the actual data we're trying to measure.

Averaging reduces Gaussian noise by the square root of the number of samples. Ten samples improve the signal-to-noise ratio by about a factor of three; 100 samples yield an order of magnitude improvement.

There are two problems with averaging: it's slow (though read on for a better algorithm), and the average presumes all of the data, no matter how old, should contribute equally to the result. Sometimes a more complex filter will better fit the data.

When I went to college we learned about convolutions in, of all places, circuit theory. The definition is:

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$$

The math baffled me and I couldn't see any reason we'd use such a thing. And yet in my day job we were massaging data using the Savitzky-Golay algorithm to fit data to a polynomial (see Muse 254), which, though I didn't realize it at the time, is nothing more than a convolution.

It turns out convolutions are simplicity itself disguised in academic rigor meant to torment university juniors. Suppose you're reading an ADC and averaging the most recent N samples. That's the same as multiplying the entire universe of input data by zero, except for the most recent N, which are multiplied by 1 and averaged. In math-speak, the input data is convolved with a function whose value is zero except for the most recent N samples, where it is 1. The data slides through the N points of the average.

But does that make sense? It supposes that old data is just as representative as the latest readings. This is fine in many circumstances, say for data that changes slowly. But it will slow the system's response and smear rapid changes.

There are many alternatives; the most common, perhaps, is the weighted moving average. Multiply the most recent reading by, say, 5, the next most recent by 4, and so on. This is the Finite Impulse Response filter (FIR), which smooths data using only recent readings. Another option is the Infinite Impulse Response (IIR) filter, which includes all of the data collected to date.
An example might use a weight of 5 for the most recent, 2.5 for the next, 1.25 after that, and so on.

With simple averages one sums the data and divides by the number of samples. The weighted moving average's divisor is the sum of the various weights.

So, how does one implement moving and weighted moving averages in code? The simplest approach is to save a circular buffer of the most-recent N data points and do a multiply-accumulate of the entire buffer each time a result is needed. That's computationally expensive. A better approach for the simple moving average is to compute:

$$A_{N+1} = \frac{X_{N+1} + N \cdot A_N}{N+1}$$

Where $A_{N+1}$ is the new moving average, N is the number of samples, $X_{N+1}$ is the latest reading, and $A_N$ is the previous average. This is a simple, fast calculation and one does not need to store old data. (For a proof of this non-intuitive result see this). (The division can sometimes be eliminated, if absolute units aren't needed).

Convolutions get more interesting in picture processing where pixels in two dimensions, say a 3x3 square, are manipulated to sharpen or defocus images. |
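
The three approaches described above might be sketched in C as follows. This is a minimal illustration, not code from the Muse: the type and function names and the `WINDOW` length are all hypothetical, and samples are kept as `double` for clarity where a real embedded system would more likely use integers. One caveat on the recursive formula: if the sample count grows with every reading it yields the mean of everything seen so far, while holding the count fixed turns it into an exponentially weighted (IIR-style) filter rather than a true N-point window.

```c
#include <assert.h>
#include <stddef.h>

#define WINDOW 4  /* hypothetical window length N */

/* Simple moving average: circular buffer plus a running sum, so each
 * update costs one subtract and one add rather than N additions.
 * The struct must be zero-initialized before first use. */
typedef struct {
    double buf[WINDOW];
    double sum;
    size_t head;   /* next slot to write; the oldest sample once full */
    size_t count;  /* samples stored so far, at most WINDOW */
} moving_avg_t;

double moving_avg_update(moving_avg_t *m, double x)
{
    if (m->count == WINDOW)
        m->sum -= m->buf[m->head];   /* drop the oldest sample */
    else
        m->count++;
    m->buf[m->head] = x;
    m->sum += x;
    m->head = (m->head + 1) % WINDOW;
    return m->sum / (double)m->count;
}

/* Weighted moving average (FIR): samples[0] is the most recent reading
 * and weights[0] applies to it. The divisor is the sum of the weights. */
double weighted_avg(const double *samples, const double *weights, size_t n)
{
    double acc = 0.0, wsum = 0.0;
    assert(n > 0);
    for (size_t i = 0; i < n; i++) {
        acc += weights[i] * samples[i];
        wsum += weights[i];
    }
    return acc / wsum;
}

/* Recursive update A_{N+1} = (X_{N+1} + N * A_N) / (N + 1).
 * n is the number of samples already folded into avg. */
double running_avg_update(double avg, size_t n, double x)
{
    return (x + (double)n * avg) / (double)(n + 1);
}
```

The circular buffer version trades N words of RAM for an exact N-point window; the recursive version needs no history at all, which is the attraction on a small microcontroller.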

||||||||||

| A $50 Vector Network Analyzer | ||||||||||

Pete Friedrichs offered a review of an inexpensive vector network analyzer. Pete is the fellow who wrote a couple of fascinating books about building electronic projects... from scratch. Like making your own capacitors and vacuum tubes. His review:

The NanoVNA is a two-port vector network analyzer, 50 kHz to 900 MHz. This thing will compute/plot return loss, SWR, complex impedance, filter sweeps, Smith charts, and more.

The whole instrument is about the size of an Altoids tin. The display is a color touchscreen; additional input is made through a tactile rocker switch. The device is rechargeable and contains its own lithium battery, which is recharged through a USB-C port.

Speaking of USB, the device presents itself to a PC as a comm port. There is a set of instructions that allow the unit to be controlled and queried through plaintext messages. People have already written PC software to control and interrogate the device so as to produce large graphs and perform additional functions like time domain reflectometry. At least one person has written a LabVIEW front end for it.

There is a fairly robust user community for this device, as evidenced by YouTube videos and a users' group on Google.

Here's the most incredible part: the instrument costs about 50 BUCKS! No, it's not the same as a $XX,XXX Agilent VNA, but it does much of what a "real" VNA will do and it's three orders of magnitude cheaper. In my experience the instrument you have is ALWAYS better than the instrument you'd like but can't afford. In fact, more than one user with access to a "real" VNA for comparison has reported reasonable agreement between their high-end instrument and the NanoVNA.

I purchased one recently and have had endless fun with it. I run Linux, and on my boxes it shows up as ttyACM0. I have successfully controlled the device through minicom, and I'm now contemplating writing some software of my own.

I submit the above link as an example, not necessarily an endorsement.

Actually, the NanoVNA is available all over the Internet from multiple sources. There are at least three versions. The "original" version is claimed to be the best. Details of what comprises the "original" vs "clone" versions, along with modifications that can improve the clones, can be found in the Google user group.

This is one of those gadgets that is too cheap NOT to buy. In my opinion it's been worth the price of admission just for the hands-on learning. |
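
For anyone tempted to write their own software against the plaintext serial interface Pete describes, a starting point on Linux might look like the sketch below. Everything here is an assumption rather than documented NanoVNA behavior: the function names are hypothetical, the carriage-return terminator is a guess at what the device's shell expects, and the available command strings depend on the firmware, so check them against your own unit (for example interactively in minicom) first.

```c
#include <assert.h>
#include <fcntl.h>
#include <string.h>
#include <termios.h>
#include <unistd.h>

/* Open a serial device such as /dev/ttyACM0 in raw mode.
 * Returns a file descriptor, or -1 on failure.
 * The baud rate is left at its default; it is assumed not to matter
 * for a USB virtual comm port. */
int serial_open_raw(const char *path)
{
    struct termios tio;
    int fd = open(path, O_RDWR | O_NOCTTY);
    if (fd < 0)
        return -1;
    if (tcgetattr(fd, &tio) != 0) {
        close(fd);
        return -1;
    }
    /* Raw mode: no echo, no line editing, no character translation. */
    tio.c_iflag &= ~(tcflag_t)(IGNBRK | BRKINT | PARMRK | ISTRIP |
                               INLCR | IGNCR | ICRNL | IXON);
    tio.c_oflag &= ~(tcflag_t)OPOST;
    tio.c_lflag &= ~(tcflag_t)(ECHO | ECHONL | ICANON | ISIG | IEXTEN);
    tio.c_cflag &= ~(tcflag_t)(CSIZE | PARENB);
    tio.c_cflag |= CS8 | CLOCAL | CREAD;
    tio.c_cc[VMIN] = 0;    /* read() may return with no data ... */
    tio.c_cc[VTIME] = 10;  /* ... after a 1 second timeout (tenths of s) */
    if (tcsetattr(fd, TCSANOW, &tio) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Send one plaintext command followed by a carriage return
 * (the terminator is an assumption). Returns 0 on success, -1 on error. */
int serial_send_command(int fd, const char *cmd)
{
    size_t len = strlen(cmd);
    assert(len > 0);
    if (write(fd, cmd, len) != (ssize_t)len)
        return -1;
    if (write(fd, "\r", 1) != 1)
        return -1;
    return 0;
}
```

A caller would open the port with `serial_open_raw("/dev/ttyACM0")`, send a command with `serial_send_command()`, and then `read()` the reply until the timeout expires. Keeping the command sender separate from the port setup makes it easy to exercise against a pipe or a log file without the hardware attached.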

||||||||||

| On Comments | ||||||||||

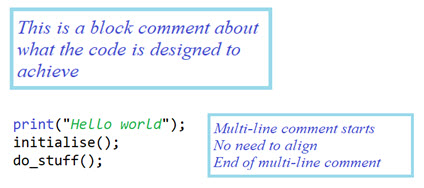

Many, many people commented on commenting function headers from the last Muse. A common theme: Comments are bad because they are often incorrect. As the code changes, the comments don't. We've all been cursed with code like that.

My take is that as professionals we are obligated to maintain our comments. To abdicate that responsibility is like the woodworker who never sharpens his tools. Sure, the blade will still cut, leaving a rough surface behind, while filling the shop with smoke. But with enough post-cut sanding he can make something that is somewhat presentable, usually, slowly, with plenty of four-letter words peppering the work.

Here are some highlights from readers. Martin Buchanan wrote:

Tom Mazowiesky commented:

John Carter and others had somewhat dissenting opinions:

Caron Williams suggested:

Many people wrote to chastise me about my yearning for an IDE that supports in-code WYSIWYG editing, like we take for granted with word processors. The common theme was that we already have these, in terms of editors that support keyword highlighting, comments in different colors, etc.

While those are great features, my dream goes further: I want to embed pictures in source code. People complain that comments can get too long. Well, as the saying goes, a picture is worth a thousand words. How about tables (not crummy ASCII-formatted versions that get scrambled with changing indentation settings)? Maybe even complex objects like spreadsheets.

It's easy to see how an external tool could strip this stuff and present the compiler with nothing more than the printable ASCII text, but that would be a kludge. I'd sure like to see the next C standard support XML source files, or something similar, so the compilers themselves can digest files rich with visual information. Clarity is our goal, and sometimes pure text is a forest whose trees hide what could be presented in obvious ways.

Tony Whitley had similar thoughts:

|

||||||||||

| Jobs! | ||||||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||||||

| Joke For The Week | ||||||||||

These jokes are archived here.

From Scott Anonymous, a real story:

Here's another story about code reviews. In light of the comments about 'figuring out what the code is supposed to do', you need comments or external documentation, as others have said.

In one company I was at, an engineer submitted code for review just an hour or two before the review was scheduled. There was no documentation, and only five comments in the 1 KLOC file. One comment was the less-than-helpful:

//#include <math.h> // fix this

As one of my co-workers said, "I can't review this. This first function has nothing that says what it's supposed to do. I don't know if it's a good function for displaying letters on the screen or a bad square root function."

Yes, the function name was something like "put_str", but that could have been wrong too! No documentation, remember? |

||||||||||

| About The Embedded Muse | ||||||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |