
You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||

| Contents | ||||||

| Editor's Notes | ||||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here, here and here.

The Embedded Systems Conference will be August 27-29 in Santa Clara. I'll be giving one talk, and Jacob Beningo and I will do a "fireside chat" about how this industry has changed over the last 30 years (or more).

Jack's latest blog: Data Seems to Have No Value. |

||||||

| Quotes and Thoughts | ||||||

"The longer a defect stays in the system, the more it costs to repair." - Niels Malotaux |

||||||

| Tools and Tips | ||||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. |

||||||

| Freebies and Discounts | ||||||

This month's giveaway is a slightly-used (I tested it for a review) Siglent SDS 2304X, a 300 MHz 4-channel scope with 16 digital channels, that retails for about $2500.

Enter via this link. |

||||||

| Single-Pixel Errors Confound AI | ||||||

In Muse 372 I wrote about an article in the Communications of the ACM on metamorphic testing. The authors of that paper sent an interesting, and disturbing, letter to the editor in the August 2019 issue of that publication. The letter briefly states how, in their testing, they found that a single-pixel change in an image can completely confuse an AI engine that had been trained to recognize an object, specifically in the domain of self-driving cars. A single-pixel change foxed the AI 24 times out of 1000 LIDAR images tested. A two-pixel change foiled the algorithm in three more images.

David Parnas replied to the letter, stating "In safety-critical applications, it is the obligation of the developers to know exactly what their product will do in all possible circumstances. Sadly, we build systems so complex and badly structured that this is rarely the case."

While I am enthusiastic about the prospects for AI, I remain concerned that, as conceived today, these are black boxes whose operation - transfer functions, if you will - no one understands. Some see this as a feature; after all, the brain guiding a car today is equally opaque.

One wonders if chaos theory should be merged with AI. The canonical butterfly flapping its wings in Brazil that leads to chaotic weather in North Dakota seems quite analogous to tiny pixel changes that confound the AI. Random noise guarantees pixel alterations, and it's probably impossible to test for all noise scenarios.

A (young) physicist told me that in the future he expects AI will uncover new theories about the universe without any corresponding knowledge of fundamental principles. We may learn a new E=mc², for instance, from empirical data without ever understanding where the formula came from. Data without insight is not science as I know it.

The light is red, but a cop is waving you through the intersection. Or, is that apparent officer really a kid bouncing a ball? While I'm convinced these are solvable problems, since humans solve them every day, it sure seems that level 5 automation is a long way off. |
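To make the single-pixel idea concrete, here is a minimal sketch in C of a metamorphic check: change one pixel at a time and count how often the classification flips. Everything in it is an assumption made for illustration. `classify()` is a toy brightness-threshold stand-in rather than a trained network, the 8x8 image size and `SUM_THRESHOLD` are invented, and none of it comes from the letter or the original paper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define IMG_PIXELS    64u    /* hypothetical 8x8 grayscale image */
#define SUM_THRESHOLD 8000u  /* hypothetical decision boundary */

/* Toy stand-in for a trained classifier (an assumption, not a real AI
 * engine): returns 1 ("object") when total brightness exceeds the
 * threshold, otherwise 0. */
int classify(const uint8_t *img)
{
    unsigned long sum = 0;

    assert(img != NULL);
    for (size_t i = 0; i < IMG_PIXELS; i++)
        sum += img[i];
    return sum > SUM_THRESHOLD;
}

/* Metamorphic relation under test: altering a single pixel should not
 * change the label. Sets each pixel in turn to new_value (on a scratch
 * copy) and returns how many of those single-pixel changes flip the
 * classification relative to the unmodified image. */
size_t count_single_pixel_flips(const uint8_t *img, uint8_t new_value)
{
    uint8_t scratch[IMG_PIXELS];
    size_t flips = 0;

    assert(img != NULL);
    const int baseline = classify(img);

    for (size_t i = 0; i < IMG_PIXELS; i++) {
        memcpy(scratch, img, sizeof scratch);
        scratch[i] = new_value;
        if (classify(scratch) != baseline)
            flips++;
    }
    return flips;
}
```

With this toy classifier, an image that sits just above the threshold flips on every single-pixel change to zero, while an image far from the threshold never flips. A real test would swap in the actual recognition engine and a perturbation model that reflects sensor noise.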

||||||

| More on Code Inspections v. Static Analysis | ||||||

Jeff Johnson responded to Muse 379's article about code inspections and static analysis:

John Hartman wrote:

Harold Kraus sees a role for both:

|

||||||

| Static Analysis v. Assertions | ||||||

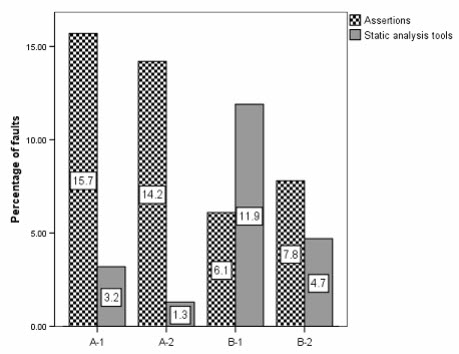

The comments in the previous article were about comparing the value of static analysis to code inspections. What about static analysis v. the use of assertions? "Assessing the Relationship between Software Assertions and Code Quality: An Empirical Investigation" by Gunnar Kudrjavets, Nachiappan Nagappan, and Thomas Ball addresses this question. They looked at the efficacy of both in four projects. The first two (A-1 and A-2) were each a bit over 100 KLOC; B-1 and B-2 were each about 370 KLOC. So these were not those ridiculous academic experiments where four sophomores generated a little code and lots of (suspect) results. They found:

The authors also found that increasing the assertion density in the code led to a greatly-reduced bug rate. The moral: Never rely on a single tool or process for finding errors in the code. Inspections are very effective. Static analysis is very effective. Assertions are very effective. Combined, expect far fewer shipped defects. |
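For readers who want a picture of what higher assertion density looks like in practice, here is a minimal sketch in C of a small byte queue seeded with precondition and invariant checks. The queue, its names (`queue_t`, `queue_put`, `queue_check`) and its size are hypothetical and chosen only for illustration; they do not come from the paper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QUEUE_SIZE 8u  /* hypothetical capacity */

typedef struct {
    uint8_t data[QUEUE_SIZE];
    size_t  head;   /* index of the next write */
    size_t  tail;   /* index of the next read */
    size_t  count;  /* number of bytes currently stored */
} queue_t;

/* Invariant check, called on entry to and exit from every operation. */
void queue_check(const queue_t *q)
{
    assert(q != NULL);
    assert(q->head < QUEUE_SIZE);
    assert(q->tail < QUEUE_SIZE);
    assert(q->count <= QUEUE_SIZE);
    assert((q->tail + q->count) % QUEUE_SIZE == q->head);
}

void queue_init(queue_t *q)
{
    assert(q != NULL);
    q->head = 0;
    q->tail = 0;
    q->count = 0;
    queue_check(q);
}

void queue_put(queue_t *q, uint8_t value)
{
    queue_check(q);
    assert(q->count < QUEUE_SIZE);  /* precondition: caller must not overfill */
    q->data[q->head] = value;
    q->head = (q->head + 1u) % QUEUE_SIZE;
    q->count++;
    queue_check(q);
}

uint8_t queue_get(queue_t *q)
{
    queue_check(q);
    assert(q->count > 0);           /* precondition: queue must not be empty */
    const uint8_t value = q->data[q->tail];
    q->tail = (q->tail + 1u) % QUEUE_SIZE;
    q->count--;
    queue_check(q);
    return value;
}
```

Standard `assert()` compiles to nothing when `NDEBUG` is defined, so a team has to decide whether checks like these stay in shipped firmware or are replaced with a project-specific handler.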

||||||

| This Week's Cool Product | ||||||

Not really a product, but cool nonetheless: Analog Devices' Analog Dialogue is a free e-magazine that always has something of interest. We embedded people must interface to the real world, so analog circuits are very important (and analog design is a lot of fun, and challenging in a very different way from digital work). But the publication has more than that; recent issues have included articles on dealing with clock skew, over-the-air firmware updates, and much more. Sure, their components are always featured, but there's deep technical meat we can all use. Subscribe here.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||||

| Jobs! | ||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||

| Joke For The Week | ||||||

Note: These jokes are archived here.

Last issue I ran the (apparently true) contortions needed to reboot a GE light bulb. Several readers sent a video of the process; it's more fun to watch at 2X normal speed.

Carl Farrington sent the instructions to recalibrate the gas gauge in a Prius:

1. Park the vehicle so as it is level, front to back and side to side. |

||||||

| About The Embedded Muse | ||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |