|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Public Seminars: I'll be presenting a public version of my Better Firmware Faster seminar outside of Boston on October 22, and in Seattle on October 29. There's more info here. Or email me.

On-site Seminars: Have a dozen or more engineers? Bring this seminar to your facility. More info here.

Latest blog: My review of the movie First Man. |

||||

| Quotes and Thoughts | ||||

"Testing shows the presence, not the absence of bugs." Edsger Dijkstra |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Chris Hinds sent a link to a paper he and William Hohl wrote about fixed-point math. That may be paywalled, though. Another probably-paywalled paper is an excellent analysis of a fixed-point library for 8-bit AVR parts.

Michelle Corradin wrote:

Ian likes SystemC:

|

||||

| Freebies and Discounts | ||||

This month we're giving away an Owon oscilloscope. See a review later in this issue.

The contest closes at the end of October, 2018. Enter via this link. |

||||

| On Test | ||||

This week's quote ("Testing shows the presence, not the absence of bugs") should shape our understanding of writing error-free code. Testing is hugely important. But it simply isn't enough. If you've been to my seminar you know I'm passionate about this. You can't test quality into the code, or into any other sort of product.

Lots of research shows that most test regimes exercise only half the code. Some teams use code coverage, which proves that every line, and in some cases even every possible condition/decision, is tested. Coverage will greatly improve the quality of a product, but generally at considerable cost. Do realize, though, that coverage by itself will not ensure the product is working correctly. A tested line of code is not necessarily a correct line of code.

I ran an experiment with LDRA's Unit, a product that automatically generates unit tests. Fed a 10 KLOC program, Unit generated 50 KLOC of unit tests. And that's just unit tests; system and integration testing would be extra. How many of us write 5 lines of test code for every line of shippable firmware? The truth is it's extraordinarily difficult to create a comprehensive test suite.

I like to think about software development as a continuous process where debugging, or better, de-erroring, takes place during coding and even design; where we're using as many "filters" as possible to ensure defects don't get delivered.

Capers Jones' and Olivier Bonsignour's book The Economics of Software Quality lists 65 software defect prevention methods. 65. Not all are useful for embedded firmware, and some conflict with others. But the sheer quantity of methods is both mind-boggling and hopeful; hopeful, in that we do have a body of knowledge of ways to drastically reduce errors. The authors list the efficacy of each. Number one: reuse using certified sources, which is 85% effective in limiting defects. Number two: formal inspections, which eliminate 60% of all defects before testing even starts.

Where do these numbers come from? Not from those academic studies where a half-dozen sophomores participate in some experiment which generates a few hundred lines of code. They come from Jones' database of tens of thousands of real projects.

I think that a minimal set of filters for use in firmware development would include:

|
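To illustrate the point that a tested line of code is not necessarily a correct one, here is a minimal sketch in Python (chosen only for brevity; the idea is language-independent). Everything in it is hypothetical: the `is_overtemp` function, the 85 °C limit, and the assumed requirement that the system trip at or above that limit. The two tests execute every line and both outcomes of the branch, and both pass, yet the boundary case is wrong.

```python
OVERTEMP_LIMIT_C = 85  # hypothetical requirement: trip at or above this value


def is_overtemp(temp_c):
    """Return True when the (assumed) requirement says the heater must shut off."""
    # Deliberate bug: the assumed requirement says "at or above", so the
    # comparison should be >=. Full coverage does not reveal this.
    if temp_c > OVERTEMP_LIMIT_C:
        return True
    return False


def run_covering_tests():
    """Two tests that execute every line and both branch outcomes, and pass."""
    assert is_overtemp(100) is True
    assert is_overtemp(20) is False
    return "all tests passed"


if __name__ == "__main__":
    print(run_covering_tests())  # the suite reports no failures
    print(is_overtemp(85))       # the boundary case the suite never tried
```

Run as a script, this reports that all tests passed and then prints False for the 85 degree case, which the assumed requirement says should trip. A coverage tool would score this suite at 100%; an inspection or a boundary-value test is the kind of additional filter that would catch the error.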

||||

Many readers responded to the article in the last Muse about developing embedded systems in the 70s. Luca Matteini wrote:

|

||||

| Review: Owon VDS1022I Oscilloscope | ||||

A while back I purchased an Owon VDS1022I USB oscilloscope for review. It has been cluttering up the bench for too long but I finally found some time to take it for a spin. It's this week's giveaway. The specs are modest:

Other models are available with better features, up to $425 for four channels and 100 MHz bandwidth. I don't know much about Owon, but their website lists some interesting instruments, including these USB scopes as well as bench models.

The unit comes nicely packaged in protective foam. Its case is metal with rubber bumpers protecting each end. It weighs next to nothing but seems reasonably solid. It comes with two probes, rated 60 MHz, with a switch to select X1 or X10 attenuation. They're cheaply made, hardly surprising for this $106 (from Amazon) scope. I'll have more to say on this class of probes in the next issue of the Muse. Yet I found the probes behaved better than expected.

Documentation is minimal. There's an 18-page quick-start guide that includes no information whatever about using the product. There's some safety info and tips about compensating the probes. That's about it. The supplied CD has a PDF of the same doc, and no other useful guides. But it does have an 11-page manual about installing the USB driver, a tedious process that requires three reboots of the PC. Those instructions will lead a non-expert astray, at least for the version of Windows 10 I'm using, but anyone with lots of Microsoft experience will be able to successfully load the driver.

Installation of the application itself isn't covered but is trivial. There's one catch: the name of the app isn't "Owon" or "scope" or anything meaningful. I had to navigate to the "program files\owon" directory and hunt around for "launcher.exe", which is the program.

Turns out, there's no need for a user manual. I have never used scope software that is so intuitive. I have to credit the designers for an extremely clean, simple layout. Unlike some scopes, the app doesn't want to take over your entire screen; it can be parked in a corner, leaving plenty of room for other virtual instruments' applications.

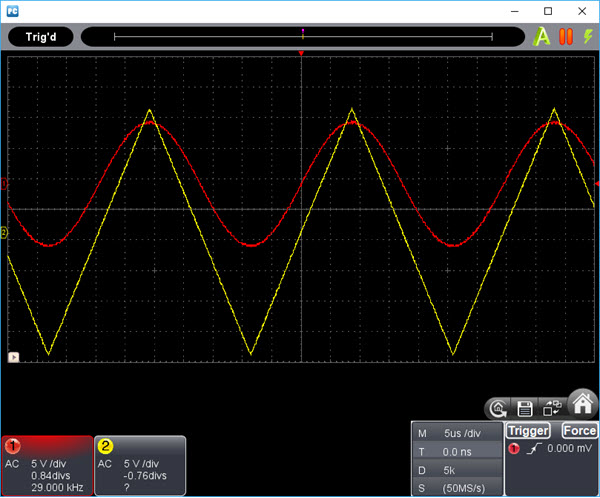

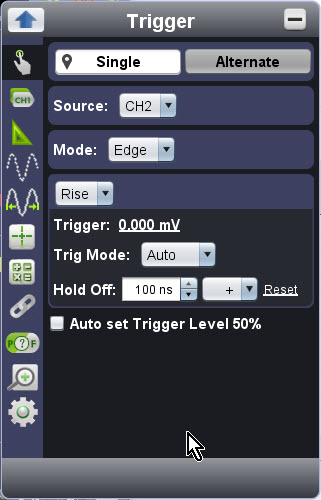

To set vertical gain, click the V/div text in the channel 1 or channel 2 box. A slider opens and allows selection using the usual 1-2-5 sequence. Under that selection, on channel 1, note the "0.84divs" - that sets the vertical offset from the center. Clicking it brings up a scroll bar, but the feature is all but worthless, as there's considerable delay between scrolling and the trace moving to its new spot. However, an alternative is to click on the "1" or "2" to the left of each trace and drag those, which incurs no delay. The last item in those vertical boxes is the frequency of the signal, which is extremely accurate. That's measured for the triggering channel only.

In the vertical box towards the right side of the screen, selection of the time base is exactly like setting the vertical gain, with options ranging from 5 ns/div to 100 s/div. The "T" is the trigger offset from center, which calls up a scroll bar. That suffers from the same ills as the vertical position control, but grabbing the red arrow at the top of the screen and dragging that is quite responsive. "D" is the buffer length, and "S" is the sampling rate. These can only be controlled indirectly by setting the time base.

Click on the "Trigger" button and a whole new world pops up:

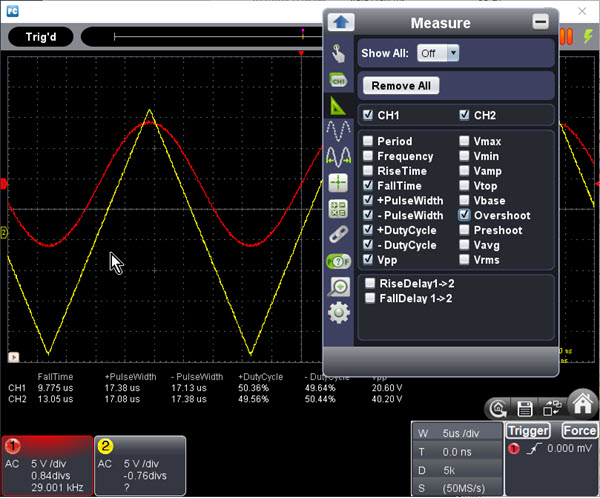

The vertical bar icons select pretty much all operating modes. For instance, click on the sine wave with arrows and a cursor menu appears. The button below that sets display parameters, like persistence, XY mode (for fun with Lissajous figures), vector/dot mode, and brightness.

FFTs are faster than on any other scope I've used, and seem accurate - the sine peak was just where it was supposed to be. Plenty of measurements are available, and they are updated instantly:

Note that under the waveforms the selected measurements are shown for each channel. Updates are fast. Really fast. Check out this video, which shows a sine wave on channel 1 triggering the scope. Channel 2 is measuring a signal asynchronous to channel 1, so it just blows all over the screen:

Communication was very reliable; left running all night, it never experienced a comm glitch.

Interestingly, the unit supports mask testing, something I hadn't seen in a low-cost USB scope before. I wrote about this in Muse 358.

The scope's -3 dB point was at 33 MHz, better than the advertised 25 MHz, though above 25 MHz the sine wave starts bouncing around a little.

Despite the painful installation, I'm impressed by the scope. The $106 version, at 25 MHz, won't get a pro all fired up, but the feature set, and especially the really nice application, outshine the other USB scopes I've used. For work on MCUs at low frequencies I'd snap up one of these in a heartbeat. |
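For readers who want to put numbers on a bandwidth figure like the one in this review: a -3 dB point is the frequency at which the displayed amplitude of a constant-amplitude sine falls to about 70.8% of its low-frequency value. The Python sketch below only illustrates that arithmetic and is not necessarily how the measurement in this review was made; the function names and the sweep format are assumptions.

```python
import math


def gain_db(amplitude, reference):
    """Gain in dB of a measured amplitude relative to a reference amplitude."""
    return 20.0 * math.log10(amplitude / reference)


def amplitude_ratio(db):
    """Amplitude ratio that corresponds to a gain expressed in dB."""
    return 10.0 ** (db / 20.0)


def first_freq_below(sweep, threshold_db=-3.0):
    """Return the first frequency whose gain is at or below threshold_db.

    sweep is a list of (frequency_hz, amplitude) pairs in ascending frequency
    order; the first entry is taken as the low-frequency reference.
    Returns None if the response never drops that far.
    """
    reference = sweep[0][1]
    for frequency_hz, amplitude in sweep:
        if gain_db(amplitude, reference) <= threshold_db:
            return frequency_hz
    return None


if __name__ == "__main__":
    # Fraction of the low-frequency amplitude that remains at the -3 dB point.
    print(round(amplitude_ratio(-3.0), 3))
```

In practice one would step a signal generator up in frequency at a fixed output level, record the amplitude the scope reports at each step, and pass those pairs to `first_freq_below`. The result is only as fine as the frequency steps in the sweep.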

||||

| This Week's Cool Product | ||||

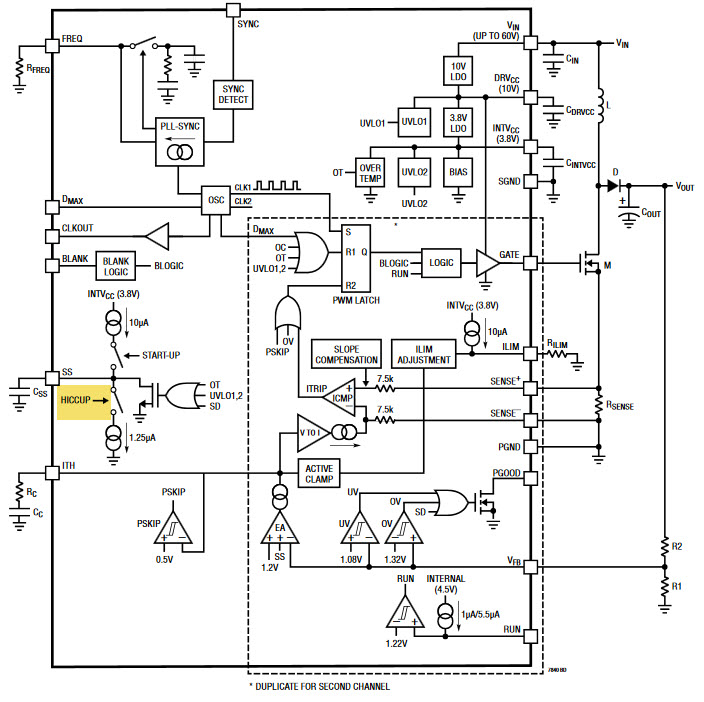

I wasn't sure if this should be in the Joke For the Week section or not, but it's a real thing. Analog Devices' LTC7840 regulator has a problem with hiccups. Well, maybe not a problem, but there's a hiccup mode, which apparently protects the device from over-current conditions.

I sure hope no one comes out with a belch-mode device!

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad.

|

||||

| Joke For The Week | ||||

Note: These jokes are archived here.

How to debug a "C" program:

1) If at all possible, don't; let someone else do it.
2) Change majors.
3) Insert/remove blank lines at random spots, re-compile, and execute.
4) Throw holy water on the terminal.
5) Dial 911 and scream.
6) There is a rumor that "printf" is useful, but this is probably unfounded.
7) Port everything to CP/M.
8) If it still doesn't work, re-write it in assembler. This won't fix the bug, but it will make sure no one else finds it and makes you look bad. |

||||

| Advertise With Us | ||||

Advertise in The Embedded Muse! Over 28,000 embedded developers get this twice-monthly publication. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |