|

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. |

| Contents |

|

| Editor's Notes |

Better Firmware Faster classes in Denmark and Minneapolis

I'm holding public versions of this seminar in two cities in Denmark: Roskilde (near Copenhagen) on October 24 and Aarhus on October 26. More details here. The last time I did public versions of this class in Denmark (in 2008), the rooms were full, so sign up early!

I'm also holding a public version of the class in partnership with Microchip Technology in Minneapolis on October 17. More info is here.

I'm now on Twitter.

|

| Quotes and Thoughts |

Mr. Spratt quoted an old Spanish saying, "Lo barato sale caro": That which is cheap comes out expensive. |

| Tools and Tips |

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. |

| Freebies and Discounts |

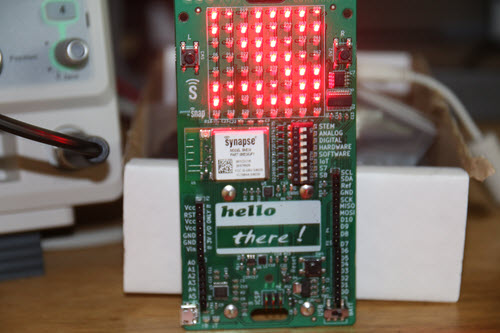

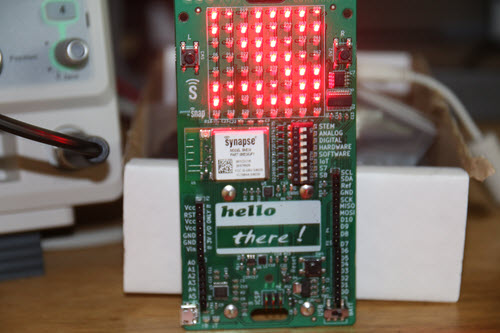

This month's giveaway is an electronic badge from the recent Boston Embedded Systems Conference. Hundreds of these were distributed. They advertise the wearer's interests (set via a DIP switch) and can form mesh networks with badges worn by other attendees. It's a clever bit of engineering and a lot of fun.

Enter via this link. |

| More on Software Malpractice |

There was quite a bit of feedback on the article in the last Muse about engineering malpractice. Whether you hate the legal system or not, I do think the courts are going to be increasingly involved in product liability. We should be proactive and improve our practices now.

Brian O'Connell wrote:

|

Note this article for your discussion on engineering 'malpractice':

www.asee.org/documents/sections/midwest/2006/Engineering_Malpractice.pdf

This is similar to what my employer's legal counsel says: the basis of a civil tort is negligence + lost opportunity + damages. Any two of the three mean trouble in court, and all three is where your insurance company demands that you seek a pre-trial settlement. Recent changes to product safety assessment regulations also add to product complexity and increase corporate risk. For example, the EU's new Low Voltage Directive requires a formal risk assessment in which conditions of 'foreseeable misuse' must be part of your analysis and design intent. So what is reasonable for an engineering action takes a back seat to what is "reasonable behavior" for any end-use operator. I have seen more than one tort action where the root cause was not equipment failure: a 'trained' operator ignored both electrical and written warnings, forced the equipment to continue operating, and bad things happened.

Operator misconduct, at least in my industry segment, has not been identified as a common root cause of equipment failure. But this will change. The current evolution of product administrative law and product safety standards is pushing toward treating any bad outcome that results from willful human misconduct as an equipment failure. Legal risk is a significant driver of investment in process automation and/or robotics: eliminating the human decision-maker always reduces risk. All hail to the AI overlords...

|

I do recommend reading the article he linked to.

Gary Lynch sent this:

|

Your treatise on software malpractice touched a nerve. I have been designing the stuff for over 30 years and I see a trend away from my definition of quality.

A few years ago I switched to a new microprocessor and a new IDE. Contributors to the IDE user forum (which replaced the technical support department) advised me to always download and install the latest updates. When I wrote in that I couldn't view the special function registers on the debugger, they asked for my version number and said that my version was so old it didn't support viewing the processor's registers. When I replied I had checked the box to notify me of new releases, they said my version was so old that the auto-notification feature didn't work either!

My office mate was a contractor who trolled for new business on his smart phone, which locked up every 2 or 3 calls. I asked if he found that excessive; he didn't. One day it dropped a call from an unknown caller that was probably a job offer. Even then, he refused to consider chucking it for a better phone!

We hired a new programmer and he asked me what I used for a bug tracker. I said I had a requirements management system and it could handle the bugs, too. I later learned he expected to deal with dozens to hundreds of bug reports at the same time. In my world view, if I have 2 simultaneous live bugs, it's a bad day.

But with the passage of time, I have been finding fewer and fewer people who understand me when I try to explain this standard. They seem to be trained to believe you have to ship product with known defects and deal with them only when somebody complains. I fear when you and I are gone, no one will remember that it used to be different.

|

Tyler Herring asks some interesting questions:

|

Just an interesting side thought on your piece about software malpractice... If it ever came to be, in a legal sense, I do sort of wonder what kind of change it would drive in the industry at large.

1) Increase in indemnity clauses in contracts or product documents (manuals, terms of use, etc.)?

2) Contractors or software houses getting insurance for malpractice?

3) Increase in code audits/code reviews? Having to document these for legal purposes?

4) Relaxation of project timelines to allow for audits and software cleanup?

5) Does the individual developer assume the liability? His or her boss? The company at large?

6) Does this drive adoption of newer methodologies seen to be "newest best practices"?

7) What effect might any of the above have on the total number of bugs in the first shipped version?

8) What would the first inevitable, really, really big court case to establish case law on this be?

9) What if the end software package has to be signed off on by a Licensed Professional Engineer?

10) How would this affect the "small start-ups" in the software industry?

It does make one wonder.

|

|

| More on Goesintos |

In the last Muse I ran an email from a reader about some cars that lock up their infotainment/navigation system when bad data arrives from the mother ship. I recommended, as so many have for so many years, checking values passed to functions to ensure they make sense. That used to be called checking your goesintos and goesoutas.
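Here's a minimal sketch of goesinto checks in C, loosely modeled on the infotainment case: a hypothetical route-update message from a server is validated completely before it touches the live route. The message layout, the names (`route_update`, `waypoint_t`, `MAX_WAYPOINTS`) and the limits are all assumptions for illustration; the point is the shape, not the details.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical route-update message: the layout, names, and limits are
   assumptions for illustration, not any vehicle's actual format. */
#define MAX_WAYPOINTS 64u

typedef struct {
    int32_t lat_e7;   /* latitude in units of 1e-7 degree  */
    int32_t lon_e7;   /* longitude in units of 1e-7 degree */
} waypoint_t;

typedef struct {
    waypoint_t points[MAX_WAYPOINTS];
    size_t     count;
} route_t;

typedef enum { ROUTE_OK = 0, ROUTE_REJECTED } route_status_t;

/* A coordinate that isn't on the globe can't be real data. */
static int waypoint_ok(const waypoint_t *w)
{
    return w->lat_e7 >= -900000000 && w->lat_e7 <= 900000000
        && w->lon_e7 >= -1800000000 && w->lon_e7 <= 1800000000;
}

/* Goesinto checks on everything that came from outside the box. Nothing
   touches the live route until the whole message has passed, so a bad
   message leaves the old route in place instead of locking things up. */
static route_status_t route_update(route_t *live, const waypoint_t *pts, size_t count)
{
    /* NULL pointers are bugs in our own code: loud in development
       builds (assert compiles away when NDEBUG is defined)... */
    assert(live != NULL && pts != NULL);
    if (live == NULL || pts == NULL) {
        return ROUTE_REJECTED;   /* ...and still refused in production. */
    }
    if (count == 0 || count > MAX_WAYPOINTS) {
        return ROUTE_REJECTED;
    }
    for (size_t i = 0; i < count; i++) {
        if (!waypoint_ok(&pts[i])) {
            return ROUTE_REJECTED;
        }
    }
    memcpy(live->points, pts, count * sizeof pts[0]);
    live->count = count;
    return ROUTE_OK;
}
```

Note the two kinds of checks. A NULL pointer means our own code is broken, so it trips an assert during development; bad data from outside is expected now and then, so it is handled at run time in every build.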

Charles Manning wrote:

|

Last week I took my cat to the vet for some surgery. They asked if I wanted them to perform various tests before doing the surgery.

Me: "Will it change what you do?"

Them: "No" (apart from jack up the bill and give me a pretty printout with stats).

Me: "Then the tests are pointless. No tests."

In some cases I feel that way with gazintas and gazoutas. Like Ada exceptions et al, they're great during development and testing, but what do you do with them in a real live system?

Erlang has a "let it crash" policy. That might be acceptable in some conditions, but is overkill in others. Do you set the whole car on fire because you find a little scratch? Do you send the pilot to his death knowing that exception 17 was triggered on variable xx1130?

One of the most dramatic examples of checking being too draconian is the Ariane 5 rocket destruction. Ada threw an exception when a value went out of bounds. That variable was not even required at the time. It could have been a randomly generated value and it would not have mattered. But Ada cared so much about one little variable that it blew up the rocket. That would never have happened with C (sure, other problems might have).

I've seen systems fail because ancillary logging features failed. A temperature sensor came loose and gave wild temperature readings. Instead of just ignoring them, the backlight driver fired an assert and the system crashed.

The other chestnut is a failed write to a logging system, so the whole system is killed by an assert. That's often a terminal problem, since a reset typically doesn't fix the file system.

I've been reading some of Nancy Leveson's "Safeware" again (I read it about once a year). She relates a few aircraft crashes where the plane's software was deliberately crashed (assert/exception/whatever) because its writers never expected the situation to arise. The planes would have recovered except that the gazinta/gazouta checks crashed them.

So whatever you do with gazintas and gazoutas, make sure you back them with a policy that fits the conditions you're using them in! |
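Charles' Ariane example centers on converting a 64-bit floating-point value to a 16-bit signed integer. The flight software was Ada, and this is not a reconstruction of it; it's a C sketch of one alternative policy: clamp the value and flag it rather than trap. `f64_to_i16_saturating` is a hypothetical name. It's also worth knowing that in C a plain cast of an out-of-range double to an integer type is undefined behavior, so C offers no guarantee of a benign result unless the check is written explicitly, as here.

```c
#include <assert.h>
#include <math.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Convert a double to int16_t without trapping. NaN becomes 0, values
   beyond either limit are clamped to that limit, and *clamped tells the
   caller the input was not representable so it can decide what that means. */
static int16_t f64_to_i16_saturating(double v, bool *clamped)
{
    assert(clamped != NULL);   /* caller contract; compiled out with NDEBUG */

    if (isnan(v)) {
        *clamped = true;
        return 0;
    }
    if (v > (double)INT16_MAX) {
        *clamped = true;
        return INT16_MAX;
    }
    if (v < (double)INT16_MIN) {
        *clamped = true;
        return INT16_MIN;
    }
    *clamped = false;
    return (int16_t)v;   /* in range: truncates toward zero, like any C cast */
}
```

Whether clamping is the right response depends entirely on what the value feeds, which is exactly Charles' point about policy.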
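For the logging chestnut, here is a sketch of a logger that treats a failed write as a non-event for the rest of the system. `log_msg`, `logger_t`, the failure limit, and the stub sink are hypothetical; a real sink would wrap your flash or file-system driver.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical log sink: returns true if the message was stored. */
typedef bool (*log_write_fn)(const char *msg, size_t len);

#define LOG_MAX_FAILURES 8u   /* assumed limit before giving up */

typedef struct {
    log_write_fn write;
    uint32_t     failures;   /* failed writes, readable for diagnostics */
    bool         disabled;   /* set once failures reach the limit       */
} logger_t;

/* Logging is ancillary: a failed write is counted, and after repeated
   failures logging shuts itself off. Nothing here can halt the system. */
static void log_msg(logger_t *lg, const char *msg, size_t len)
{
    assert(lg != NULL && lg->write != NULL);   /* development-time contract */

    if (lg->disabled) {
        return;
    }
    if (!lg->write(msg, len)) {
        lg->failures++;
        if (lg->failures >= LOG_MAX_FAILURES) {
            lg->disabled = true;
        }
    }
}

/* Stand-in sink that simulates a full or corrupted file system. */
static bool full_disk_write(const char *msg, size_t len)
{
    (void)msg;
    (void)len;
    return false;
}
```

Dropping logs silently is itself a policy choice; some products must record that logging has stopped, which is why `failures` is kept where diagnostics can read it.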
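One way to make the policy explicit is to have every check state, where it's written, what should happen when it fails, rather than letting every failure default to a halt. This is a minimal sketch under assumed names (`CHECK`, `fail_policy_t`, `action_requested`); it records the most severe action requested and leaves the main loop to carry it out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical check facility: each check names its failure policy. */
typedef enum {
    ON_FAIL_IGNORE = 0,   /* ancillary feature: note it and carry on */
    ON_FAIL_DEGRADE,      /* drop into a safe, reduced mode          */
    ON_FAIL_RESET         /* restart the subsystem or the device     */
} fail_policy_t;

static unsigned      check_failures;     /* total failed checks            */
static fail_policy_t action_requested;   /* most severe action requested   */
static const char   *last_failed;        /* text of the last failed check  */
static int           last_failed_line;   /* source line of that check      */

static void check_failed(const char *expr, int line, fail_policy_t policy)
{
    assert(policy <= ON_FAIL_RESET);   /* a bad policy value is our own bug */

    check_failures++;
    last_failed = expr;
    last_failed_line = line;
    if (policy > action_requested) {
        action_requested = policy;     /* the main loop acts on this later */
    }
}

/* Evaluates cond once; on failure records it along with its policy. */
#define CHECK(cond, policy) \
    ((cond) ? (void)0 : check_failed(#cond, __LINE__, (policy)))
```

A main loop would examine `action_requested` once per pass and, for example, enter a degraded mode or reset the subsystem. The three policies shown are examples, not a complete set.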

Charles' comments are important. However, there are plenty of times we can take some reasonable action, such as defaulting to a safe mode. In the case of the Toyota referenced by the original submitter, even a reboot would make more sense than a complete lockup that requires dealer intervention. On spacecraft they’ll often default to a “safe” mode, where the code orients the solar panels toward the sun to get as much power as possible, and the system goes into a degraded mode that does little more than await instructions from the ground.
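The safe-mode idea can be sketched as a tiny state machine. The modes, event names, and `mode_update` are assumptions, and what the system actually does while in safe mode (pointing the panels at the sun, waiting for the ground) isn't shown. The key property is that any fault drops the system into safe mode, and only an explicit command gets it out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical mode manager in the spirit of a spacecraft safe mode. */
typedef enum { MODE_NORMAL, MODE_SAFE } sys_mode_t;
typedef enum { EVT_NONE, EVT_FAULT, EVT_RESUME_CMD } sys_event_t;

typedef struct {
    sys_mode_t mode;
    unsigned   faults;   /* faults seen, kept for the ground to inspect */
} mode_ctl_t;

/* Any fault drops the system into SAFE mode. SAFE mode is sticky: only
   an explicit resume command brings the system back, so a recurring
   fault can't cycle it in and out on its own. */
static void mode_update(mode_ctl_t *mc, sys_event_t evt)
{
    assert(mc != NULL);   /* development-time contract */

    if (evt == EVT_FAULT) {
        mc->faults++;
        mc->mode = MODE_SAFE;
        return;
    }
    switch (mc->mode) {
    case MODE_NORMAL:
        break;
    case MODE_SAFE:
        if (evt == EVT_RESUME_CMD) {
            mc->mode = MODE_NORMAL;
        }
        break;
    default:
        mc->mode = MODE_SAFE;   /* corrupted mode value: fail toward safety */
        break;
    }
}
```

For the car in the original email, the recovery action might instead be a reboot, which still beats a lockup that needs the dealer.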

Error handling is an incredibly difficult problem.

Steve Leibson noted that there was a gazinta button on an HP calculator!

|

I just skimmed today's Embedded Muse and got a blast from the past: gazintas.

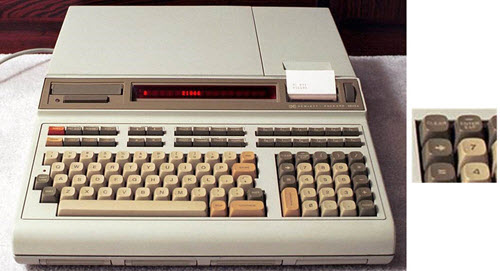

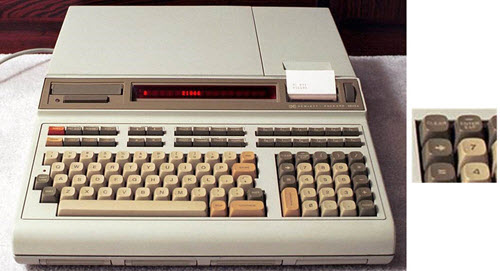

This doesn't relate to the topic in the Muse, but HPL, the language I contributed to that ran on the HP9825, used the "gazinta" (→) as an assignment operator instead of the equals sign. That removed the ambiguity between the assignment function and the comparison function. There was a gazinta key on the keyboard. On the original Chiclet keyboard, it was usually one of the two keys that wore out first.

Here's a photo of the "new" keyboard based on real Cherry mechanical switches. The gazinta key is to the left of the "7" key on the number pad. (The inset to the right shows the key more clearly):

|

|

| The New York City Crash of 2017 |

A New York Magazine article by Reeves Wiedeman details the events that took place when hackers crashed NY City December 4, 2017. It's a worthwhile read that does a good job describing the event and its causes. (And, yes, tenses are mixed up in that sentence.)

For about the last decade, security has been The Next Great Thing. Mostly it's talked about in the context of PCs. But for every PC sold, hundreds of embedded devices hit the market, and many have connectivity. That Bluetooth-enabled toothbrush could be a network attack vector. One of my oscilloscopes boots Windows 7. It is never connected to a network, but it could be, since it has an Ethernet port. It also has never gotten a Windows update, and is probably hundreds of Microsoft patches behind.

Do you make a point of updating the OS in your test equipment? Do you even know what OS is in that gear? Windows, Linux, or maybe some home-brew RTOS?

Most vendors of embedded products release few if any security updates no matter what OS is used. Few customers would be thrilled if they needed to update dozens or hundreds of devices every Patch Tuesday (or whatever schedule an OS vendor uses).

I'm not a fan of the phrase "Internet of Things," because it's used as if IoT is a new, new, exciting technology. The truth is we've been building a network of things for twenty years. Now marketing has caught on and coined a new TLA for this old idea. But it is true that a lot of enabling technology has come along that makes it cheaper and easier to connect even simple devices to a network.

Most engineers I talk to tell me they can't get a budget for building decent security into their products. The "ship it, ship it!" mantra overrides adding anything that does not offer an obvious benefit to the customer. End users either know little about security or assume that the engineers wisely built it in. Ironically, it has zero value until it's needed.

I doubt this will change any time soon. Probably it will take a disaster, like the crash of 2017, for us to get serious about security. And then you can be sure there will be a Huge Federal Program instituted to deal with it. |

| Jobs! |

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad. |

| Joke For The Week |

Note: These jokes are archived at www.ganssle.com/jokes.htm.

From Jon Woellhaf:

Three engineers are driving to a meeting when their car starts running poorly and comes to a stop. "I'm sure it's the fuel pump," says the mechanical engineer. "No," says the electrical engineer, "It's undoubtedly the ignition system." "I don't know what the problem is," says the software engineer, "but let's try getting out and getting back in." |

| Advertise With Us |

Advertise in The Embedded Muse! Over 27,000 embedded developers get this twice-monthly publication. |

| About The Embedded Muse |

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com.

The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.

|
