
You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe, go here or drop Jack an email.

| Editor's Notes | ||||

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

| Quotes and Thoughts | ||||

The three root causes of schedule slippage (Capers Jones, Crosstalk, June 2002):

| Tools and Tips | ||||

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Grant Beattie sent a link to a paper about using an SPI port as a UART transmitter.
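The paper's specific method isn't summarized here, but the general trick is easy to sketch. A UART frame is just a start bit (0), eight data bits sent LSB first, and a stop bit (1). If the SPI clock runs at the baud rate and shifts MSB first, the UART bit pattern can be pre-built in a buffer and clocked out of MOSI, with SCK and chip select simply left unconnected. This sketch assumes 8N1 framing, an SPI peripheral configured MSB-first at the baud rate, and a MOSI line that idles high (the UART mark level) between transfers; `uart_to_spi()` is a hypothetical helper, not code from the paper.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Write one bit into an MSB-first bit stream, then advance the position. */
static void put_bit(uint8_t *out, size_t *bitpos, unsigned bit)
{
    size_t byte = *bitpos / 8u;
    unsigned shift = 7u - (unsigned)(*bitpos % 8u);

    if (bit)
        out[byte] |= (uint8_t)(1u << shift);
    else
        out[byte] &= (uint8_t)~(1u << shift);
    (*bitpos)++;
}

/*
 * Pack n bytes as 8N1 UART frames into an SPI transmit buffer.
 * Assumes the SPI shifts MSB first with SCK equal to the baud rate.
 * Returns the number of SPI bytes to send (0 if out_len is too small).
 */
size_t uart_to_spi(const uint8_t *data, size_t n, uint8_t *out, size_t out_len)
{
    size_t bytes = (n * 10u + 7u) / 8u;   /* 10 bits per frame, rounded up */
    size_t pos = 0;

    if (bytes > out_len)
        return 0;
    memset(out, 0xFF, bytes);             /* idle (mark) level fills any leftover bits */

    for (size_t i = 0; i < n; i++) {
        put_bit(out, &pos, 0u);                       /* start bit */
        for (unsigned b = 0; b < 8u; b++)
            put_bit(out, &pos, (data[i] >> b) & 1u);  /* data bits, LSB first */
        put_bit(out, &pos, 1u);                       /* stop bit */
    }
    return bytes;
}
```

For example, the single character 'U' (0x55) packs into the two SPI bytes 0x55 and 0x7F; the last six bits of the second byte are idle-level padding.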

| Freebies and Discounts | ||||

The folks at ORBcode are generously giving away one of their debuggers for Cortex-M devices. I have not tried it, but it looks quite interesting. Enter via this link.

| The Cost of Producing High-Quality Software | ||||

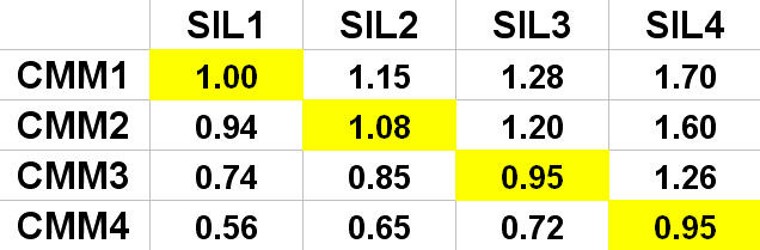

Everyone knows that high-quality code is super-expensive to produce. The space shuttle's code may be the costliest ever, at something like $1,000 per line. But that includes a staggering amount of test and verification.

A 2000 paper by O. Benediktsson challenges the notion that great code is always costly. In "Safety critical software and development productivity," the author shows that to achieve high Safety Integrity Levels (SIL)¹, all things being constant, you're going to spend big bucks. But what if all things are not constant? The cited paper includes this chart (I've modified it a bit to make it more readable):

CMM means "Capability Maturity Model" and is one way of evaluating the maturity of a software organization. CMM1 means chaos and no defined software process; at CMM4 developers are disciplined and carefully engineer their products. The numbers in the chart show relative costs. In this case the CMM is used just as a model of process; it is not canonical, and there are many ways to refine development processes.

The chart shows that one can get great code (SIL4) for the same price as unsafe software, as long as one pursues better process maturity.

One of my profoundest beliefs is that we should be constantly learning, always looking for better ways to do our jobs. Stasis is death.

¹The notion of SILs is common in the safety world. This paper uses the SILs as defined in the IEC 61508 standard, where SIL1 is the usual crap, and SIL4 is highly reliable code.

| Bfloat16 | ||||

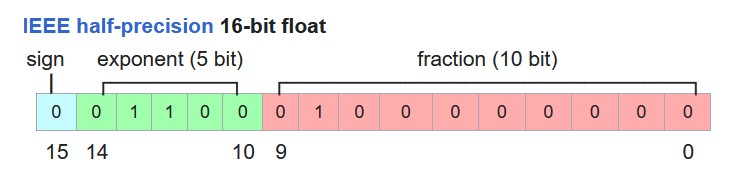

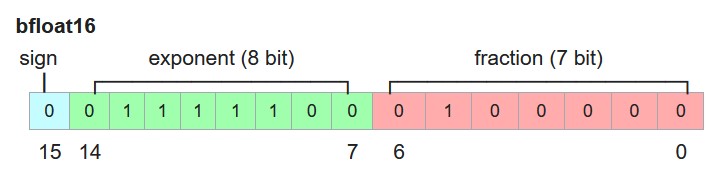

SambaNova Systems has a new AI chip with 102 billion transistors. Some of us remember when a big part had a few hundred active elements. The part, named the SN40L, has 1040 cores and can zip along at 638 teraflops (though those flops seem to be counted using bfloat16 and a proprietary 8-bit format). The company also has some method for dealing with sparse matrices, which are common in LLMs and in which many or most of the coefficients are zero, though they are being a bit cagey about the details. The chip is not for sale, though, as SambaNova will be building their own servers to host customer applications. Pretty cool.

But, as Arlo Guthrie said in Alice's Restaurant, "that's not what I came to tell you about."

There has been a lot of chatter lately about the bfloat16 format SambaNova uses. The name stands, strangely, for "Brain Float," and the format comes from Google. It's a 16-bit floating point format. But don't we already have that? The IEEE standard does, after all, support a half-precision mode that fits into two bytes: 1 sign bit, 5 exponent bits, and 10 mantissa bits.

Google's bfloat16 is similar, but gives us a bigger exponent: 1 sign bit, 8 exponent bits (the same as a 32-bit IEEE float), and 7 mantissa bits.

So bfloat16 can represent numbers between about $10^{-38}$ and $3\times10^{38}$, a far greater range than the ±65504 of IEEE half-precision. Of course, the latter, with more mantissa bits, gives more resolution.

Since bfloat16 uses an offset exponent, the value of a (normal) number represented in this format is:

$$(-1)^{\text{sign}} \times 2^{\text{exponent}-127} \times 1.\text{mantissa}$$

Nvidia also has a unique floating point format called TensorFloat, but it is of less interest to most of us, as it uses 19 bits. That might be nice on their silicon, but it doesn't pack well into the memory words most of us use.
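Because bfloat16 keeps the same sign bit and 8-bit exponent as an IEEE 32-bit float, a bfloat16 value is essentially the upper half of a float, which makes conversion cheap even on a small MCU. The sketch below is a minimal illustration, not Google's or SambaNova's code: it widens bfloat16 to float with a shift, narrows float to bfloat16 with round-to-nearest-even (plain truncation is the cheaper alternative), and decodes normal numbers with the formula above so the two can be compared. The `bf16_t` type and the function names are hypothetical, and the code assumes IEEE-754 binary32 `float`s.

```c
#include <stdint.h>
#include <string.h>

typedef uint16_t bf16_t;  /* raw bits: 1 sign, 8 exponent, 7 mantissa */

/* bfloat16 -> float: exact, since bfloat16 is the top half of a float32. */
float bf16_to_float(bf16_t b)
{
    uint32_t u = (uint32_t)b << 16;
    float f;
    memcpy(&f, &u, sizeof f);
    return f;
}

/* float -> bfloat16 with round-to-nearest, ties-to-even. */
bf16_t float_to_bf16(float f)
{
    uint32_t u;
    memcpy(&u, &f, sizeof u);

    if ((u & 0x7F800000u) == 0x7F800000u && (u & 0x007FFFFFu) != 0u)
        return (bf16_t)((u >> 16) | 0x0040u);  /* NaN: force the quiet bit so it stays a NaN */

    u += 0x7FFFu + ((u >> 16) & 1u);  /* just under half an ulp, plus the LSB to break ties to even */
    return (bf16_t)(u >> 16);         /* values past the largest bfloat16 round up to infinity */
}

/*
 * Decode a normal bfloat16 (exponent field 1..254) with
 * (-1)^sign x 2^(exponent-127) x 1.mantissa.
 * Zero, subnormals, infinity and NaN are not handled.
 */
double bf16_decode_normal(bf16_t b)
{
    unsigned sign     = (b >> 15) & 1u;
    int      exponent = (b >> 7) & 0xFF;
    unsigned mantissa = b & 0x7Fu;
    double   value    = 1.0 + mantissa / 128.0;  /* 1.mantissa: 7 fraction bits */

    for (int e = exponent - 127; e > 0; e--) value *= 2.0;  /* exact powers of two */
    for (int e = exponent - 127; e < 0; e++) value *= 0.5;
    return sign ? -value : value;
}
```

As a check against the range quoted above, bf16_decode_normal(0x7F7F), the largest finite bfloat16, evaluates (2 - 2^-7) × 2^127, about 3.39×10^38, while 0x0080, the smallest normal, gives 2^-126, about 1.18×10^-38.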

| On Testing | ||||

The real value of tests is not that they detect bugs in the code but that they detect inadequacies in the methods, concentration, and skills of those who design and produce the code. - C.A.R. Hoare

100% test coverage is insufficient. 35% of the faults are missing logic paths. - Robert Glass, Facts and Fallacies of Software Engineering

Testing by itself does not improve software quality. Test results are an indicator of quality, but in and of themselves, they don't improve it. Trying to improve software quality by increasing the amount of testing is like trying to lose weight by weighing yourself more often. What you eat before you step onto the scale determines how much you will weigh, and the software development techniques you use determine how many errors testing will find. If you want to lose weight, don't buy a new scale; change your diet. If you want to improve your software, don't test more; develop better. - Steve McConnell, Code Complete

Testing is all too often left to the end of the schedule. The project runs late… and what invariably gets cut is testing. - Jack Ganssle

Most teams rely on testing as a quality gate to the exclusion of all else. And that's a darn shame. For one thing, test alone does not work. Studies show that the usual test regime only exercises about half the code. It's tough to check deeply nested ifs, exception handlers, and the like. Alas, all too often unit tests don't even check boundary conditions, perhaps out of fear that the code might break.

Typical embedded projects devote half the schedule to test and debugging. So does that mean the other half is, well, bugging? Shrinking the bugging part will both accelerate the schedule and produce a higher-quality product.

We need to realize that bugs get injected in each phase of development, and that a decent process employs numerous quality gates, each of which finds defects. That starts early with a careful analysis of requirements. It continues with developers thinking deeply about their design and code. Tools like Lint and static analyzers expose other classes of problems before testing commences. Code inspections, when done properly, reveal design errors early and cheaply. Test will surely turn up more problems, but most should have been found long before that time.

And a suitable metrics effort must be used to understand where the defects are coming from, and which processes are effective in eliminating the bugs. This is engineering, not art; engineers use numbers, graphs, and real data gathered empirically to do their work. Sans metrics, we never have any idea how we stack up against industry standards. Sans metrics, we have no idea if we're getting better or worse. Metrics are the cold and non-negotiable truth that reveals the strengths and weaknesses of our development efforts. (The quality movement, which unfortunately seems to have bypassed software engineering, showed us the importance of taking measurements to understand, first, how we're doing, and second, the first derivative of the former. Metrics are a form of feedback that gives us insight and understanding into a process. Until software engineers embrace measurements, quality will be an ad hoc notion achieved sporadically.)

While most of us use testing almost exclusively to manage our software quality, some teams use test simply to show that their code is correct. They expect, and generally find, that at test time the code works correctly. And these teams tend to deliver faster than the rest of us.
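Checking boundary conditions doesn't take much effort. As a hypothetical illustration (the function, the 12-bit ADC, and the test table are invented for this sketch, not drawn from any real project), a small table-driven unit test can probe each edge, one step inside it, and one step outside it:

```c
#include <stdint.h>
#include <stdio.h>

#define ADC_FULL_SCALE 4095u  /* hypothetical 12-bit converter */

/* Convert a raw ADC reading to 0..100 percent, clamping out-of-range input. */
unsigned adc_to_percent(uint16_t raw)
{
    if (raw > ADC_FULL_SCALE)
        raw = ADC_FULL_SCALE;
    return (unsigned)(((uint32_t)raw * 100u) / ADC_FULL_SCALE);
}

/* Boundary cases: the edges, one step inside, and one step outside. */
struct boundary_case { uint16_t raw; unsigned expected; };

static const struct boundary_case boundary_cases[] = {
    { 0u,      0u   },  /* lower edge */
    { 1u,      0u   },  /* just above lower edge: 100/4095 truncates to 0 */
    { 4094u,   99u  },  /* just below full scale */
    { 4095u,   100u },  /* full scale */
    { 4096u,   100u },  /* first out-of-range value: must clamp */
    { 0xFFFFu, 100u },  /* largest possible input */
};

/* Returns the number of failing cases, printing each failure. */
int run_boundary_tests(void)
{
    int failures = 0;
    size_t count = sizeof boundary_cases / sizeof boundary_cases[0];

    for (size_t i = 0; i < count; i++) {
        unsigned got = adc_to_percent(boundary_cases[i].raw);
        if (got != boundary_cases[i].expected) {
            printf("raw=%u: expected %u, got %u\n",
                   (unsigned)boundary_cases[i].raw, boundary_cases[i].expected, got);
            failures++;
        }
    }
    return failures;
}
```

Removing the clamp in adc_to_percent() makes the 0xFFFF case return 1600 instead of 100, exactly the kind of bug a test that uses only mid-range inputs would never see.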

| Failure of the Week | ||||

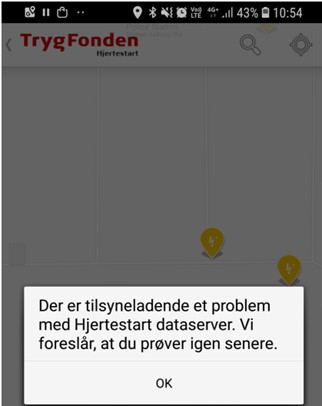

Lars Weje Hangstrup sent this:

The attached screenshot is from a Danish app for locating the nearest public defibrillator. Handy if you see someone having a heart attack. The text reads:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue.

| Jobs! | ||||

Let me know if you're hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.

| Joke For The Week | ||||

These jokes are archived here. Arguing with an Electrical Engineer is like wrestling with a pig in mud: after a while you realize the pig is enjoying it!

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.