
You may redistribute this newsletter for non-commercial purposes. For commercial use, contact jack@ganssle.com. To subscribe or unsubscribe, go here or drop Jack an email.


Editor's Notes


Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line, your email will wend its weighty way to me.

Jon Waisnor poses a question about how we're coping with supply chain issues:


Quotes and Thoughts

Brooks' law: Adding manpower to a late project makes it later. His book The Mythical Man-Month is highly recommended; though dated, it is still full of wisdom.
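One argument the book makes for this law: if every part of a task must be coordinated with every other part, the intercommunication burden for n people grows with the number of pairs. A quick worked illustration (the team sizes of 3 and 6 are arbitrary):

$$C(n) = \frac{n(n-1)}{2}, \qquad C(3) = \frac{3 \cdot 2}{2} = 3, \qquad C(6) = \frac{6 \cdot 5}{2} = 15$$

Doubling a three-person team to six quintuples the number of communication paths, before even counting the time the veterans spend bringing the newcomers up to speed.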


Tools and Tips

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Freebies and Discounts

This month's giveaway is an STM32F411RE, mbed-Enabled Development Nucleo-64 series ARM® Cortex®-M4 MCU 32-Bit Embedded Evaluation Board. As it has a debug port, it's perfect for learning to work with ARM Cortex-M4 MCUs.

Enter via this link.


Department of Redundancy Department

I believe it was the humorist group Firesign Theatre who, in their fun 1970 record album Don't Crush That Dwarf, Hand Me the Pliers, came up with the notion of a Department of Redundancy Department. The DoRD is alive and well in our business. Though too much code has too few comments, it's not uncommon to find developers duplicating comments in code. From some real code:

    /* This section returns TRUE if A==B */
    ret=FALSE;
    if ( A == B) ret=TRUE;  // if A == B return TRUE
    return ret;

The comments are correct and clear. The problem is that if the code changes, the comments have to change in more than one place. Odds are, they won't. At least one of the comments will be wrong, and the poor maintainer will be forced to examine the code to decide which is accurate. A more complex example could induce Excedrin Headache number 19 (i.e., what the heck was this guy thinking?).

In a real-world example I ran across some years ago, the source file comprised about 2200 lines. Of those, 2000 were in a header block that described every aspect of the very complex code's operation. Included was a link to a paper describing the algorithm in detail. One could make an argument that the link alone would suffice, or perhaps an abbreviated outline of the algorithm along with the link. You can be sure that a maintainer would have placed a heavy weight on the page-down key to skip the details and get to the code itself.

Regular readers know I'm a comment zealot. I prefer to write all of a function's comments before entering any C statements. Given clear comments that show the designer's intent, filling in the code is not much work.

Most of our code is a documentation desert. We know that great code will have a comment density (i.e., the ratio of lines with comments to non-blank source lines) exceeding 60% [1], yet that's rarely achieved.
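The comment-density ratio is easy to estimate mechanically. Below is a minimal sketch in C, assuming a naive line scanner: the function name `comment_density`, its array-of-lines interface, and the rule that any line touched by a `//` or `/* */` comment counts as "a line with a comment" are illustrative assumptions, not a standard tool. It also does not recognize comment markers inside string or character literals.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Returns true if the line holds nothing but whitespace. */
static bool is_blank(const char *line)
{
    for (; *line != '\0'; line++) {
        if (*line != ' ' && *line != '\t' && *line != '\r' && *line != '\n') {
            return false;
        }
    }
    return true;
}

/*
 * Hypothetical helper (illustrative assumption): returns the percentage of
 * non-blank lines that contain, or lie inside, a comment. Blank lines are
 * excluded from both numerator and denominator. Naive: markers inside
 * string literals are treated as real comment markers.
 */
double comment_density(const char *const lines[], size_t count)
{
    size_t non_blank = 0;
    size_t commented = 0;
    bool in_block = false;          /* inside an unterminated block comment */

    for (size_t i = 0; i < count; i++) {
        const char *p = lines[i];
        if (is_blank(p)) {
            continue;
        }
        non_blank++;

        bool has_comment = in_block; /* continuation of a block comment */
        while (*p != '\0') {
            if (in_block) {
                const char *end = strstr(p, "*/");
                if (end == NULL) {
                    break;           /* comment runs past this line */
                }
                in_block = false;
                p = end + 2;         /* keep scanning after the close */
            } else if (p[0] == '/' && p[1] == '/') {
                has_comment = true;
                break;               /* rest of the line is a comment */
            } else if (p[0] == '/' && p[1] == '*') {
                has_comment = true;
                in_block = true;
                p += 2;
            } else {
                p++;
            }
        }
        if (has_comment) {
            commented++;
        }
    }
    return non_blank == 0 ? 0.0 : 100.0 * (double)commented / (double)non_blank;
}
```

Fed the four non-blank lines of the A==B example, this sketch reports 50%, since two of the lines carry comments. That score says nothing about whether those comments are any good; in that example both say the same thing.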
But density alone is a poor metric; those comments must be meaningful, concise and useful, providing information beyond the bare ideas embodied in the C statements.

There's an old joke that is funny in the sense that a train wreck is awful yet eyeball-grabbing: Never include a comment that will help someone understand your code. If they can understand it, they don't need you. Consign that meme to the joke file!

[1] Thomas Drake, "Measuring Software Quality: A Case Study," IEEE Computer, Nov. 1996.


How Teams Shape Up

How is your team doing? Are there problems? What could you improve?

Over the decades I've been called in to evaluate many firmware engineering teams around the world. Generally management is unhappy: projects are late and buggy, or engineering is not meeting some corporate goals. Management wants to effect some sort of change, though they're not sure what. They typically don't understand that I can't help unless I have quite a bit of information about the team and its work. Over the years I've developed an outline of questions designed to garner the insight needed to make recommendations for change. I don't do this sort of thing anymore, but you may find these questions useful for gaining insight into your own team's behaviors and processes.

You'll note there's a lot of overlap between the questions asked of team leads and of team members. I have found that, too often, the team lead/VP/manager does not know what is really going on in the trenches. Analyzing this dichotomy gives quite a bit of insight into the efficacy of an engineering department.

For team leads:

For team members:


Failure of the Week

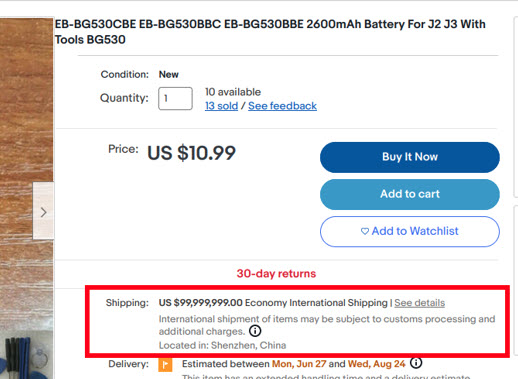

Sergio Caprile found a deal on a battery. "Economy International Shipping" was only $100 million!

From Craig Ogawa:

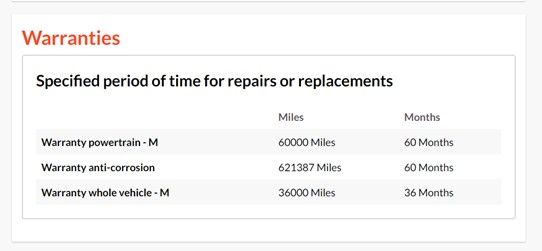

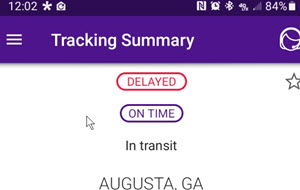

Ian Freislich sent this baffling status update:

Have you submitted a Failure of the Week? I'm getting a ton of these, and yours was added to the queue.


Jobs!

Let me know if you’re hiring embedded engineers. No recruiters, please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.


Joke For The Week

These jokes are archived here.

From Dave Fleck: Caught my son chewing on electrical wires. I got a charge out of this. I hope others were just as positive.


About The Embedded Muse

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.