The story of the microprocessor has been, in no small sense, one of converting hardware to software. For example, before microprocessors it was common to see a bank of cams on a shaft rotated by a slow motor, each cam activating a switch. This arrangement sequenced the operations of a system. The sequence was "programmed" by changing each cam's angle. Today a little PIC processor would do the same for much less money. Madman Muntz would be proud. (He was famous for coming into the lab and snipping parts out of a circuit; if it still worked, the engineers were required to eliminate that component.)

Today software enables applications that would be utterly unimaginable if implemented in hardware. Consider building Microsoft Excel with non-programmable chips. It would be the size of the computer the mice built in Hitch-Hiker's Guide.

One of the designs I'm most proud of was an in-circuit emulator that needed only 17 chips, back when most ICs had little functionality. Competing units had hundreds. In my unit, complex code replaced several PCBs of logic. That's the cool thing about embedded: clever firmware can replace hardware.

But sometimes it's wise to add components.

In the 1980s we bid on a government project to take in very high speed tape data. Turns out I mis-bid it. I spent four weeks finding the fastest possible set of what turned out to be five assembly instructions to keep up with the flood of data. Maintenance was a nightmare.

This was a one-off, so recurring costs were not important.

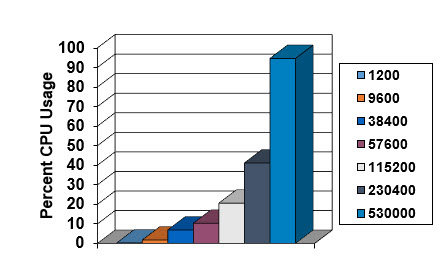

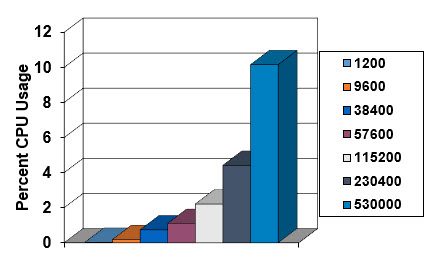

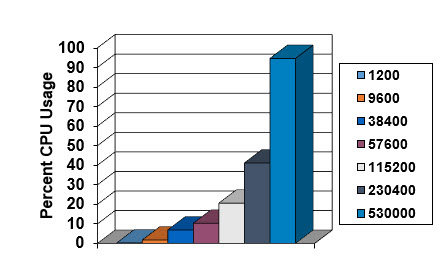

Later we were tasked with handling (what was then) fast serial data. Using an interrupt on each received byte gave this CPU utilization:

That is, the processor was completely consumed by servicing these interrupts at 530k baud.

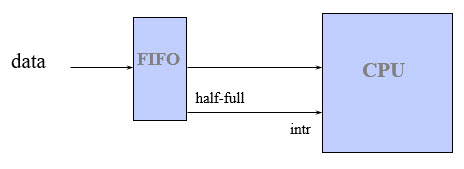

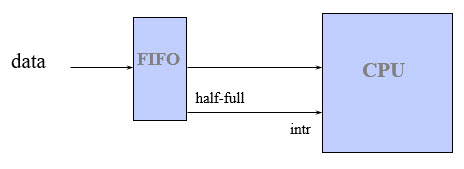

So we tried another solution: adding a 16-byte hardware FIFO:

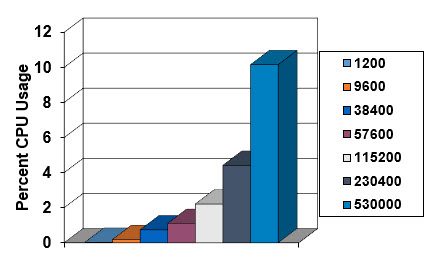

The FIFO was an IDT part with the usual fifo-full and fifo-empty status bits. Neither was useful to us: interrupting on fifo-full could mean losing data that arrived before the device was serviced. However, this part also had a fifo-half-full output, which we used to generate the interrupt. The interrupt service routine read the entire FIFO before returning. The result:

That $1 part freed up 10x the CPU time, which allowed us to handle faster data. Maintenance costs plummeted because the real-time data handling no longer affected every aspect of the code. It also shortened development time, since we weren't constantly searching for CPU cycles.

As always, in designing embedded systems one must trade off recurring costs, engineering costs, maintenance, feature sets and more. |

Last issue's take on multitasking brought a torrent of replies. Here are a few.

Daniel McBrearty wrote:

|

On multitasking - if we're talking about technical problem solving, I'm exactly like you. I need to focus intensely on the problem, possibly for days or longer at a time. I may become a bit antisocial or wake up at 5am with a new approach that I need to explore immediately. Over the years I've discovered that this really works quite well for me. I actually can't stop until I feel I've "cracked it".

Environments that suffer an excess of email and meetings are often very bad at getting even simple things right first time. A lot of this communication is signaling "I'm being productive" to the tribe.

Conversely, quality design and development often involves long periods of looking as if one is doing absolutely nothing. I'm reminded of a story about a sculptor, hired at great expense, who, on being asked why they had spent the last week staring at the slab of marble, replied: "I'm working out which bits to remove."

Hardware and software design are, I suppose, a bit more forgiving, but the idea is very similar. |

Daniel's comment about 'signaling "I'm being productive" to the tribe' made me think of a study (which I can't find) finding that people in open offices, realizing everyone can see whether they are working, work hard at seeming productive rather than at accomplishing important goals.

Nick Soroka cited one of my favorite movies:

|

No, young people also need laser focus. Speaking as someone recently in my 20s, now just over 30, I frequently wanted people to be quiet or leave me alone when trying to architect a system/design or get something to work. It got to the point where deadlines were always looming, so my boss installed an extra-tall cube around my desk. It was kind of embarrassing, now that I think about it.

Most of the stuff I have worked on is med-device: 8-bitters reading a few sensors/buttons and driving a motor. It sounds simple, but it's amazing how much effort goes into a ground-up application. Just look at Microchip's QEI module for measuring motor speed. It took me about a week of full effort to get it working. I read many different datasheets in the PIC18 family looking for extra explanation. It seems some authors are more generous with descriptions than others.

Some young people may claim otherwise, and maybe a select few can multitask. But I would push back on most and say they overestimate their ability, or are just lying to themselves. I will say I jump between projects/interests on my own schedule. To some this may appear to be multitasking, but for me it's taking a break after completing certain milestones. Sometimes it's also frustration with a certain milestone, and I decide to take a break by focusing on something else.

I recently watched the movie 'The Gods Must Be Crazy' again, and the beginning really made me question technology. Civilized man adapted his environment to suit himself rather than adapting to his environment. In doing so, we created a system where we must spend long hours learning how to live in our adapted environment, which is ever increasing in complexity. |

Lynn Koudsi recommends the book Deep Work:

|

My name is Lynn, and I really enjoy your newsletter. I'm 25 years old, working as a robotics developer and part-time C/C++ teacher, and I cannot for the life of me multitask. I sometimes try, but I ultimately fail.

I come from a society that rewards you for hard work and reproaches you for "laziness" and "poor performance". So naturally, I've always had the attitude of working extra hard: making sure I ace my tests, complete side projects, be athletic, and later on become the ideal wife and mother. However, after I graduated and entered the workforce, I quickly realized that I can't be all things at once. I would try to plan a blog post while waiting for a build or answer as many emails as I could during lunch, and at the end of the day, looking back, I would wonder why I hadn't achieved everything I wanted to get done. Then I read Deep Work by Cal Newport, and I got all the answers I needed.

Cal goes into psychological, neurological, and philosophical arguments about why we should embrace deep work (focusing on one thing at a time with 100% of our capacity), and why multitasking is actually not productive at all. He also believes in the following formula: high-quality work produced = time spent * intensity of focus.

He further explains that on a physical level, when you work on cultivating a skill, a particular circuit of neurons fires repeatedly, and a layer of fatty tissue called myelin builds up around those neurons, allowing them to fire faster. The more you cultivate a skill, the clearer the neurological path of that skill becomes. When you're multitasking and distracted, that path is not as clearly cut, resulting in poorer performance in that skill, even if you spend the same time overall trying to master it.

On multitasking, he notes: "... when you switch from some Task A to another Task B, your attention doesn't immediately follow - a residue of your attention remains stuck thinking about the original task. This residue gets especially thick if your work on Task A was unbounded and of low intensity before you switched". He believes that the best work is produced in isolation from all distractions (like the case you described where your wife leaves the office to you). Since our world is now built on real-time messaging and highly stimulating apps, we're forced to sink more and more time into them, whether it's to regularly check work email or to keep on top of the news. Unfortunately, society seems to think that's perfectly fine. But Cal notes that "[e]ven though you are not aware at the time, the brain responds to distractions." Even hearing the *ding* of a notification is enough to distract the brain and break focus.

As a result of reading that book, I now have a sign on my desk that reminds me to do "One thing at a time".

A quick note on music while working: I agree with you. If I ever want to listen to music while doing intellectual work (coding, writing a blog post, or crafting an email), I listen either to jazz/lofi music or to foreign songs whose lyrics I won't understand. When working on an art project, however, I don't mind English lyrics. |

Scott Sweeting also recommends the work of the author of Deep Work:

|

On the topic of multitasking (or not), Cal Newport, author of “How to Become a Straight-A Student,” has been on this beat for years. He has books, blogs, and podcasts on the subject. I've added his blog to my RSS feed (remember those?):

https://www.calnewport.com/blog/ |

Larry Affelt sent this:

|

To set the record straight, I am an old guy too … I wrote my first code on punch cards in the ’70s, and my first circuit design and breadboarding were in the late ’70s and early ’80s. I design the entire product: hardware, PCB, and firmware, along with all the ancillary documentation. The company I work for makes controllers for major commercial cooking appliance manufacturers. Nowadays they are color touchscreen controllers with connections to cloud systems.

Multitasking is the bane of my existence; unfortunately, at a small company it is inevitable and part of doing business. When I am working on a critical product I am mostly left alone, and most people hold off on getting my attention while I am deep in thought in my lab. I can even close my office door, but I rarely do; I’d rather work late than hold up production flow in my company. Task switching can cause tremendous efficiency losses, so I try to keep my workload to one major project with only a few simpler minor ones running in parallel. |

Jon slams open offices:

|

Very interesting to read "Multitasking During Sex"

It reminds me of the scientific instrument company I worked for after graduation. Following a very successful start with a small team in a business centre, they'd built a fancy space-age HQ on the outskirts of town. It was all open-plan, like a cross between an aircraft hangar and a crashed spaceship. For the sales and admin folks it was great. For engineers it was a nightmare. Despite email and internet, managers refused to allow home working, claiming that "bums on seats" were essential, meaning engineers had to be on hand to firefight spurious production issues and to be pestered by salesmen offering customers modifications or impossible specifications.

We ended up having to milk flextime or work weekends to get some quiet hours after the yabbering, clattering office wallahs had gone home on time. Needless to say, talented engineers upped sticks and baled out. I see that the crashed spaceship is now an abandoned curiosity at the back of a business park. |

Bob Dunlop discusses A.D.D.:

|

Very interesting topic! In my late 60s I was diagnosed with A.D.D.

Dealing with the black-hole temptations of multitasking has been a lifelong challenge which I have only recently been able to identify and, with varying degrees of success, attempt to manage ("control," sadly, is not a term that readily applies). A.D.D., I now recognize, was the primary driver behind my seemingly bizarre "career path," which spanned both educational and work experiences: a degree in Microbiology followed by teaching & selling SCUBA, car sales, carpentry, general building contractor, self-trained systems analyst, software engineer (some formal education) for about 15 years, and finally a 12-year "transition" to Nursing (driven by the crashed IT market in the SF Bay Area post-Y2K; 25% of my accelerated Masters in Nursing classmates had a significant IT background). Now that I'm finally retired, no longer teaching Nursing or working in the field, I am finally able to enjoy the blessing of taking the time and making the effort to take stock of what I am doing, why I'm doing it, and why or why not to pursue the next tempting rabbit hole.

One of many items that caught my attention was your reference to what I know as being hyper-focused. I had countless days as a software engineer where I looked up and realized it had been 10-12 hours and I had managed to never address any other promised chore or important task.

Interestingly, having stretches of hyper-focal activity is a somewhat paradoxical aspect of A.D.D. When in that "zone," interruptions were the bane of my existence. My solution when working for Del Monte Foods was to arrive at work at 05:00 with the expectation that I would be free of interruptions until maybe 09:00 or 10:00. After that all bets were off. When I had a hard deadline my solution was somewhat anti-social - a sign on my cubicle in bold print: "Go Away!" Surprisingly, there was never any reprimand or fallout (that I'm aware of) for those signs.

I know I have previously read human factors research on the human brain's fundamental inability to multitask at the level of conscious thought and related intellectual effort. Of course, the autonomic "subsystems" certainly manage all the tasks of staying alive, from breathing & heart rate to low-level metabolic functions. I can easily remember my extreme frustration when jolted out of the zone by "what do you want for dinner?" or a similar interruption from my wife. This did NOT lead to marital harmony. :) Luckily, and happily, we have both become more aware of each other's needs.

One last remark on multitasking and interruptions - the research done in intensive care and other medical units points to those interruptions as being one of the root causes of medication administration errors by RNs.

Numerous solutions have been attempted, with, I believe, varying degrees of success. In my last couple of years in the ICU, the hospitals were instituting a practice of having the RN engaged in medication administration wear a banner (think elementary school crossing guard), a visual cue telling everyone else to leave the RN alone. It was very frequently ignored (especially by physicians, but also by other staff who "needed to talk" to the RN). I don't know if there's been further research on the efficacy of the banner guard, or on the effects of others ignoring the banner.

(A book which helped me identify and understand my A.D.D., and which ultimately led to an actual diagnosis by a professional, is "Driven to Distraction: Recognizing and Coping with Attention Deficit Disorder" by Edward M. Hallowell, M.D., ISBN 9780307743152. His book literally changed my life.) |

Ethan Goan talks about OCD:

|

I myself have an obsessive personality, which can largely be attributed to my OCD. When I am working on something, I want to be working on that one thing and that one thing only. I generally prefer silence; even the ramping up of the fans in my desktop can be enough to cause me some mild irritation. Disruptions are not my friend during these times, and, similar to yourself, I could work for hours on end without noticing time going by at all. I can work under less ideal conditions, but productivity and relative enjoyment start to tank. All this is to say that multitasking is not a skill I am proficient in.

Contrast that with a friend of mine, a computer scientist and business analyst with ADHD, and we see almost the complete opposite. Their ideal conditions include listening to a podcast about a variety of topics, tapping their feet along to a song they have in their head, and using different coloured generic ballpoint pens to create some really impressive artwork. Not only can they do their work whilst engaging in all of these activities, it is when they work best (I honestly have no idea how they do it, but trust me, they do).

These anecdotes aren't meant to generalise about how people with OCD, ADHD, or any other form of neurodivergence operate (or to suggest that neurotypical people can't thrive in an environment that breaks the status quo), but simply to highlight that people are different. There is no one best way for everyone to work and learn, and that is something we should celebrate. I feel that good team leaders will recognise and accept the different working environments that people would like and do their best to accommodate them. My friend's workplace recently gifted them some more pens to expand their palette, recognising that doodling on the job isn't slacking off but a way to stay stimulated and enjoy what they are doing. Little things like this can make a huge difference to a person's well-being. Tiny investments like these will no doubt pay huge dividends in the long term, and they don't need to be physical; they can simply mean promoting working environments bespoke to each person. Artistic supplies would be wasted on someone like me, who is devoid of any drawing prowess and simply wants to be left alone, but a quiet work environment with minimal interruptions wouldn't go unappreciated. I expect that a dedicated office, or at least some noise-cancelling headphones, for someone like me would pay for itself in no time. |

Vlad Z sent the following. He uses the word maltitaskers. I'm not sure if that's a typo or a purposeful pun, as "mal-" comes from the Latin for "bad." But I like it!

|

IMHO there are no maltitaskers. It's like multitasking on a single CPU: everything is sequential but the processing is sliced and the slices are interleaved. The user thinks that the computer is multitasking, but it's not; one task at a time.

One cannot drive and text: not enough hands and not enough heads. That is why cars swerve from lane to lane when people text while driving. |

Tony Ozrelic cites an IBM study - does anyone know where we can find it? He writes:

|

re: multiple projects for engineers, I seem to recall a study done by IBM that showed a peak in productivity at two tasks - less or more than that and an engineer's productivity plummeted!

The reasoning given was that when one task was waiting (e.g. a board turn or a review) you could be working on the other task.

More than two tasks became a game of 'OK, where was I on this task?' just as some other task popped up, so you spent more time re-establishing context than working on tasks.

At one task, it was argued that unless it was extremely complex, Parkinson's Law ("work expands so as to fill the time available for its completion") would run wild and damage overall productivity.

My practical experience with the maximum of two tasks is that it is a very useful rule of thumb, but management will invariably think that 'more is better' and try to shoehorn more tasks in - at which time I break out the rule and explain why it works so well. |

|

Gentle wife, with always a kind word at hand for nearly any nutty stunt I pull, called me “crazy.” Repeatedly.

But when the Annapolis fire engine rolled downhill with 8 kids and no adults aboard, only a lone Walnut tree in its path kept the kids from being dumped in the Chesapeake. The truck was seriously damaged, and the tree came down. A friend hauled it to my yard. Not to the dump, nor to a firewood processor. The reason, of course, was the same one that caused my wife to utter the “crazy” word: my sawmill.

The mill lives next to the garage, and an ever-increasing stack of sawn lumber is piling up all around the yard. I keep promising I’ll haul it all to the barn, but somehow I never get around to it. Of course, the tool junkie in me sees this as an excuse to get a skid loader to move the tons of wood, but that will require even more wifely persuasion than I can muster. At the moment. Though she did attend a heavy equipment auction with me recently.

My reasons for buying the mill were many and varied. But now there’s a more compelling rationale. Turns out, molecules from Poplar trees can be used to greatly increase memory capacities in digital computers. (http://www.huji.ac.il/cgi-bin/dovrut/dovrut_search_eng.pl?mesge127971152105872560).

Yeah, the tech pundits are all agog about the potential benefits. But where will the Poplar come from?

Watch out, TSMC! Ahoy there, Micron! Pretty soon the Finksburg Sawmill will be spitting out thousands of board-feet of Poplar proteins to compete with your wildly-antiquated silicon memories. Think wood chips, not silicon chips.

Surely the VCs will soon start lining up, all anxious to invest in The Next Great Thing: sequestering carbon from trees into iPhone components.

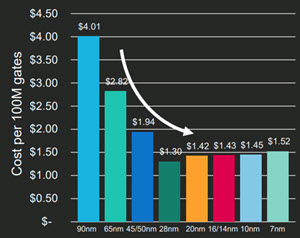

Advanced fab cranking out petabits of memory components. The geometry, measured in meters, is some 10⁹ times bigger and better than the now-obsolete 7 nm parts still produced by aging facilities owned by Intel and others. |