|

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

| Contents |

|

| Editor's Notes |

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

IBM has announced a 2 nm node. Now, it has been a long time since node names meant much, but that works out to over 300 million transistors per square mm. That's around 5 nm square per transistor. 5 nm is about 20 Si atoms. |

| Quotes and Thoughts |

Technology is so much fun, but we can drown in our technology. The fog of information can drive out knowledge. Daniel Boorstin |

| Tools and Tips |

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

John Carter had some thoughts about state machines:

|

State machines are very traditional in our business. Yet I'll make an observation.

Look at the entries in your events vs states table.

* Is it sparse? ie. Is it mostly full of "can't happen / shouldn't happen" entries?

* Can the rows and columns be reordered so the only entries are on the diagonal or near the diagonal?

* Can the rows and columns be reordered so you could divide it roughly into four quadrants where only the on diagonal quadrants have entries and the entries in the off diagonal quadrants "can't happen".

In the last case it's simple. You have two state machines (at least). Your life will be much simpler if you decompose it into two.

In the first two cases, especially the second, your code would be _much_ better expressed as a protothread or a stackless coroutine.

If your answer is no,no,no... then a traditional state machine is the right abstraction for you.

(Another way of visualizing this is if a digraph "dots and arrows" representation of your state machine looks like a pretty neat flowchart.... use stackless coroutines. If the visual representation of dots and arrows is a hideous tangle of every event going from every dot to a bunch of other dots use state machines.)

If your "dots and arrows" picture is connected only by a single dot and some of the dots are on one side and some on the other side and no arrows go from one side to the other.... you have two state machines. |
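As a rough illustration of John's point, here is a minimal sketch of a stackless coroutine in C, using the switch-on-saved-line trick that protothreads rely on. The macro names (`CR_BEGIN`, `CR_WAIT_UNTIL`, `CR_END`) and the door-opener example are hypothetical, invented for this sketch; a real protothread library differs in detail.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical minimal coroutine macros: the resume point is saved
   as a source line number and re-entered through a switch. */
typedef struct { int line; } cr_t;

#define CR_BEGIN(cr)          switch ((cr)->line) { case 0:
#define CR_WAIT_UNTIL(cr, c)  do { (cr)->line = __LINE__; case __LINE__: \
                                   if (!(c)) return false; } while (0)
#define CR_END(cr)            } (cr)->line = 0; return true

/* Illustrative inputs and output for a strictly linear sequence. */
typedef struct {
    cr_t cr;
    bool button;
    bool limit_switch;
    bool motor_on;
} door_t;

/* Call repeatedly from the main loop.
   Returns true once the whole sequence has completed. */
bool door_step(door_t *d)
{
    assert(d != NULL);
    CR_BEGIN(&d->cr);
    CR_WAIT_UNTIL(&d->cr, d->button);        /* was the "idle" state   */
    d->motor_on = true;
    CR_WAIT_UNTIL(&d->cr, d->limit_switch);  /* was the "moving" state */
    d->motor_on = false;
    CR_END(&d->cr);
}
```

The sequence reads top to bottom like the flowchart it came from; the equivalent state table would have one entry per row, all on the diagonal.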

Greg Havenga had some thoughts about my review of the book Click Here to Kill Everybody:

|

The problem is we can't turn off the connectivity.

Some devices are so cheap that you just take them out of service when they are no longer receiving updates - things like IP cameras and such.

But mfrs are building connectivity into TVs and refrigerators and cars and whatnot. This is where the problem lies. It's dangerous to keep these things running when they are no longer updated.

What needs to happen is:

- Separate the function from the connectivity - I have a smart TV that hasn't been updated in years and I cannot turn off the connectivity - I no longer have it connected to my network, but if you disconnect it then it creates its own AP and broadcasts a "connect to me" - for everyone within range to see.

Every device with connectivity needs to have a kill switch for that connectivity.

- Every device with connectivity, unless the primary purpose of the device depends on that connectivity, needs to be able to function without it. If you, for some odd reason, buy a connected refrigerator - or TV, or whatnot, you need to be able to kill the embedded connectivity and be able to use the device without it. But in the name of planned obsolescence, manufacturers are not doing this and making products that are only useful if they are connected.

- IMHO, where possible, every device with connectivity needs to have a method of replacing that connectivity - such that you could remove the outdated module and replace it with something newer, not necessarily from the original manufacturer. This one is harder because if there's control involved there could be liability problems, and who would be liable.

But for systems like my TV, I can connect a Roku box to the outside of it through HDMI, it has CEC and I can control the TV through that, what I can't do is tell the embedded controller to nuke its connectivity function. We need this to just be a thing for connected devices.

- For devices that depend on connectivity, and which have a limited lifespan, we really need to consider disposal / recycling of the device as an integral part of the cost of the device.

- I also have tons of phones, etc., that could be useful if the firmware could be replaced with something more modern - but manufacturers make this impossible - we really need a "right to replace firmware" if the manufacturer doesn't intend to keep supporting it. Of course that has to come with protections and a release from liability for the manufacturer if the user does so, and if another company supplies said firmware, they should take on some of that liability and it should be the user's option to assume that responsibility by supplying their own firmware.

|

|

| Freebies and Discounts |

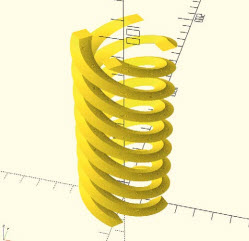

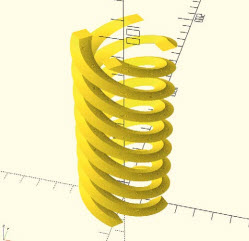

Thanks to Elektor this month's giveaway is a copy of Tam Hanna's book "Technical Modeling with OpenSCAD". OpenSCAD is an open source PC/Mac/Linux solid 3D modeller that seems to be increasingly popular. Tam's book is an easily accessible way to get started using this tool.

Enter via this link. |

| Intel's Protected Mode |

In the last Muse I mentioned Intel's protected mode in their x86 processors. Quite a few readers asked for more information.

A generation has now grown up with some level of exposure to Intel's famed and hated "real mode" architecture. This relic of the 8088 days limits addressing to 20 bits (1 MB). In real mode all addresses in your program are 16 bit values, which the CPU adds to a "segment" value (stored in one of 4 segment registers and shifted left four bits) to compute the final 20 bit location. This technique provided some compatibility with older 8 bit systems while giving an albeit awkward way to handle larger address spaces.
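The real mode calculation can be sketched in a few lines of C. The function name is hypothetical; the arithmetic (segment times 16 plus offset, wrapped to 20 bits as on the 8088) is the standard real mode rule.

```c
#include <assert.h>
#include <stdint.h>

/* Real mode physical address: segment * 16 + offset. */
uint32_t real_mode_address(uint16_t segment, uint16_t offset)
{
    uint32_t linear = ((uint32_t)segment << 4) + offset;
    assert(linear <= 0x10FFEFu);    /* largest possible sum         */
    return linear & 0xFFFFFu;       /* the 8088 keeps only 20 bits  */
}
```

Note that many different segment:offset pairs name the same byte, which is part of what makes real mode pointers awkward to compare.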

In assembly language segment handling is tedious. C compilers mostly insulate the programmer from the confusion of real mode addressing, mitigating some of the impact of this design.

Protected Mode changes everything you ever learned about x86 segmentation while offering direct access to 32 bit addresses. Though segment registers still play a part in every address calculation, their role is no longer one of directly specifying an address. In protected mode segment registers are pointers to data structures that define segmentation limits and addresses. They're now called "selectors" to distinguish their operation from that of real mode.

The selector is a 16 bit value whose upper 13 bits are an index into the descriptor table (more on that momentarily); the low three bits pick the table and the requested privilege level. Selectors live in the fondly remembered segment registers: CS, DS, ES, SS, and two new ones: GS, and FS. Just as in real mode, every memory access uses an implied or explicitly referenced segment register (selector).

The "descriptor table" data structure contains the segmentation information that, in real mode, existed in segment registers. Now, instead of real mode's 4 lousy segments, you may define literally thousands, allocating one 8 byte entry in the descriptor table to each segment. And, each descriptor defines the segment's size as well as its base address, so the CPU's hardware "protection" mechanism (hence the name) can ensure no program runs outside of memory allocated to it.

Each descriptor contains the segment's base, or start, address (a 32 bit absolute address), the segment size (expressed as a 20 bit number that counts either bytes or, with 4k granularity, pages covering the full 32 bit range), and numerous segment rights and status bits.
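To make the layout concrete, here is a sketch that unpacks the base, limit and two of the rights bits from an 8 byte descriptor. The struct and function names are hypothetical; the bit positions follow the standard 32 bit x86 segment descriptor format. Only a small subset of the rights and status bits is decoded.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint32_t base;      /* segment start address                 */
    uint32_t limit;     /* highest valid offset, in bytes        */
    bool     present;   /* P bit of the access byte              */
    bool     writable;  /* true only for a writable data segment */
} segment_info_t;

segment_info_t decode_descriptor(const uint8_t d[8])
{
    assert(d != NULL);
    segment_info_t s;
    uint32_t raw_limit = (uint32_t)d[0] | ((uint32_t)d[1] << 8) |
                         ((uint32_t)(d[6] & 0x0Fu) << 16);
    s.base = (uint32_t)d[2] | ((uint32_t)d[3] << 8) |
             ((uint32_t)d[4] << 16) | ((uint32_t)d[7] << 24);
    /* G bit set: the 20 bit limit counts 4 KB pages instead of bytes. */
    s.limit = (d[6] & 0x80u) ? ((raw_limit << 12) | 0xFFFu) : raw_limit;
    s.present = (d[5] & 0x80u) != 0;
    /* S = 1 (code/data), executable = 0 (data), W = 1 (writable). */
    s.writable = (d[5] & 0x1Au) == 0x12u;
    return s;
}
```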

Thus, the descriptor tells the processor everything it needs to know about accessing data or code in a segment. Accesses to memory are qualified by the descriptor selected by the current segment register.

You, the poor overworked programmer, create the descriptor table before switching into protected mode. Since every memory reference uses this table, the common error of entering protected mode with an incorrect descriptor table guarantees an immediate and dramatic crash.

An example is in order. Code fetches always use CS. A protected mode fetch starts by multiplying the index held in CS by 8 (the size of each descriptor) and then adding the descriptor base register (which specifies the start address of the descriptor table). The CPU then reads an entire 8 byte record from the descriptor table. The entry describes the start of the segment; the processor adds the current instruction pointer to this start to get an effective address.

A data access behaves the same way. A load from location DS:1000 makes the processor read a descriptor by scaling the index in DS by 8, adding the table's base address, and reading the 8 byte descriptor at this address. The descriptor contains the segment's start address, which is added to the offset in the instruction (in this case 1000). Offsets, and segment start addresses, are 32 bit numbers - it's easy to reference any location in memory.
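The table lookup itself is a small calculation. This sketch assumes the standard x86 selector layout, in which the low three bits carry the table indicator and requested privilege level and the upper 13 bits are the index; the function name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define DESCRIPTOR_SIZE 8u

/* Address of the descriptor that a selector refers to. */
uint32_t descriptor_address(uint32_t table_base, uint16_t selector)
{
    uint32_t index = (uint32_t)selector >> 3;   /* upper 13 bits   */
    assert(index < 8192u);                      /* 2^13 entries max */
    return table_base + index * DESCRIPTOR_SIZE;
}
```

Because the index already sits three bits up in the selector, the hardware can get the same result by simply masking off the low three bits.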

Every memory access works through these 8 byte descriptors. If they were stored only in user RAM the processor's throughput would be pathetic, since each memory reference needs the information. Can you imagine waiting for an 8 byte read before every memory access? Instead, the processor caches a descriptor for each selector (one for CS, one for DS, etc.) on-chip, so the segment translation requires no overhead. However, every load of a selector (like MOV DS,AX or POP ES) will make the processor stop and read all 8 bytes to its internal cache, slowing things down just a bit.

It's mind blowing to watch these transactions on a logic analyzer. All 32 bit x86 parts are fast (if you use them correctly - the 386EX boots with 31 wait states). The processor screams along, sucking in data and code at a breathtaking rate, and then suddenly all but stops as it reloads a descriptor into its local cache.

It's all a little mind boggling. The CPU manipulates these 8 byte data structures automatically, reading, parsing, caching, and working with them as needed, with no programmer intervention (once they are set up).

The CPU does more than translate addresses as described. In parallel it checks every memory reference to ensure it behaves properly. If the offset is greater than the segment limit (stored in the descriptor), the processor aborts the instruction and generates a protection violation exception. It won't let you do something stupid. You can even specify that a segment is read-only; a write will create the same exception.
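The checks can be modeled in software roughly as follows. This is a simplified sketch with hypothetical names: it covers only the limit and read-only checks for an ordinary (expand-up) segment, and ignores privilege levels and the other rights bits.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint32_t base;      /* segment start address           */
    uint32_t limit;     /* highest valid offset in segment */
    bool     writable;
} segment_t;

typedef enum { ACCESS_OK, PROTECTION_FAULT } access_result_t;

/* Translate seg:offset for an access of 'size' bytes. */
access_result_t translate(const segment_t *seg, uint32_t offset,
                          uint32_t size, bool is_write, uint32_t *linear)
{
    assert(seg != NULL && linear != NULL && size > 0u);
    /* Every byte of the access must lie at or below the limit. */
    if (offset > seg->limit || size - 1u > seg->limit - offset)
        return PROTECTION_FAULT;
    if (is_write && !seg->writable)
        return PROTECTION_FAULT;
    *linear = seg->base + offset;
    return ACCESS_OK;
}
```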

It is possible to create a single descriptor table entry that puts the system into "flat" mode, where all 4 GB of memory is available in a single absolute segment starting at zero. The descriptor gives a base address of zero and a length of 4 GB. All references to memory then are perfectly linear, emulating the linear addresses pioneered so long ago by Motorola et al. But you lose the hardware protection against illegal accesses to memory. |
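For reference, a flat descriptor can be built like this. The helper names are hypothetical; the field positions are the standard descriptor layout, and the access byte 0x92 (present, writable data) and flags 0xC (4k granularity, 32 bit) are the values commonly used for a flat data segment.

```c
#include <assert.h>
#include <stdint.h>

/* Pack a segment descriptor into its 64 bit in-memory form. */
uint64_t make_descriptor(uint32_t base, uint32_t limit20,
                         uint8_t access, uint8_t flags)
{
    assert(limit20 <= 0xFFFFFu && flags <= 0xFu);
    uint64_t d = 0;
    d |= (uint64_t)(limit20 & 0xFFFFu);              /* limit 15:0     */
    d |= (uint64_t)(base & 0xFFFFFFu) << 16;         /* base 23:0      */
    d |= (uint64_t)access << 40;                     /* access byte    */
    d |= (uint64_t)((limit20 >> 16) & 0xFu) << 48;   /* limit 19:16    */
    d |= (uint64_t)(flags & 0xFu) << 52;             /* G, D/B, L, AVL */
    d |= (uint64_t)(base >> 24) << 56;               /* base 31:24     */
    return d;
}

/* Base 0, limit 0xFFFFF pages of 4 KB: the whole 4 GB space. */
uint64_t flat_data_descriptor(void)
{
    return make_descriptor(0u, 0xFFFFFu, 0x92u, 0xCu);
}
```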

| Responses to "Is 90% OK OK?" |

Many readers responded to "Is 90% OK OK?" in the last issue. Here are some of their thoughts.

Philip Johnston replied almost immediately:

|

[Quoting Miro's argument from the last issue]:

For that reason, in programming you either are 100% in control or you aren't. There is no such a thing like being 90% in control and 10% out of control. There is no grey area.

So the main goal of programming is to always maintain control over the machine, even in the face of errors, such as a stack overflow.

Therefore, it is far better to crash early, and quickly regain control to be able to do something about it in your last-line of defense (such as in the exception handler) than to limp along in a crippled state.

I am 100% in agreement with Miro here. Any corruption indicates a failed program - you have no idea what it's going to do, so how can you make any assertion about its correctness from that point forward?

At the same time, the same developers would NOT enable assertions in production. Why NOT? What's the difference?

This is also my experience. I have *never* worked on a system that I did not control which included assertions in production. The tired justifications for this are typically "performance" and "code size", ignoring the likelihood that these assertions may be triggered during field operation no matter how much testing you've done.

Even worse, I rarely find embedded programmers who use assertions at all! There is likely a mix of reasons for this: not having an assert() that works on their platform, not knowing how to make assert() work, or (perhaps most importantly) not having the training / muscle memory that causes them to reach for it by default.

One of my plans for this year is to produce more content involving Design by Contract. I find that a lot of our members are quite interested in improving the robustness and quality of their systems, and I think this is one simple tool to add to our toolkit.

[Quoting Jack's response to Miro]:

However, there are times when we want to fail into a degraded mode. Things are operating properly - in this mode - but perhaps full system functionality isn't there. This is analogous to the "limp home" mode in modern cars.

I don't think that Miro's view is incompatible with failing into a degraded mode. Certainly, you could have an assertion handler do such a thing. However, depending on the type of error that occurred, you will not be able to guarantee that this degraded mode will even operate properly. If critical memory or state needed by the degraded mode has been corrupted, you may very well cause a catastrophic failure anyway. I've certainly experienced this in a low stakes way - an assertion in SPI-NOR code caused us to trigger a panic handler, which then tried to write the coredump to SPI-NOR, which then sent our program off into the weeds without reaching the specified reboot.

One important aspect with the car example is that it is a distributed system. Failure in one component in a distributed system might allow us to enter into a degraded state, where failure in a singular program (e.g., a ventilator that has a single processor) increases the risk of failing to enter into degraded operation mode due to corrupted state.

Clearly, the complexity of error handling and degradation indicates that it is a point we must carefully consider when designing and building our systems. Teams increasingly take this perspective with OTA, since the logic is "as long as we can update our system reliably, we can get out of trouble". Depending on the impact of faults and errors in our system, we can make the same argument for fault/error handling. |
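For readers who lack an assert() that suits their platform, here is one possible shape for an assertion that stays in production builds. Everything here is a hypothetical sketch: `REQUIRE`, `fault_handler` and `fault_record_t` are invented names, and on a real target the handler would log the record somewhere safe and reset rather than return.

```c
#include <assert.h>   /* only for contrast: assert() vanishes under NDEBUG */
#include <stdint.h>

/* Hypothetical fault record; on target it would live in RAM that
   survives a reset so the next boot can report it. */
typedef struct {
    const char *file;
    uint32_t    line;
    uint32_t    count;
} fault_record_t;

static fault_record_t last_fault;

/* Assumed hook. It returns here so the sketch can run on a host;
   a production handler would not return. */
static void fault_handler(const char *file, uint32_t line)
{
    last_fault.file = file;
    last_fault.line = line;
    last_fault.count++;
}

/* Always compiled in, regardless of NDEBUG. */
#define REQUIRE(cond) \
    do { if (!(cond)) fault_handler(__FILE__, (uint32_t)__LINE__); } while (0)

/* Example contract: the channel index must be in range. */
int read_channel(const int *samples, uint32_t n, uint32_t channel)
{
    REQUIRE(samples != 0);
    REQUIRE(channel < n);
    return (samples != 0 && channel < n) ? samples[channel] : 0;
}
```

As Philip's SPI-NOR story shows, the handler itself should depend on as little of the system as possible.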

Daniel McBrearty wrote:

|

hats off to Miro.

"Also, there is a lot of interest in ARM MPU/TrustZone and most developers would use them in production code. At the same time, the same developers would NOT enable assertions in production."

Wherever possible, I leave asserts IN. Maybe they cause a fatal error (hopefully there is some way to log this) and maybe in the field the watchdog recovers - some such scheme, depending on the app.

But All Errors Are Fatal is a good rule to live by. Also like very much the "there is no such thing as 90% in control" statement. Very true. |

Harold Kraus quoted gun safety rules:

|

NRA Gun Safety Rule: " ALWAYS keep the gun pointed in a safe direction".

There is a safe direction to point a stack?

I get the sense that depending on the fault mode, the over-expanding stack will eventually hit something, regardless of the direction.

Along this line, the same BSA instructor said also to "always know what is behind what you want to shoot at."

However, within a microcontroller address space, everything in that space is potentially behind what you want to shoot at. |

Charles Manning contributed:

|

Engineering answer: it depends.

Real life is far more complicated. "Doing the right thing" can have unintended consequences and kill people too.

So long as it is the right 90%, then I'd rather a system walk wounded than crash early.

My go-to example of this is the Ariane 5 crash. A teenie tiny exception in a bit of software that did not matter at the time led to the destruction of the rocket.

Which would you rather have? An ECU that has a brief "burp" where it runs rough for a second or two or one that reboots causing the car engine to quit while you're doing 60mph through a bend?

If a log file gets full (or a logging disk gets corrupted) and writing a logging message fails then should you reboot? No, I say - I'd rather have the system limp along and preserve its core function.

If a logging system fails would you rather your car refuses to start (continuous rebooting) or would you rather it just ran without logging?

Sometimes stuff that gets corrupted is inconsequential (eg. logging or a glitch on a UI) and can be recovered from while deliberately crashing the system can often be more fatal than continuing with degraded performance.

"For that reason, in programming you either are 100% in control or you aren't."

No, the control is an illusion. In a modern computer (even $5 microcontrollers), you are NEVER in full control. At the lowest level, issues like bus contention between DMA masters and the CPU add variability. Add caching and variability goes up more. Add variable latencies for components with age and changing temperatures, or, say, flash memory, and control goes down further. Any control you think you have is an illusion caused by running at low speeds.

I have worked on a system where we needed to understand where about 12 ns of jitter was coming from during DMA bus contention (after we'd cleared up around 1 usec of jitter on our own). We first asked the FAE who then referred us to the chip design team who then referred us to the IP core vendors that provided the IP blocks they were using. Once we got the info from them we could stitch together the internal timing of the micro and figured out how it interacted with our circuit. Just changing the RAM address we used for DMA reduced the jitter by about 20ns.

Determining when/what/how to respond to failures is a massive challenge. It is naive to think you can apply some overarching rules all the time.

For example consider a self-driving car that has a GPS for one of its sensors. How should it handle a spoofed position (assuming the GPS receiver knows it is being spoofed):

A) Pull up and park on the side of the road and turn off.

B) Stop, reboot and hope the problem clears.

C) Disregard GPS and continue with other sensors.

D) Just keep using GPS and apply some filtering rejecting massive position jumps and hope it was a "glitch".

All the above have problems. There is no clear solution. |

Bob Paddock had some linker script input:

|

The comments about Stack alignment in Embedded Muse 421 brought this comment from my ARM linker script to mind:

/*
 * Set stack top to end of RAM.
 *
 * To prevent obscure problems with printf like library code, when
 * printing 64-bit numbers, the stack needs to be aligned to an
 * eight byte boundary.
 *
 * See "Eight-byte Stack Alignment" - http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.faqs/14269.html
 */

The wisdom of using printf and friends in embedded code is clearly dubious.

Since the stack is usually placed at the end of RAM the bug goes unnoticed as it is usually 8-byte aligned by virtue of being at the end of RAM.

Now if there is an RTOS involved where each task has its own stack, each of them needs that same alignment.

People are left scratching their head as to crashes involving 64-bit numbers. |
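For per-task stacks, the alignment can be forced when the initial stack pointer is computed. A minimal sketch, assuming a full-descending stack as on ARM; the function names are hypothetical and not taken from any particular RTOS.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_ALIGN 8u

/* Round an initial stack pointer down to an 8-byte boundary. */
uintptr_t align_stack_top(uintptr_t top)
{
    return top & ~(uintptr_t)(STACK_ALIGN - 1u);
}

/* Initial stack pointer for a task, given its stack buffer. */
uintptr_t task_stack_top(void *buffer, size_t size_bytes)
{
    assert(buffer != NULL && size_bytes >= STACK_ALIGN);
    return align_stack_top((uintptr_t)buffer + size_bytes);
}
```

Rounding down costs at most seven bytes per task, which is cheap insurance against the 64-bit printf crashes Bob describes.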

John Strohm remembers some old machines. In the Burroughs B5000 all operations use the stack rather than explicit operands. It was unique and ahead of its time:

|

Us old guys, with grey hair and silver beards, remember someone, I think it was Tony Hoare, saying that doing checkout with subscript checking enabled and turning it off in production is similar to wearing one's life jacket in the harbor and taking it off as soon as you venture out into the ocean proper.

Having sailed a 14' sloop on Puget Sound, having had the wheel on an absolutely beautiful 105' schooner on the Pacific Ocean, out of Cabo San Lucas, I understand that concept rather better than most of my colleagues.

It is worth mentioning that both the Plessey System 250 and Burroughs B5000 mainframes did subscript checking in hardware ALL THE TIME. IT COULD NOT BE TURNED OFF. Both machines were howling screaming successes. It is my understanding that the Burroughs machines live on, in emulators running on modern hardware, and continue to run production code, to this very day. |

Mat Bennion wrote:

|

It reminds me of a conversation I had with a Microsoft employee in a pub about why we didn't use their OS in safety-critical embedded. It's hard to come up with a fair answer – speed, size, bug count are all relative. In the end I settled on "it's not designed to fail" – sometimes it will just stop working. That's usually not acceptable for a safety critical system. Some techniques I use:

- agree what length of time the system can tolerate non-operation. It could be seconds or microseconds: once you know, you can design around it. If you can't achieve it, you need a backup outside of the system (e.g. mechanical or dual-redundant computers).

- work out what state data you need to maintain across the reset (which would solve your dialysis machine problem). This should include "why" you decided to reset so you can decide what to do about it, which is best done after you're back in control (you don't want to return from functions with a corrupt stack)

- give the software some good recovery options – a soft reset might fix corrupt RAM but you need a board-level reset to fix stuck peripherals

- categorise failures (transient RAM corruption vs. permanent RAM device failure) and choose the right response – which could be limp home, or could be "stop operating" (if you know your RAM is broken, probably best to switch off).

- By definition, you can't recover from an unrecoverable error (like faulty RAM) so you need to design the response at a system level – e.g. dual redundancy.

|
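Mat's second point, carrying the "why" across a reset, might be sketched like this. All names and the magic value are hypothetical. On a real target the record would sit in a RAM section the startup code does not clear (how to declare that is toolchain specific); here it is an ordinary static so the sketch runs on a host.

```c
#include <assert.h>
#include <stdint.h>

#define RESET_MAGIC 0x52535421u   /* arbitrary marker value */

typedef enum {
    RESET_UNKNOWN,          /* power-on, or record not trustworthy */
    RESET_WATCHDOG,
    RESET_ASSERT,
    RESET_STACK_OVERFLOW
} reset_reason_t;

typedef struct {
    uint32_t magic;
    uint32_t reason;
    uint32_t check;         /* ~(magic ^ reason): cheap integrity check */
} reset_record_t;

static reset_record_t reset_record;

/* Call just before forcing the reset. */
void reset_record_store(reset_reason_t why)
{
    assert(why != RESET_UNKNOWN);
    reset_record.magic  = RESET_MAGIC;
    reset_record.reason = (uint32_t)why;
    reset_record.check  = ~(RESET_MAGIC ^ (uint32_t)why);
}

/* Call early after boot. Consumes the record, so a later
   power-on with stale RAM contents reads as RESET_UNKNOWN. */
reset_reason_t reset_record_take(void)
{
    reset_reason_t why = RESET_UNKNOWN;
    if (reset_record.magic == RESET_MAGIC &&
        reset_record.check == ~(reset_record.magic ^ reset_record.reason)) {
        why = (reset_reason_t)reset_record.reason;
    }
    reset_record.magic = 0u;
    return why;
}
```

The decision about what to do with the reason is made after the reboot, when the stack and peripherals are in a known state again.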

John Carter had some comments that intersect thoughts on assertions and the Schiaparelli anomaly:

|

With the tale of the Hard Landing, trying to think through what I would do...

When you're low on fuel, heading towards a large rock full of gravity at 150m/s and accelerating.... I'm not convinced there are any "software degraded modes leading to a safe landing".

It's a bit like saying, whoops, "I have accidentally veered over the solid white line into the stream of oncoming traffic, I better engage a safe degraded mode."

There isn't one.

The only safe thing to do is not do that.

Parallel systems make sense in the realm of infinite h/w and s/w and test budgets. Unmanned space craft? Very very tight budgets on mechanical size and weight, parallel h/w, development and test resource.

It would have to be a completely independently written system, handing control of sensors, historical sensor data and control outputs over in very short amount of realtime, by a reliable supervisor. Each parallel system would presumably receive half the review and test and fix resource a single controller would have.

Yet, the error seems amenable to "test and fix". "pre-flight simulation already showed that values larger than 150deg/sec were possible"

ie. The root cause was failure to fix an error reported by test.

Halving the resource to do that would double the probability of that class of error. (The base assumption of parallel systems is that the defects in the second system will be uncorrelated with the first. We know this is not quite true.).

ie. Thus the root cause seems to be a project management one, not a software one. ie. One of the lessons not learnt from the Vasa. https://faculty.up.edu/lulay/failure/vasacasestudy.pdf

What would I do? I'd put a loud fatal error check in the controller on the designed for limits. If testing showed we could hit that limit, there would be a loud fatal error, that under test pin pointed where the designed for limit was exceeded, permitting rapid fix and retest.

Half the reluctance on the part of project managers to fix "unlikely" errors comes from tests that fire sporadically and don't pinpoint the error location.

A key design criterion for tests should be that they are repeatable and are good at localizing the defect. |

Paul Carpenter wrote:

|

Hmmm my immediate responses are

a) Cost V Benefit - If unit is often power cycled, on for minimum time and does non-harmful thing is it just better to overspecify RAM and create large gap between stack and ANYTHING else.

b) "quis custodiet ipsos custodes", who bothers checking the MMU and error catching hardware is VALID. You can get bit flips in ANY register or signal path at wrong time. So how much do you want to check see (a)

I have seen many bad examples of stack placement in many architectures, some classics:

- Stack above RAM vector table (oh dear our interrupts are screwed)

- Stack at bottom of RAM not address 0 (The ARM Non-Existent Memory vector did NOTHING)

- Stack above overlay code area

Generally I find most MMU are good at some things but bad at others. They are great for OSes to protect "user" processes from each other and any kernel/driver level. They are bad at protecting a process from itself as they are generally compiled with its own stack as part of the contiguous process image.

On simpler systems where you compile one image for the whole system with limited RAM there is often no MMU, but there may be protection like NON-existent memory traps (rarely used), sometimes there are stack limit traps rarely used. My issue with these is often we have Stack overflow traps but rarely stack underflow traps. The other issue rarely caught is no barriers to stop stack being overwritten by anything else.

If you get corruption usually in a heap area which process, state machine or other part of your code OWNS the corrupted variables? How do you recover from this situation? Especially with every new() or array allocated as local array in a function/block.

You basically will always have issues, but how many checks and safeguards do you need?

Just loading some new fangled marketing spiel named software or feature is rarely the answer. Too often I see the hallowed saviour being a new version of a library, but also needs hardware verification as well. |

|
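Where no hardware stack limit trap exists, a software guard region is one cheap partial substitute. This is a sketch with hypothetical names and an arbitrary guard pattern; it only detects an overflow (or a stray write into the guard words) after the fact, so it is no replacement for the hardware checks Paul describes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define GUARD_WORD  0xDEADBEEFu   /* arbitrary pattern */
#define GUARD_WORDS 4u

/* Fill guard words at the low end of a descending stack. */
void stack_guard_init(uint32_t *stack, size_t words)
{
    assert(stack != NULL && words > GUARD_WORDS);
    for (size_t i = 0; i < GUARD_WORDS; i++)
        stack[i] = GUARD_WORD;
}

/* True while the guard region is intact. Poll it from a timer
   tick or the idle loop and treat failure as fatal. */
bool stack_guard_ok(const uint32_t *stack)
{
    assert(stack != NULL);
    for (size_t i = 0; i < GUARD_WORDS; i++)
        if (stack[i] != GUARD_WORD)
            return false;
    return true;
}
```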

| Another Recommended Book on Failures |

In the last issue Louis Mamakos suggested Space Systems Failures. Joe Loughry had another suggestion:

|

Louis Mamakos wrote about one of my favorite books, "Space Systems Failures: Disasters and Rescues of Satellites, Rockets and Space Probes" in TEM 421; another good book I highly recommend is "What Went Wrong?: Case Histories of Process Plant Disasters" by Trevor Kletz.

While not about software, it's full of good advice. The best way to avoid fire in a chemical plant is not to have anything around that can burn. But if you can't have that, the next best thing is minimization: if you only need fifty gallons of flammable material at a time, don't keep ten thousand gallons on site. "The second best choice is to control the hazard with protective equipment, preferably passive equipment, as it does not have to be switched on. As a last (but frequent) resort, we may have to depend on procedures. Thus, as a protection against fire, if we cannot use nonflammable materials, insulation (passive) is usually better than water spray turned on automatically (active), but that is usually better than water spray turned on by people (procedural)."

This is relevant to Bruce Schneier's observation about "toxic data", e.g. when OPM got hacked in 2015 and it was discovered they had kept around evidence from completed security clearance investigations. All that evidence ought to have been destroyed as soon as the clearance decision (yes/no) had been made; there was no good reason to keep it afterwards. The cache made for one-stop-shopping for anyone with an interest in blackmailing current security clearance holders. It was a perfect example of keeping around unnecessary amounts of flammable, toxic, or volatile waste material—especially in light of the fact that disposing of it properly would have been extremely cheap compared to the cost of disposing of toxic materials such as contaminated oil.

Kletz's book is full of entertaining stories, such as the time when a freeze dryer full of pork rinds detonated. (Liquid nitrogen goes in; make sure to keep stirring. Lost electrical power; stirring stops. Over the next few hours, the supercold liquid nitrogen (77 kelvins) condensed a thick layer of liquid oxygen (boiling point: 90K) from the air. Later the power came back on, stirrer starts automatically, and the resulting ton of fuel-air explosive detonates from friction.) We don't get many explosions in computer science, but this book is full of advice that transfers:

On reliability:

"The trip [a word meaning an automatic safety mechanism] had the normal failure rate for such equipment, about once in two years, so another spillage after about two years was inevitable. A reliable operator had been replaced by a less reliable trip."

On making it hard for accidents to happen:

"Isolations should not be removed until maintenance is complete. It is good practice to issue three work permits—one for inserting slip-plates (or disconnecting pipework), one for the main job, and one for removing slip-plates (or restoring connections)."

On computer programmers:

"When the manager asked the programmer to keep all variables steady when an alarm sounds, did he mean that the cooling water flow should be kept steady or that the reactor temperature should be kept steady?"

On accident reports:

• "Accident reports are not just bait to get us to read codes and standards. They tell us the important thing: what really happened."

• "Errors by managers are signposts pointing in the wrong directions."

• "(When investigating any fire or explosion, we should always ask why it occurred when it did and not at some other time.)"

On measurements:

"We should always try to measure the property we wish to know directly, rather than measuring another property from which the property we wish to know can be inferred."

On finding root causes:

"Gans et al. say that big failures usually have simple causes while marginal failures usually have complex causes. If the product bears no resemblance to design, look for something simple, like a leak of water into the plant. If the product is slightly below specification, the cause may be hard to find. Look for something that has changed, even if there is no obvious connection between the change and the fault."

Finally:

"Many of the incidents occurred because operators did not realize how fragile tanks are. They can be overpressured easily but sucked in much more easily. While most tanks are designed to withstand a gauge pressure of 8 in. of water (0.3 psi or 2 kPa), they are designed to withstand a vacuum of only 2½ in. of water (0.1 psi or 0.6 kPa). This is the hydrostatic pressure at the bottom of a cup of tea." |

|

| Failure of the Week |

From Guy Farebrother:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

| This Week's Cool Product |

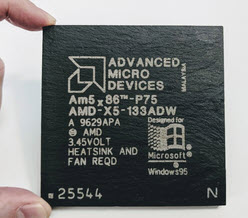

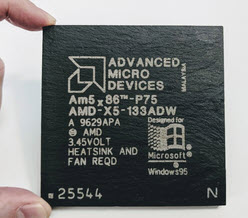

Rick Ilowite sent this. It's a series of coasters that look like processors. Here's one example:

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

| Jobs! |

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad.

|

| Joke For The Week |

These jokes are archived here.

A psychologist was studying the difference between mathematicians and engineers. He set up a test where each was allowed to walk toward a desirable object (when I learned the joke it was an attractive young woman, but I want to be inclusive here).

But ... they were told they had to first cover half of the distance, then half of the remaining distance, and so on.

The engineer immediately started walking while the mathematician just stood there chuckling to himself.

The researcher asked why he wasn't going anywhere, and he explained "any fool knows that with a progression like that you can never actually get there."

When that was pointed out to the engineer, he replied "Of course I know that, but I also know I can get close enough for all practical purposes." |

| About The Embedded Muse |

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com.

The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.

can take now to improve firmware quality and decrease development time. |