|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

|

||||

| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me. |

||||

| Quotes and Thoughts | ||||

Measure what is measurable, and make measurable what is not. Galileo Galilei |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Caron Williams had two more book suggestions:

|

||||

| Freebies and Discounts | ||||

Courtesy of the folks at MAGICDAQ, March's giveaway is one of their Python-powered USB DAQs and a compatible hardware testing module. See the review later in this issue.

Muse readers can get a 10% discount (valid till the end of June) on these if they send an email to support@magicdaq.com and mention the Embedded Muse. Enter the giveaway via this link. |

||||

| Frustrating Off-The-Shelf Software, and How Much We Need It... Or Do We? | ||||

A reader wrote to relate a story of how they found both Integrity and VxWorks to be unusable in their application due to bugs... bugs the vendors were not interested in fixing. They switched to Linux and happily shipped a half-million units. Have you had similar experiences using software, like OSes, from vendors? In particular:

And, to add some spice to this, Daniel Way wrote about the issues we have by not using off-the-shelf components:

Then there's this report which shows how most routers are running old, unsupported versions of Linux with unpatched security holes. It re-raises an old question: how does one support products based on complex and well-known software components? No one can support a product forever, so is using these packages inherently making your customers vulnerable? |

||||

| The Pandemic and Us | ||||

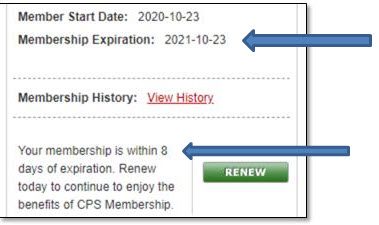

In Muse 397 last May I reported on a survey of Muse readers about how they were faring in the SARS-CoV-2 pandemic. At that point 2% of us had lost our jobs. Another 2% were not working and not being paid. Only 1% were not working but continued to draw a salary. 13% were still going to the office every day, which left a whopping 82% of us working from home. How about now, a year into the pandemic? Specifically:

A year from now, what do you expect your work life to look like? Are you happy with your current work environment? Any other comments? Email me and I'll report the results. |

||||

| R&D? No Such Thing | ||||

I was reviewing some code not long ago and had the not-so-wonderful privilege of digging through a change log consisting of hundreds of entries for a single module. This was a state machine, and a rather complex beast at that. All of the device's actions were sequenced through the state machine, and each table entry consisted of the usual function pointers as well as dozens of constants that defined delays, steppings, and the like. The final, shipped, state table looked reasonable, though I wondered where the various constants came from.

Digging through the history it became pretty clear: Absolute chaos. The designers had no idea what they were doing. Updates to those constants were made sometimes a half-dozen times a day. Like some high-tech prestidigitators the developers were fumbling changes and apparently just looking to see what happened with each change. The notion of a design just did not exist.

This was one of too many examples I have seen of a classic project failure mode: poor science. The developers are thrust into building something before it's absolutely clear how things should work. Now, sometimes that's unavoidable. But it's always a schedule killer. And when a product goes out the door tuned to perfection for reasons no one understands it's usually a perfect example of chaos theory. Fragility. A butterfly flapping its wings in Brazil might cause a complete meltdown.

The better part of a half century ago we were building a device which required calibration to known standards. The cal process wound up determining coefficients of a polynomial; that polynomial was then used to solve for measured parameters. We discovered, as oh-too-many had before us, that with enough terms over a limited range you can get a polynomial to solve for pretty much anything. And with pretty much zero accuracy. A perfect calibration could be so fragile that a sample microscopically outside the calibration range gave wild results.
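The calibration trap is easy to reproduce. The sketch below is only an illustration under stated assumptions: the sensor response is a made-up smooth curve, not the device described above, and exact Lagrange interpolation stands in for whatever fitting method was actually used. The names `lagrange_eval` and `true_response` are hypothetical. It fits a degree-10 polynomial through 11 calibration standards, then evaluates it just past the last standard.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate the unique polynomial passing through all (xs, ys) points at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def true_response(x):
    # Hypothetical smooth sensor response, chosen only for illustration.
    return 1.0 / (1.0 + 25.0 * x * x)


# Eleven evenly spaced calibration standards spanning the range [-1, 1].
cal_x = [-1.0 + 0.2 * k for k in range(11)]
cal_y = [true_response(x) for x in cal_x]

# At the standards themselves the calibration looks perfect.
fit_error_at_standards = max(
    abs(lagrange_eval(cal_x, cal_y, x) - y) for x, y in zip(cal_x, cal_y)
)

# A sample only 5% beyond the last standard is a different story.
x_out = 1.05
error_outside = abs(lagrange_eval(cal_x, cal_y, x_out) - true_response(x_out))

print("worst error at the standards:", fit_error_at_standards)
print("true value just outside range:", true_response(x_out))
print("error just outside range:", error_outside)
```

With these assumed numbers the polynomial reproduces every standard, yet the error slightly outside the calibrated range is larger than the entire span of the signal. Fewer terms, or a model derived from the physics, would give up the "perfect" fit in exchange for sane behavior at the edges.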
When the science or technology behind a product is not well understood you can expect one of two results (maybe both): huge schedule delays, and/or a device that just does not work very well.

We routinely use a term that should not exist: R&D. There's no such thing. There's R (research), which cannot be scheduled. It's a dive into the unknown, it's where we make mistakes and learn things. Finding the cure for cancer (schedule that!). Then there's D (development). D can be scheduled. We have a pretty darn good idea of what we're going to build and how it will work. Maybe not perfect, but there's more clarity than fog.

Conflating R and D is mixing development with unknown science, and is one of my "Top Ten" reasons for project failures. |

||||

| Review of the MAGICDAQ | ||||

One of the hassles I struggle with is sampling and generating signals from my PC to different circuits on the bench. I generally wind up using a single-board computer's analog and digital inputs. This used to be simple, using mbed.com's once-nifty web compiler. Alas, that has become so complexified I've abandoned it and now use regular IDEs. That's a less than ideal solution when you want to log data to a disk file.

The MAGICDAQ is a nice alternative (it's also this month's giveaway). It's a general data acquisition device seemingly targeted at benchtop automation and testing. As the picture shows, it lives in a uniquely shaped box which seems designed to be mounted in a test stand. Or, one could use it just lying on the bench.

The specs tell most of the story:

Like so much test equipment, the MAGICDAQ is controlled from a host computer over USB. It's electrically isolated from the USB, a very nice feature. As the picture shows, connections are via screw terminals. That's nice in a lab environment where you might be connecting all sorts of random circuits to it.

It is controlled via Python programs, and the provided library makes this very simple. For instance, to read an analog input:

    # Import MagicDAQDevice object
    from magicdaq.api_class import MagicDAQDevice

    # Create daq_one object
    daq_one = MagicDAQDevice()

    # Connect to the MagicDAQ
    daq_one.open_daq_device()

    # Single ended analog input voltage measurement on pin AI0
    pin_0_voltage = daq_one.read_analog_input(0)

The upside to a Python interface is that it's largely platform independent, and you can make the thing do pretty much anything. I do, though, wish it had a Windows GUI interface for routine tasks when one doesn't want to write code. The vendor provides plenty of sample scripts, making the learning curve pretty short.

All in all, a very nice device at an attractive price point ($185 USD). Also available is an expansion board ($235) which connects to the MAGICDAQ via a ribbon cable. That provides:

More info here. |
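Since the itch being scratched here is logging bench data to a disk file, a minimal sketch of that step may help. This is an illustration, not vendor code: `log_samples`, the CSV column names, and the file name are all hypothetical, and a stand-in reader replaces the hardware so the script runs with nothing attached. With a device connected, the reader would presumably wrap the `daq_one.read_analog_input(0)` call from the snippet above.

```python
import csv
import os
import tempfile
import time


def log_samples(read_voltage, path, count, interval_s=0.0):
    """Take `count` readings from read_voltage() and write them to a CSV file."""
    start = time.monotonic()
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["elapsed_s", "volts"])
        for _ in range(count):
            elapsed = time.monotonic() - start
            writer.writerow([f"{elapsed:.3f}", read_voltage()])
            time.sleep(interval_s)
    return path


# Stand-in reader so the sketch runs without hardware (assumption).
# With the device, one might pass: lambda: daq_one.read_analog_input(0)
fake_readings = iter([0.10, 0.25, 0.40])

log_path = log_samples(
    lambda: next(fake_readings),
    os.path.join(tempfile.gettempdir(), "ai0_log.csv"),  # hypothetical file name
    count=3,
)
print("wrote", log_path)
```

Passing the reader in as a function keeps the logging loop independent of the hardware, so it can be exercised on any machine before the DAQ is wired up.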

||||

| Failure of the Week | ||||

From Scott Rosenthal:

|

||||

| This Week's Cool Product | ||||

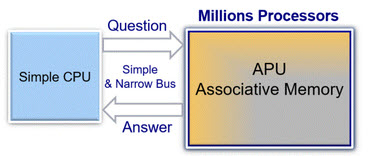

One bottleneck we sometimes face is getting stuff from memory at a rapid rate. GSI Technology has a novel solution: move processors into the memory array. Their Gemini "In-Place Associative Computing" device places millions of processors right in the SRAM array. Now, these are pretty brain-dead CPUs, capable of only simple operations. But the company claims that for some applications you can expect a two-orders-of-magnitude speedup over a conventional CPU-to-RAM configuration.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here.

In honor of yesterday being Pi Day:

3.14% of sailors are pi-rates.

The mathematician says, "Pi r squared." The baker replies, "No, pies are round. Cakes are square." |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. can take now to improve firmware quality and decrease development time. |