
You may redistribute this newsletter for non-commercial purposes. For commercial use, contact jack@ganssle.com. To subscribe or unsubscribe, go here or drop Jack an email.

Editor's Notes

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar, held on-site at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here, here and here. Jack's latest blog: Crummy Tech Journalism.

Quotes and Thoughts

"Errors are not in the art, but in the artificers." Isaac Newton, Principia Mathematica

Tools and Tips

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Niall Cowling has a pair of must-read articles about the use of mutexes here and here. Embedded Artistry (a great name!) has an excellent article about using callbacks in embedded systems. Is strncpy() safer than strcpy()? You may be a little surprised.
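The strncpy() surprise is easy to demonstrate. When the source is at least as long as the size argument, strncpy() copies exactly that many characters and writes no terminating '\0'. When the source is shorter, it pads the rest of the destination with zeros. A minimal sketch follows. copy_unsafe() and copy_bounded() are hypothetical helpers, not library functions:

```c
#include <assert.h>
#include <string.h>

/* Copies with strncpy() alone. If strlen(src) >= n, dst is NOT
   null-terminated and must not be treated as a C string. */
void copy_unsafe(char *dst, const char *src, size_t n){
    strncpy(dst, src, n);
}

/* Hypothetical helper: same copy, but always terminated, at the
   cost of silently truncating to n - 1 characters. */
void copy_bounded(char *dst, const char *src, size_t n){
    assert(n > 0);              /* dst[n - 1] below needs n >= 1 */
    strncpy(dst, src, n);
    dst[n - 1] = '\0';
}
```

Take char buf[4] as an example. copy_unsafe(buf, "firmware", sizeof buf) leaves buf holding f, i, r, m with no terminator, so a later strlen() or printf("%s") runs off the end. copy_bounded() yields "fir" instead. Silent truncation can itself be a bug, so check strlen(src) < n where it matters.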

Freebies and Discounts

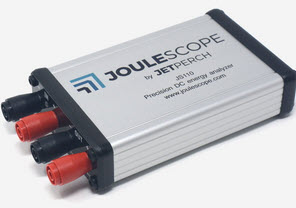

The fine folks at Joulescope are making one of their Joulescope energy meters available for the June giveaway. I reviewed it here. It measures current over an astonishing range, from 1.5 nA to 3 A, with short bursts to 10 A, at 2 MSa/s.

Enter via this link.

MAGA: Making Assert() Great Again

A prediction: your next firmware project will have errors. Hardly rocket science, but the implication, to me, is that wise developers will seed their code with constructs that automatically find at least some classes of these bugs. I call that process "proactive debugging."

One example is the liberal use of the assert() macro, one of the most under-utilized assets that C provides; it's rare to find much use of it. For those who have forgotten, assert() does nothing if its argument is true (nonzero). If the argument is false, it writes a diagnostic (the failed expression, the source file name and the line number) to stderr and then calls abort(). Now, that's pretty awful for many embedded systems, where there may be no stderr or printf() and nothing sensible for abort() to do. But it's easy enough to recode the macro to take whatever action is appropriate in your system. Generally, assert() should somehow indicate an error and then initiate some debugging action, like stopping at a breakpoint or starting a trace.

Languages like Ada and Eiffel have a similar but much more powerful resource called Design by Contract. DbC, like assert(), does runtime error checking, but is also an essential part of the code's documentation. The statements make a powerful, uh, statement about what the code should be doing. We should do no less in C using assert(). For instance:

```c
#include <assert.h>
void function(int arg){
    assert((arg>10) && (arg<20));
    ...
}
```

This both flags an error if a bad argument is passed to the function and tells the reader what the function expects. Consider this function:

```c
#include <math.h>

typedef float float32_t;   /* 32-bit float, as defined by e.g. CMSIS */

float32_t solve_pos(float32_t a, float32_t b, float32_t c){
    float32_t result;

    result = (-b + sqrtf(b*b - 4*a*c))/(2*a);

    return result;
}
```

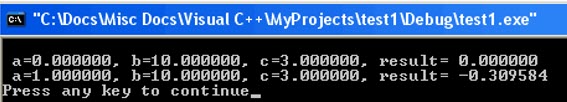

What could possibly go wrong? Well, if variable "a" were zero, that would mean a divide-by-zero, which could be catastrophic. Except that C often ignores this error: IEEE 754 floating point normally doesn't trap on a divide-by-zero. I ran the code through Visual Studio with a=0 and got a result rather than a fault.

Of course, the result is bogus. What is scary is that the bogus result will propagate up the call chain, with more math being done on it at each level, until the machine decides to pump 100 kg of morphine into the patient's arm. Better: use assert():

```c
#include <assert.h>
#include <math.h>

typedef float float32_t;   /* 32-bit float, as defined by e.g. CMSIS */

float32_t solve_pos(float32_t a, float32_t b, float32_t c){
    float32_t result;

    assert (a != 0);
    assert ((b*b-4*a*c) >= 0);

    result = (-b + sqrtf(b*b - 4*a*c))/(2*a);

    assert (isfinite(result));

    return result;
}
```

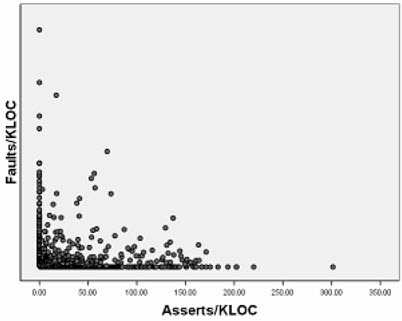

(A great argument can be made for using error-handling code instead of assert(), though it can be very hard to know what to do with a detected problem like a divide-by-zero. And only use assert() to detect honest-to-God bugs, situations where the code is just wrong.)

The first assert() looks for a==0; the second for an attempt to take the square root of a negative number; and the third for a division result that can't be expressed as a finite IEEE 754 floating-point number. These are all likely programming errors.

assert() is disabled when NDEBUG is defined, so any side effect inside an assert() disappears with it. For example, assert(a=0) is an assignment rather than a comparison: it changes a when assertions are enabled but not when NDEBUG is defined, so the code's behavior depends on NDEBUG. In Muse 317 I describe a small change that makes this macro much safer.

There's evidence that using lots of assert()s leads to better code, as the macro will find errors that testing may not. A divide-by-zero might not cause a symptom to appear, but it is surely something we'd like to catch. At least one study shows that more assert()s correlate with fewer shipped defects:

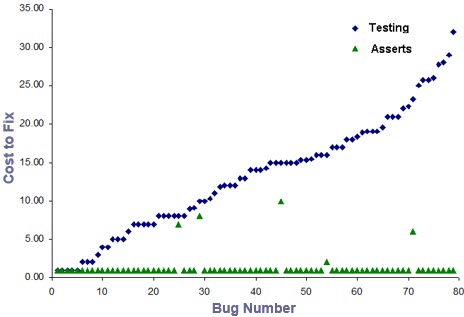

From Gunnar Kudrjavets, Nachiappan Nagappan, and Thomas Ball, "Assessing the Relationship between Software Assertions and Code Quality: An Empirical Investigation."

And since assert() tends to find errors near where the problem first occurs, instead of millions of cycles later when a symptom appears, its promiscuous use greatly reduces the time needed to find a bug:

Blue bugs were found via conventional debugging; those in green were detected using assert(). Derived from L. Briand, Y. Labiche, and H. Sun, "Investigating the Use of Analysis Contracts to Support Fault Isolation in Object Oriented Code."

Assume your code will have errors. Seed it with proactive debugging constructs. Among the most powerful of these is assert().
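The stock assert() reports through the C library's stderr and then calls abort(), which rarely suits a target with no console. Below is a minimal sketch of a recoded macro along the lines described earlier. It assumes a hypothetical handler, on_assert_failure(), and hypothetical globals that a debugger can inspect; none of these names come from a standard library or vendor API:

```c
#include <assert.h>   /* standard assert(); used here only by host-side tests */
#include <stdint.h>

/* Hypothetical failure record that a debugger, or a post-mortem
   memory dump, can inspect after an assertion fires. */
const char * volatile g_assert_file  = 0;
volatile int          g_assert_line  = 0;
volatile uint32_t     g_assert_count = 0;

/* Hypothetical handler. On a real target this is where you would stop
   at a breakpoint, start a trace, or log and reset. In this host
   sketch it only records the failure and returns. */
void on_assert_failure(const char *file, int line){
    g_assert_file = file;
    g_assert_line = line;
    g_assert_count++;
    /* e.g., with GCC on an ARM Cortex-M and a debugger attached:
       __asm volatile ("bkpt #0"); */
}

/* Like the standard macro, ASSERT() compiles to nothing when NDEBUG
   is defined, so never put side effects inside it. */
#ifdef NDEBUG
#define ASSERT(expr) ((void)0)
#else
#define ASSERT(expr) ((expr) ? (void)0 : on_assert_failure(__FILE__, __LINE__))
#endif
```

What the handler should do in a shipped product (halt, reset, or log and continue) is a system-level decision.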


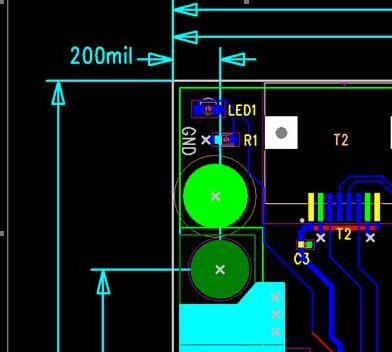

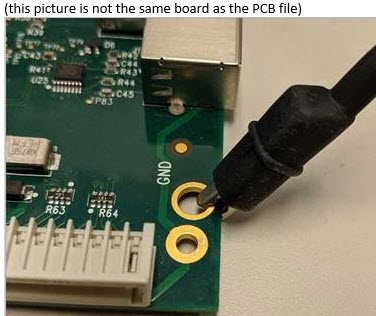

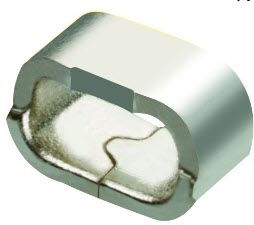

More on Adding Ground Test Points

After thoughts on this ran in the last Muse, several readers had more ideas about adding test points for a scope ground connection to a PCB. Carl Van Wormer wrote:

Luca Matteini (and a couple of other people) suggested:

Chris Brown had a warning:

More on Autonomous Cars and Ethics

Scott Winder responded to a link I posted to Phil Koopman's treatise on ethics in self-driving cars:

Though I'm enthusiastic about autonomous cars, I think that it will be a long time before we see affordable and reliable level 5 autonomy. This is a very hard problem. It's probably 90% solved, but the next 10% will require 90% of the development time. As someone said: trains still crash. And they run on tracks!

This Week's Cool Product

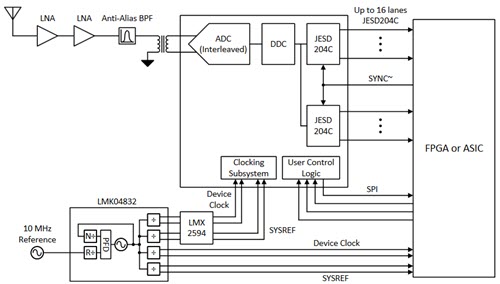

The technical press is going gaga this week over TI's new ADC12DJ5200RF ADC. This is an astonishing part that samples at 10.4 GSa/s (single-channel mode; 5.2 GSa/s per channel in dual-channel mode) with 12 bits of resolution. In one mode there's a timestamp feature to mark a specific acquisition. It also includes a digital down converter (DDC) which, if enabled, provides decimation. As I understand the data sheet, the DDC will also mix the signal with a numerically-controlled oscillator (NCO), rather like a superhet mixer, to isolate frequencies of interest. There are four of these oscillators, making fast frequency hopping possible. Want to build a wide-bandwidth receiver with this part? Here's the circuit:

At $2786 each, this part has limited applicability; suggested applications include oscilloscope front ends and the like. It appears to be a pre-production part at this time.

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors.

Jobs!

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.

Joke For The Week

Note: These jokes are archived here. Dejan Durdenic sent this riff on Dallas's one-wire interface: a zero-wire interface.

About The Embedded Muse

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.