||||

You may redistribute this newsletter for noncommercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go to https://www.ganssle.com/tem-subunsub.html or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

Welcome to Muse 0x100! This is the 256th Muse, which is, of course, a very round number to those of us working in the binary space. Muse 1 went out in June of 1997, nearly 17 years ago. Over the years readers have been very supportive and kind, and I've exchanged tens of thousands of emails with you all.

In Muse 17 there was a fake interview with Bjarne Stroustrup where he supposedly revealed that C++ was a joke. Quite a few readers thought the interview was real. Interestingly, he'll be at EELive! (the current name for the Embedded Systems Conference) at the end of the month.

I expressed concern about Y2K in Muse 29, and sure got that one wrong. But now we have the Unix rollover to look forward to in 2038...

Muse 52 covered the MISRA standard in October 2000; since then MISRA has seen two complete rewrites. It has only been in the last few years that MISRA has gained a lot of traction, which validates Leibson's Law that says it takes ten years for us to adopt disruptive technologies.

Muse 181 started the regular series "Tools and Tips," where readers point out tools and ideas they love or hate. That information goes to a web page, which is downloaded three to four thousand times per month. There are now over 200 submissions on that page, and its size means it's due for a reorganization. Please keep sending your tool suggestions.

Most of the changes to the Muse over the years were a result of suggestions from the readers. More are coming. Many of you have sent links to interesting articles, tool suggestions, comments, and questions. Thanks so much!

We embedded people are rather like islands, small teams of developers working separately from the rest of the industry. The Muse's reason for being is to spread knowledge among us.

At the current rate Muse 0x200 will appear around 2026. I plan to keep releasing them on a biweekly schedule, except for the Northern-Hemisphere summers. Please keep those emails coming! |

||||

| Quotes and Thoughts | ||||

Let us change our traditional attitude to the construction of programs: Instead of imagining that our main task is to instruct a computer what to do, let us concentrate rather on explaining to human beings what we want a computer to do. - Donald E. Knuth |

||||

| Tools and Tips | ||||

Please submit neat ideas or thoughts about tools, techniques and resources you love or hate. Chris Jones is intrigued by MegunoLink Pro:

|

||||

| The History of the Microprocessor | ||||

Nearly three years ago I wrote a four-part series for the now-defunct Embedded Systems Design magazine about the history of the microprocessor. The first two parts follow; the rest will run in coming Muses.

Part 1: The Birth of Electronics

If one generation represents twenty years, as many sources suggest, then two entire generations have been born into a world that has always had microprocessors. Two generations never knew a world where computers were rare and so expensive only large corporations or governments owned them. These same billions of people have no experience of a world where the fabric of electronics was terribly expensive and bulky, where a hand-held device could do little more than tune in AM radio stations.

In November, 1971 Intel placed an ad in Electronic News introducing the 4004, the first commercially-successful microprocessor. "A micro-programmable computer on a chip!" the headline shouted. Though only in my first year of college, I had been fortunate to snag a job as an electronics technician, and none of the engineers I worked with believed the hype. At the time Intel's best effort had resulted in the 1103 DRAM, which stored just 1k bits of data. The leap to a computer on a chip seemed impossible. And so it turned out, as the 4004 needed a variety of extra components before it could actually do anything. But the 4004 heralded a new day in both computers and electronics.

Forty years ago few people had actually seen a computer in person as they were huge and protected by acolytes of operators. Today, no one can see one, to a first approximation, as the devices have become so small.

The history of the micro is really the story of electronics, which is the use of active elements (transistors, tubes, diodes, etc.) to transform signals. And the microcomputer is all about using massive quantities of active elements. But electrical devices - even radios and TV - existed long before electronics.
Mother Nature was the original progenitor of electrical systems. Lightning is merely a return path in a circuit composed by clouds and the atmosphere. Ben Franklin and others in France found, in 1752, that lightning and sparks are the same stuff. Hundreds of years later kids repeat this fundamental experiment when they shuffle across a carpet and zap their unsuspecting friends and parents (the latter are usually holding something expensive and fragile at the time).

Other natural circuits include the electrocytes found in electric eels. Somewhat battery-like, they are composed of thousands of individual "cells," each of which produces 0.15 volts. It's striking how the word "cell" is shared by biology and electronics, unified with particular emphasis in the electrocyte. Alessandro Volta was probably the first to understand that these organic circuits used electricity. Others, notably Luigi Galvani (after whom the galvanic cell is named), mistakenly thought there was some sort of biological fluid involved.

Volta produced the first artificial battery, though some scholars think that the Persians may have invented one thousands of years earlier. About the same time others had built Leyden jars, which were early capacitors. A Leyden jar is a glass bottle with foil on the surface and an inner rod. I suspect it wasn't long before natural philosophers (proto-scientists) learned to charge the jar and zap their kids. Polymath Ben Franklin, before he got busy with forming a new country and all that, wired jars in series and called the result a "battery," from the military term, which is the first use of that word in the electrical arena.

Many others contributed to the understanding of the strange effects of electricity. Joseph Henry showed that wire coiled tightly around an iron core greatly improved the electromagnet.
That required insulated wire long before Digi-Key existed, so he reputedly wrapped silk ripped from his long-suffering wife's wedding dress around the bare copper. This led directly to the invention of the telegraph.

Wives weren't the only ones to suffer in the long quest to understand electricity. In 1746 Jean-Antoine Nollet wired 200 monks in a mile-long circle and zapped them with a battery of Leyden jars. One can only imagine the reaction of the circuit of clerics, but their simultaneous jerking and no doubt not-terribly pious exclamations demonstrated that electricity moved very quickly indeed.

It's hard to pin down the history of the resistor, but Georg Ohm published his findings that we now understand as Ohm's Law in 1827. So the three basic passive elements -- resistor, capacitor and inductor -- were understood at least in general form in the early 19th century. Amazingly it wasn't till 1971 that Leon Chua realized a fourth device, the memristor, was needed to have a complete set of components, and another four decades elapsed before one was built.

Michael Faraday built the first motors in 1821, but it wasn't until the 1860s that James Clerk Maxwell figured out the details of the relationship between electricity and magnetism; 150 years later his formulas still torment electrical engineering students. Faraday's investigations into induction also resulted in his creation of the dynamo. It's somehow satisfying that this genius completed the loop, building both power consumers and power producers.

None of these inventions and discoveries affected the common person until the commercialization of the telegraph. Many people contributed to that device, but Samuel Morse is the best known. He and Alfred Vail also, critically, developed a coding scheme - Morse Code - that allowed long messages to be transmitted over a single circuit, rather like modern serial data transmission. Today's Morse code resembles the original version but there are substantial differences.
SOS was dit-dit-dit dit-dit dit-dit-dit instead of today's dit-dit-dit dah-dah-dah dit-dit-dit.

The telegraph may have been the first killer app. Within a decade of its commercialization over 20,000 miles of telegraph wire had been strung in the US, and the cost to send messages followed a Moore's Law-like curve.

The oceans were great barriers in these pre-radio days, but through truly heroic efforts Cyrus Field and his associates laid the first transatlantic cable in 1858. Consider the problems faced: with neither active elements nor amplifiers a wire 2000 miles long, submerged thousands of feet below the surface, had to faithfully transmit a signal. Two ships set out and met mid-ocean to splice their respective ends together. Sans GPS they relied on celestial sights to find each other. Without radio-supplied time ticks those sights were suspect (four seconds of error in time can introduce a mile of error in the position).

William Thomson, later Lord Kelvin, was the technical brains behind the cable. He invented a mirror galvanometer to sense the minuscule signals originating so far away. Thomson was no ivory-tower intellect. He was an engineer (at that point in life) who got his hands dirty. He sailed on the cable-laying expeditions and innovated solutions to the problems encountered.

While at a party celebrating the success, Field was notified that the cable had failed. He didn't spoil the fun with that bit of bad news. It seems a zealous engineer thought if a little voltage was good, 2000 volts would be better. The cable fried. This was neither the first nor the last time an engineer destroyed a perfectly functional piece of equipment in an effort to improve it.

Amazingly, radio existed in those pre-electronic days. The Titanic's radio operators sent their ... --- ... with a spark gap transmitter, a very simple design that used arcing contacts to stimulate a resonant circuit.
The analogy to a modern AM transmitter isn't too strained: today, we'd use a transistor switching rapidly to excite an LC network. The Titanic's LC components resonated as the spark rapidly formed, creating a low-impedance conductive path, and extinguished. The resulting emission is not much different from the EMI caused by lightning. The result was a very ugly wide-bandwidth signal... and the legacy of calling shipboard radio operators "sparks."

TV, of a sort, was possible, though it's not clear if it was actually implemented. Around 1884 Paul Nipkow conceived of a spinning disk with a series of holes arranged in a spiral to scan a scene. In high school I built a Nipkow Disk, though I used a photomultiplier to sense the image and send it to TTL logic that reconstructed the picture on an oscilloscope. The images were crude, but recognizable.

The next killer app was the telephone, another invention with a complex and checkered history. But wait -- there's a common theme here, or even two. What moved these proto-electronic products from curiosity to wide acceptance was the notion of communications. Today it's SMS and social networking; in the 19th century it was the telegraph and telephone. But it seems that as soon as any sort of communications tech was invented, from smoke signals to the Internet, people were immediately as enamored with it as any of today's cell-phone-obsessed teenagers.

The other theme is that each of these technologies suffered from signal losses and noise. They all cried out for some new discovery that could amplify, shape and improve the flow of electrons. Happily, in the last couple of decades of the 1800s inventors were scrambling to perfect such a device. They just didn't know it.
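As a small illustration of the comparison between Morse code and serial data transmission made in Part 1, the sketch below spells SOS in today's International Morse and then renders it as an on/off keying stream. The timing rule (a dit is one unit of key-down, a dah is three, with one unit of silence between marks and three between letters) is the standard International Morse convention rather than anything stated in this article, and the names MORSE, spoken and keyed are made up for the example. Only the letters S and O are covered.

```python
# International Morse patterns for the two letters used here (not the
# original American Morse, in which O was sent differently).
MORSE = {"S": "...", "O": "---"}

SPOKEN = {".": "dit", "-": "dah"}   # how each mark is read aloud
KEYED = {".": "1", "-": "111"}      # key-down time in units (assumed timing)


def spoken(message):
    """Spell a message the way the article writes it, e.g. dit-dit-dit."""
    return " ".join(
        "-".join(SPOKEN[mark] for mark in MORSE[letter])
        for letter in message.upper()
    )


def keyed(message):
    """Render a message as a bit stream: 1 = key down, 0 = key up.

    One unit of silence separates marks inside a letter and three
    units separate letters.
    """
    letters = [
        "0".join(KEYED[mark] for mark in MORSE[letter])
        for letter in message.upper()
    ]
    return "000".join(letters)


if __name__ == "__main__":
    print(spoken("SOS"))
    print(keyed("SOS"))
```

Seen this way a Morse message is a self-clocked serial stream on a single circuit, with the gaps doing the job that framing bits do in a modern UART.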

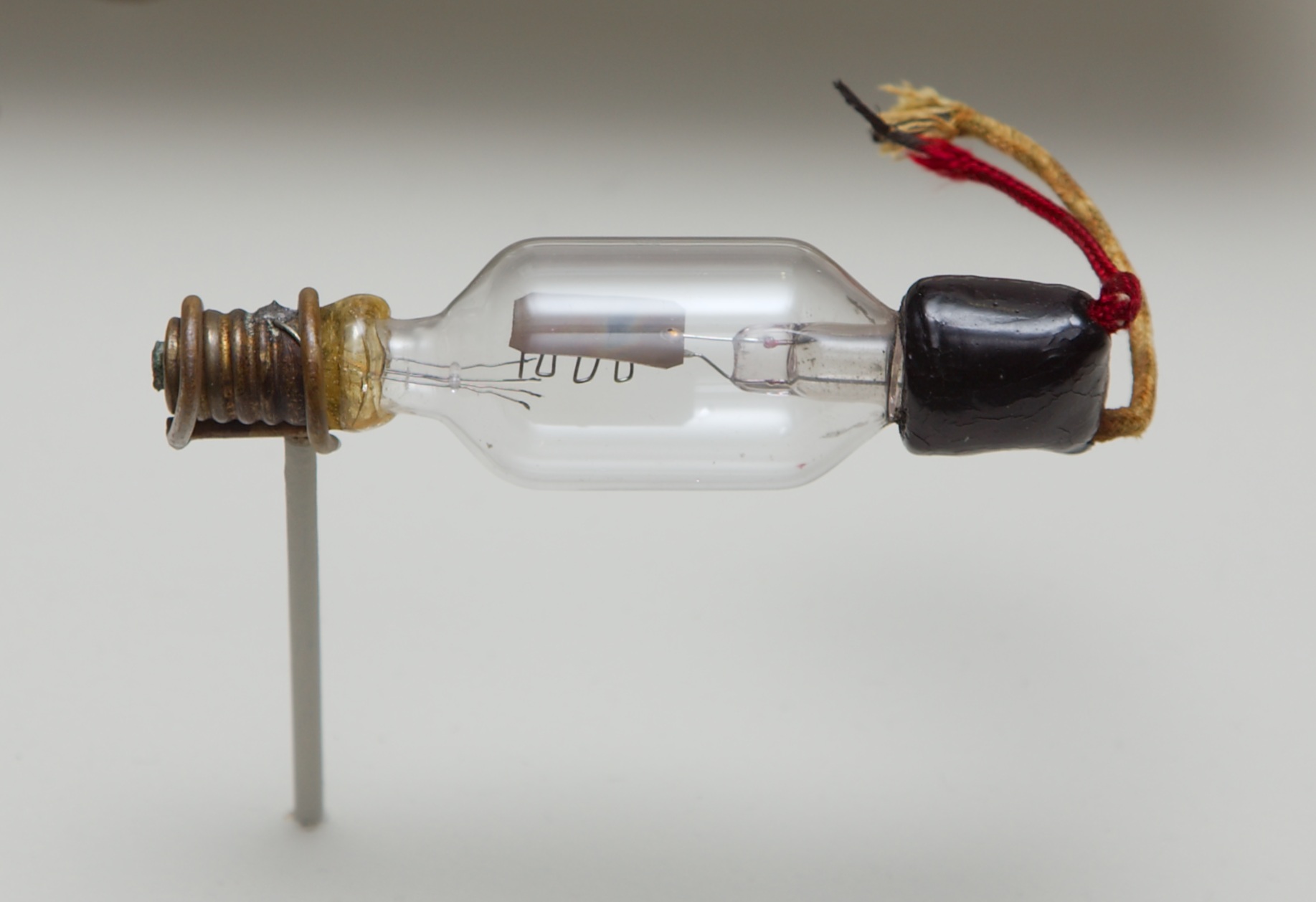

Part 2: From Light Bulbs to Computers

Where a calculator on the ENIAC is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1,000 vacuum tubes and perhaps weigh 1-1/2 tons. - Popular Mechanics, 1949

Thomas Edison raced other inventors to develop the first practical electric light bulb, a rather bold undertaking considering there were neither power plants nor electrical wiring to support lighting. In the early 1880s his bulbs glowed, but the glass quickly blackened. Trying to understand the effect, he inserted a third element and found that current flowed in the space between the filament and the electrode. It stopped when he reversed the polarity. Though he was clueless about what was going on - it wasn't until 1897 that J. J. Thomson discovered the electron - he filed for a patent and set the idea aside. Patent 307,031 was for the first electronic device in the United States. Edison had invented the diode.

Which lay dormant for decades. True, Ambrose Fleming did revive the idea and found applications for it, but no market appeared.

In the first decade of the new century Lee de Forest inserted a grid between the anode and cathode, creating what he called an Audion. With this new control element a circuit could amplify, oscillate and switch, the basic operations of electronics. Now engineers could create radios of fantastic sensitivity, send voices over tens of thousands of miles of cable, and switch ones and zeroes in microseconds.

The vacuum tube was the first active element, and its invention was the beginning of electronics. Active elements are the core technology of every electronic product. The tube, the transistor, and, I believe, now the microprocessor are the active elements that transformed the world over the last century.

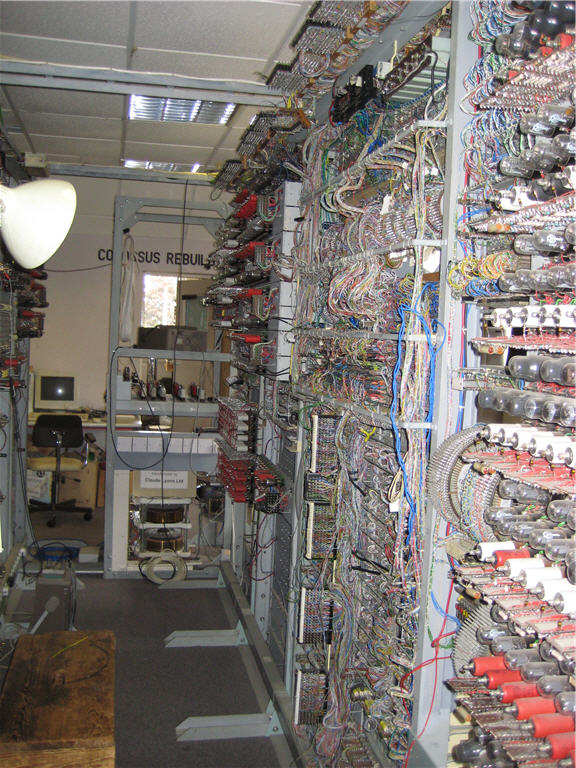

The Audion

Though the tube was a stunning achievement, it was useless in isolation. De Forest did create amplifiers and other circuits using tubes. But the brilliant Edwin Armstrong was probably the most seminal early inventor of electronic circuits. Though many of his patents were challenged and credit was often given to others, Armstrong was the most prolific of the early radio designers. His inventions included both the regenerative and super-regenerative receivers, the superheterodyne (a truly innovative approach used to this day), and FM.

As radio was yet another communications technology, not unlike SMS today, demand soared as it always does for these killer apps. Western Electric made the VT1, one of the first commercial tubes. In 2014 dollars they were a hundred bucks a pop. But war is good for technology; in the four years of World War I Western Electric alone produced a half million tubes for the US Army. By 1918 over a million a year were being made in the US, more than fifty times the pre-conflict numbers, and prices quickly fell. Just as cheaper semiconductors always open new markets, falling tube prices meant radios became practical consumer devices.

Start an Internet publication and no one will read it until there's "content." This is hardly a new concept; radio had little appeal to consumers unless there were radio shows. The first regularly-scheduled broadcasts started in 1919. There were few listeners, but with the growth of broadcasters demand soared. RCA sold the earliest consumer superheterodyne radio in 1924; 148,000 flew off the shelves in the very first year. By the crash in 1929 radios were common fixtures in American households, and were often the center of evening life for the family, rather like TV used to be until mobile devices became the only reason for teenagers to exist.

Nearly until the start of World War II radios were about the most complex pieces of electronics available.
An example is RCA's superb RBC-1 single-conversion receiver which had all of 19 tubes. But tubes wore out, they could break when subjected to a little physical stress, and they ran hot. It was felt that a system with more than a few dozen would be impractically unreliable.

In the 1930s it became apparent that conflict was inevitable. Governments drove research into war needs, resulting in what I believe is one of the most important contributions to electronic digital computers, and a natural extension of radio technology: RADAR.

The US Army fielded its first RADAR apparatus in 1940. The SCR-268 had 110 tubes... and it worked. At the time tubes had a lifetime of a year or so, so one would fail every few days in each RADAR set. (Set is perhaps the wrong word for a system that weighed 40,000 kg and required six operators.) Over 3000 SCR-268s were produced.

Ironically, Sir Henry Tizard arrived in the US from Britain with the first useful cavity magnetron the same year the SCR-268 went into production. That tube revolutionized RADAR. By war's end the 10 cm wavelength SCR-584 was in production (1700 were manufactured) using 400 vacuum tubes. The engineers at MIT's Rad Lab had shown that large electronic circuits were not only practical, they could be manufactured in quantity and survive combat conditions.

Like all major inventions computers had many fathers -- and some mothers. Rooms packed with men manually performed calculations in lockstep to produce ballistics tables and the like; these gentlemen were known as "computers." But WWI pushed most of the men into uniform, so women were recruited to perform the calculations. Many mechanical machines were created by all sorts of inventive people like Babbage and Konrad Zuse. But about the same time the Rad Lab was doing its magnetron magic, what was probably the first electronic digital computer was built.
The Atanasoff-Berry computer was fired up in 1942, used about 300 tubes, was not programmable, and though it did work, was quickly discarded.

Meanwhile the Germans were sinking ships faster than the Allies could build replacements, in the single month of June, 1942 sending 800,000 tons to the sea floor. Britain was starving and looked doomed. The Allies were intercepting much of the Wehrmacht's signal traffic, but it was encrypted using a variety of cryptography machines, the Enigma being the most famous. The story of the breaking of these codes is very long and fascinating, and owes much to pre-war work done by Polish mathematicians, as well as captured secret material from two U-boats.

The British set up a code-breaking operation at Bletchley Park where they built a variety of machines to aid their efforts. An electro-mechanical machine called the Heath Robinson (named after a cartoonist who drew very complex devices meant to accomplish simple tasks, a la Rube Goldberg) helped break the "Tunny" code produced by the German Lorenz ciphering machine. But the Heath Robinson was slow and cranky.

Tommy Flowers realized that a fully electronic machine would be both faster and more reliable. He figured the machine would have between 1,000 and 2,000 tubes, and despite the advances being made in large electronic RADAR systems few thought such a massive machine could work. But Flowers realized that a big cause of failures was the thermal shock tubes encountered on power cycles, so planned to leave his machine on all of the time.

The result was Colossus, a 1,600 tube behemoth that immediately doubled the code breakers' speed. It was delivered in January of 1944. Those who were formerly hostile to Flowers's idea were so astonished they ordered four more in March. A month later they were demanding a dozen.

Colossus didn't break the code; instead it compared the encrypted message with another data stream to find likely settings of the encoding machine.
It was probably the first programmable electronic computer. Programmable by patch cables and switches, it didn't bear much resemblance to today's stored program machines. Unlike the Atanasoff-Berry machine the Colossi were useful, and were essential to the winning of the war.

Churchill strove to keep the Colossus secret and ordered that all be broken into pieces no bigger than a man's hand, so nearly 30 years slipped by before its story came out. Despite a dearth of drawings, though, a working replica has been constructed and is on display at the National Museum of Computing at Bletchley Park, a site on the "must visit" list for any engineer. A rope barrier isolates visitors from the machine's internals, but it's not hard to chat up the staff and get invited to walk inside the machine. That's right -- inside. These old systems were huge.
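The reliability fear that dogged these early machines is easy to put rough numbers on. The sketch below takes the one-year tube lifetime quoted earlier for the SCR-268 era and, as a simplification not found in the article, treats tubes as failing independently at a constant rate, so a machine with N tubes sees a failure about every 365/N days. The function name days_between_failures is hypothetical.

```python
def days_between_failures(tube_count, tube_life_days=365.0):
    """Naive estimate of how often a machine loses a tube.

    Assumes every tube fails independently at a constant rate, so the
    failure rates simply add up across the whole machine.
    """
    return tube_life_days / tube_count


if __name__ == "__main__":
    # Tube counts as given in the text above.
    machines = [("SCR-268", 110), ("SCR-584", 400), ("Colossus", 1600)]
    for name, tubes in machines:
        days = days_between_failures(tubes)
        print(f"{name}: about one failure every {days:.1f} days "
              f"({days * 24:.1f} hours)")
```

For the 110-tube SCR-268 this gives roughly 3.3 days, in line with "one would fail every few days." The same naive model predicts a failure every five and a half hours or so for a 1,600 tube machine, which hints at why the decision to avoid power cycling mattered. Real tube lifetimes varied widely, so these are order-of-magnitude figures only.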

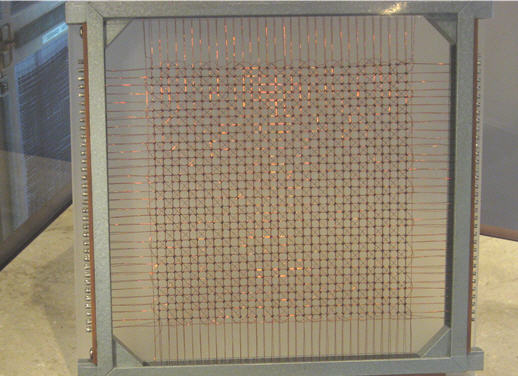

Inside the Colossus replica

Meanwhile, here in the colonies John Mauchly and J. Presper Eckert were building the ENIAC, a general-purpose monster of a machine containing nearly 18,000 vacuum tubes. It weighed 30 tons, consumed 150 kW of electricity, and had five million hand-soldered joints. ENIAC's purpose was to compute artillery firing tables, which it accelerated by three orders of magnitude over other contemporary approaches. ENIAC didn't come on line until the year after the war, but due to the secrecy surrounding Colossus, ENIAC long held the title of the first programmable electronic computer. It, too, used patch panels rather than a stored program, though later improvements gave it a ROM-like store. One source complained it could take "as long as three weeks to reprogram and debug a program." Those were the good old days. Despite the vast number of tubes, according to Eckert the machine suffered a failure only once every two days. That's about the reliability of my Windows machine.

During construction of the ENIAC Mauchly and Eckert proposed a more advanced machine, the EDVAC. It had a von Neumann architecture (stored program), called that because John von Neumann, a consultant to the Moore School where the ENIAC was built, had written a report about EDVAC summarizing its design, and hadn't bothered to credit Mauchly or Eckert for the idea. Whether this was deliberate or a mistake (the report was never completed, and may have been circulated without von Neumann's knowledge) remains unknown, though much bitterness resulted.

(In an eerily parallel case the ENIAC was the source of patent bitterness. Mauchly and Eckert had filed for a patent for the machine in 1947, but in the late 1960s Honeywell sued over its validity. John Atanasoff testified that Mauchly had appropriated ideas from the Atanasoff-Berry machine. Ultimately the court ruled that the patent was invalid. Computer historians still debate the verdict.)
Meanwhile British engineers Freddie Williams and Tom Kilburn developed a CRT that could store data. The Williams tube they built was the first random access digital memory device. But how does one test such a product? The answer: build a computer around it. In 1948 the Small Scale Experimental Machine, nicknamed "The Baby," went into operation. It used three Williams tubes, one being the main store (32 words of 32 bits each) and two for registers. Though not meant as a production machine, The Baby was the first stored program electronic digital computer. It is sometimes called the Mark 1 Prototype, as the ideas were quickly folded into the Manchester Mark 1, the first practical stored-program machine. That morphed into the Ferranti Mark 1, which was the first commercial digital computer.

I'd argue that the circa 1951 Whirlwind computer was the next critical development. Whirlwind was a parallel machine in a day when most computers operated in bit-serial mode to reduce the number of active elements. Though it originally used Williams tubes, these were slow, so the Whirlwind was converted to use core memory, the first time core was incorporated into a computer. Core dominated the memory industry until large semiconductor devices became available in the 1970s. Early core cost about $10 per bit in 2014 dollars.

Whirlwind core memory at the Computer History Museum. Whirlwind had 16 of these 32x32 planes for a total of 16 kb of memory.

Whirlwind's other important legacy is that it was a real-time machine, and it demonstrated that a computer could handle RADAR data. Whirlwind's tests convinced the Air Force that computers could be used to track and intercept cold-war enemy bombers. The government, never loath to start huge projects, contracted with IBM and MIT to build the Semi-Automatic Ground Environment (SAGE), based on the 32-bit AN/FSQ-7 computer.

SAGE was the largest computer ever constructed, each installation using over 100,000 vacuum tubes and a half acre of floor space. Twenty-six such systems were built, and unlike so many huge programs, SAGE was delivered and used until 1983. The irony is that by the time SAGE came on-line in 1963 the Soviets' new ICBM fleet made the system mostly useless.

For billions of years Mother Nature plied her electrical wiles. A couple of thousand years ago the Greeks developed theories about electricity, most of which were wrong. With the Enlightenment natural philosophers generated solid reasoning, backed up by experimental results, that exposed the true nature of electrons and their behavior. In only the last flicker of human existence has that knowledge been translated into the electronics revolution, possibly the defining characteristic of the 20th century.

Coming in the next Muse: The Semiconductor Revolution. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intents of this newsletter. Please keep it to 100 words. |

||||

| Joke For The Week | ||||

Note: These jokes are archived at www.ganssle.com/jokes.htm. LR sent this: 1) Naming things. |

||||

| Advertise With Us | ||||

Advertise in The Embedded Muse! Over 23,000 embedded developers get this twice-monthly publication. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |