|

||||||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||||||

| Contents | ||||||||||

| Editor's Notes | ||||||||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me. |

||||||||||

| Quotes and Thoughts | ||||||||||

"You will say that I am always conjuring up awful difficulties & consequences - my answer to this is it is an important part of the duty of an engineer." Robert Stephenson, the engineer of the Britannia Bridge. |

||||||||||

| Tools and Tips | ||||||||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Here's Niall Cooling's in-depth look at Arm Cortex-M Intel-Hex (ihex) files. Volatile is a critical keyword for embedded developers. Here's a short but useful article about it. Martin Glunz wrote about SSDs and speed improvements:

|

||||||||||

| Freebies and Discounts | ||||||||||

The folks at Joulescope kindly sent us a couple of their Joulescopes for this month's giveaway. The Joulescope is a great tool for monitoring energy use in IoT devices. A review is below. Enter via this link. |

||||||||||

| Saving Tools for the Long Time | ||||||||||

Readers had some more feedback about preserving toolchains. Will Cooke wrote:

Robert Ferrante is one of many using VMs:

Ditto for Alex Barbur:

Here are Helmut Tischer's suggestions:

Martin Glunz wrote:

|

||||||||||

| Joulescope Review | ||||||||||

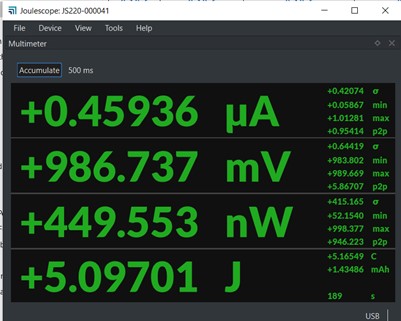

Remember the massive electrical feeds that were needed to run old-time mainframe computers? Megawatts yielded a few MFLOPs. How astonishing it is that today we can run a computer from a coin cell for months or years! So many embedded systems now run from the most minimal of power sources. But as I detail here (https://www.ganssle.com/reports/ultra-low-power-design.html), designing a low-power system involves far more tradeoffs and issues than most engineers realize. It’s hard to design low-power systems.

That suggests that wise engineers will characterize their products by measuring actual current and energy needs. But this is not easy! Asleep, the system might consume nanoamps. Awake, that zooms to milliamps or even amps. The dynamic range of the measurement is huge. You can’t simply put a sense resistor in the power line and measure voltage drop, as the resistance will need to be very high to sense sleep currents and very small to avoid voltage drops when the system is active.

Enter the Joulescope JS220, an instrument that can assess a system’s power needs. Though it has a number of features, two that are critical for us are:

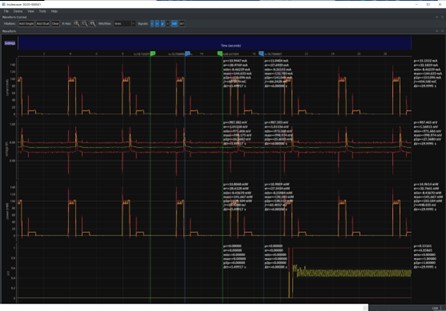

It’s a small, nicely-engineered device that fits the palm of your hand, and is packaged in an elegant zippered case. Its associated software runs under Windows, Linux and macOS. Unlike so many other tools, the user manual is very complete and well-written.

The primary specs are: 300 kHz bandwidth on V and I measurements with a 16-bit ADC (15.1 ENOB). Max of ±15 V and 3 A continuous, 10 A pulsed (the duty cycle, though, isn't specified). Resolution (typical) is an astonishing 0.5 nA.

This is the business end of the unit. It provides terminals to monitor current and voltage:

The unit also has 4 GPIOs and a trigger, all of which can be configured as inputs or outputs. These signals let you correlate device-under-test activity with the Joulescope’s display.

I could write many words describing the instrument’s operation, but a couple of pictures will serve much better. Here is the unit’s “multimeter” display:

As shown, the Joulescope displays current, voltage, power and energy. Too many instruments that purport to measure a DUT’s “power” actually show current. Power is volts times amps, and it’s sloppy to conflate the two. Energy is the integral of power over time. Showing power is nice, though perhaps not terribly important given V and I; energy, however, is something we can’t derive from a conventional DMM’s results. This is an important parameter. Also important are the standard deviations and min/max readings shown to the right. “p2p” is peak-to-peak, and, while nice to see, is pretty obvious from the min/max.

The Joulescope also has an “oscilloscope” display:

There’s a lot of info on this screen! V and I, of course, plotted over time. Statistics are displayed off to the right, including the integral (represented by the integral sign). While the multimeter display shows energy in joules, the oscilloscope view presents the integral in coulombs, which is charge rather than energy; multiply by the voltage to get joules, since 1 joule is 1 coulomb-volt.

On the bottom there’s a GPIO configured as an input. Want to know your system’s current profile as it powers up an external device? The GPIO display can sync the instrument’s display to that action. Also shown are cursors with associated statistics.

I can’t think of a feature to add. The Joulescope is the best tool I've tried for measuring power and energy use in an embedded system. At $999 the price is right.

Engineering is all about predicting how your system will perform, building that system, and then measuring its behavior to ensure it meets your predictions. Leave out the last step and it’s no longer engineering, it’s art. In my opinion, building a battery-operated embedded system without profiling its energy needs is professional malpractice. |
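To make the relationship between power, energy and charge in the review concrete, here is a minimal Python sketch that integrates sampled voltage and current readings. The function name `integrate_samples`, the fixed sample interval `dt`, and the simple rectangular sum are all illustrative assumptions; this is not how the Joulescope software computes its statistics.

```python
def integrate_samples(voltages, currents, dt):
    """Return (energy_joules, charge_coulombs) for evenly spaced samples.

    voltages and currents are equal-length sequences in volts and amps;
    dt is the (assumed constant) time between samples in seconds.
    """
    if len(voltages) != len(currents):
        raise ValueError("voltage and current sample counts differ")
    # Charge is the integral of current over time.
    charge = sum(i * dt for i in currents)
    # Energy is the integral of power (volts times amps) over time.
    energy = sum(v * i * dt for v, i in zip(voltages, currents))
    return energy, charge


# Hypothetical data: a steady 3 V supply delivering 1 mA for one second,
# sampled every millisecond.
volts = [3.0] * 1000
amps = [0.001] * 1000
energy_j, charge_c = integrate_samples(volts, amps, 0.001)
print(energy_j, charge_c)  # about 0.003 J and 0.001 C
```

With a constant supply voltage the two results differ only by that voltage, which is the joule versus coulomb-volt relationship mentioned above.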

||||||||||

| Sine and Cosine | ||||||||||

Jean-Christophe Mathae sent an efficient algorithm for generating a sine wave series - that is, to create a sine wave, rather than calculating sin(x).

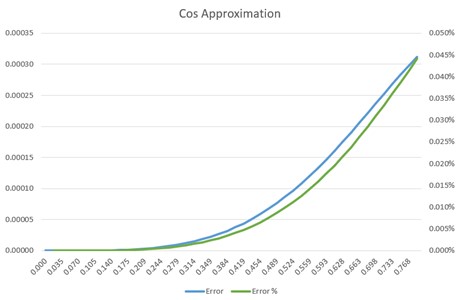
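For readers who want to experiment, here is a minimal Python sketch of one well-known way to generate a sine series without calling sin() for every sample: the two-term recurrence y[n] = 2·cos(w)·y[n-1] - y[n-2]. This is an assumption on my part for illustration and is not necessarily the algorithm Jean-Christophe sent; the function name `sine_series` and its parameters are hypothetical.

```python
import math


def sine_series(n_samples, samples_per_cycle, amplitude=1.0):
    """Generate n_samples of a sine wave using a two-term recurrence.

    Only one sin() and one cos() call are needed for setup; each new
    sample then costs one multiply and one subtract.
    """
    w = 2.0 * math.pi / samples_per_cycle  # phase step per sample
    k = 2.0 * math.cos(w)                  # recurrence coefficient
    prev = -amplitude * math.sin(w)        # y[-1] = A*sin(-w)
    cur = 0.0                              # y[0]  = A*sin(0)
    out = []
    for _ in range(n_samples):
        out.append(cur)
        # y[n+1] = 2*cos(w)*y[n] - y[n-1]
        prev, cur = cur, k * cur - prev
    return out


samples = sine_series(64, 32)  # two full cycles, 32 samples per cycle
```

Rounding error accumulates slowly in a recurrence like this, so a long-running generator may need to be re-seeded periodically.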

To carry the approximation theme in Muse 456 a little further, here's one for cosine. Now, the deal with approximations is you can attain any accuracy you'd like given a small enough range of input values and a long-enough polynomial approximator. However, if you're after a fast algorithm you necessarily have to give up input range. Happily, sine and cosine are symmetrical so it's easy to do range reduction to fit an approximation that is good over a small range to the entire 0 to 2π (0 to 360°) circle. Range reduction uses these relations (angles in degrees):

sin(α) = cos(α - 90) = -sin(α - 180) = -cos(α - 270)

cos(α) = -sin(α - 90) = -cos(α - 180) = sin(α - 270)
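The range-reduction relations can be sketched in Python as follows. This is a minimal illustration, assuming core routines that are only valid from 0 to 90°; here `core_sin` and `core_cos` are hypothetical stand-ins that simply call the math library, where a real implementation would substitute its own short polynomial approximations.

```python
import math


def core_sin(deg):
    """Stand-in for an approximation valid only on 0 <= deg < 90."""
    return math.sin(math.radians(deg))


def core_cos(deg):
    """Stand-in for an approximation valid only on 0 <= deg < 90."""
    return math.cos(math.radians(deg))


def _reduce(deg):
    """Split any angle into (quadrant 0..3, remainder in [0, 90))."""
    q, r = divmod(deg % 360.0, 90.0)
    return int(q) % 4, r


def sin_reduced(deg):
    q, r = _reduce(deg)
    if q == 0:
        return core_sin(r)
    if q == 1:
        return core_cos(r)    # sin(a) =  cos(a - 90)
    if q == 2:
        return -core_sin(r)   # sin(a) = -sin(a - 180)
    return -core_cos(r)       # sin(a) = -cos(a - 270)


def cos_reduced(deg):
    q, r = _reduce(deg)
    if q == 0:
        return core_cos(r)
    if q == 1:
        return -core_sin(r)   # cos(a) = -sin(a - 90)
    if q == 2:
        return -core_cos(r)   # cos(a) = -cos(a - 180)
    return core_sin(r)        # cos(a) =  sin(a - 270)
```

Each quadrant maps back to the first one using exactly one of the relations, so the core routines never see an angle outside 0 to 90°.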

Here's a nifty cosine routine for the range 0 to π/4 (0 to 45°). The input is in radians:

cos(α) = 1 + α*α * (-0.5 + 0.04 * α*α)

Obviously, alpha squared can be computed once and reused. You can scale the coefficients and make this a very fast integer cosine. It's pretty accurate, too: |
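If you'd like to check the accuracy yourself, here is a minimal Python sketch of the routine in floating point, plus one possible scaled-integer variant. The Q15 scaling (values multiplied by 32768), the rounded coefficient 1311 for 0.04, and the function names are my illustrative assumptions; other scalings would work just as well.

```python
import math


def cos_approx(x):
    """cos(x) for 0 <= x <= pi/4 radians, using the polynomial above."""
    x2 = x * x  # alpha squared, computed once and reused
    return 1.0 + x2 * (-0.5 + 0.04 * x2)


Q15_ONE = 1 << 15  # 1.0 in the assumed Q15 fixed-point format


def cos_approx_q15(x_q15):
    """Integer-only version: input and output are scaled by 32768."""
    x2 = (x_q15 * x_q15) >> 15             # alpha squared in Q15
    inner = -(Q15_ONE >> 1) + ((1311 * x2) >> 15)  # -0.5 + 0.04*x2
    return Q15_ONE + ((x2 * inner) >> 15)


def max_error(steps=1000):
    """Largest absolute error of cos_approx over 0 to pi/4."""
    worst = 0.0
    for n in range(steps + 1):
        x = (math.pi / 4.0) * n / steps
        worst = max(worst, abs(cos_approx(x) - math.cos(x)))
    return worst


print(max_error())  # worst case is at 45 degrees, below 0.0005
```

The integer version trades a few extra least-significant bits of error for avoiding floating point entirely, which is often the point on a small microcontroller.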

||||||||||

| On Sending Data to the Cloud | ||||||||||

Johan Kraft had some feedback about posting debug data to the cloud:

|

||||||||||

| Failure of the Week | ||||||||||

From John Sloan:

From Emerson Beserra:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

||||||||||

| Jobs! | ||||||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||||||||

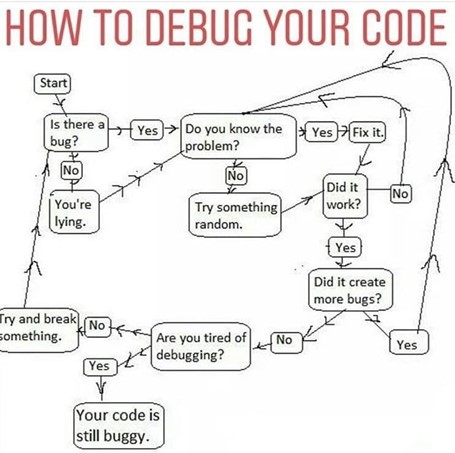

| Joke For The Week | ||||||||||

These jokes are archived here. From Steve Bresson:

|

||||||||||

| About The Embedded Muse | ||||||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |