|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me. There's an interesting article about Ted Hoff, inventor of the 4004, here. |

||||

| Quotes and Thoughts | ||||

Scientists dream about doing great things. Engineers do them. James A. Michener |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Steve King had an interesting point about sending debug data to the cloud:

Jakob Engblom had a contrary opinion about subscription-based tools:

Here's a nice intro to the Rust programming language. |

||||

| Freebies and Discounts | ||||

Kaiwan Billimoria kindly sent us three copies of his new book Linux Kernel Debugging for this month's contest. It's a massive tome, at 600+ pages, that anyone working on Linux internals would profit from. Enter via this link. |

||||

| Math Approximations | ||||

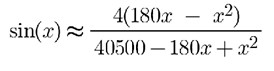

I'm fascinated by approximations. In the embedded world we often aren't running a monster machine that has built-in trig or even floating point. I've written quite a bit about floating point approximations to trig and other functions, but what happens when you need a fast sine or something similar, with limited precision? It's often possible to stick with integer math to get a pretty decent approximation. Though a lookup table is the fastest approach, those tend to eat a lot of memory.

I recently stumbled across a couple of fun approximations you might find useful. First, did you know that 355/113 is very close to pi? It's 3.1415929, not far from the nearly-true value of 3.1415926.

How about e, the base of the natural logs? Try 271801/99990, which is 2.718281828. Or, there's 3020/1111 which is 2.71827.

Trig functions are a little more complicated, but here's a cool approximation for the sine, using degrees as the input argument:

(Obviously, this would have to be normalized to your range of integers). This is valid over the range of 0 to 180 degrees. Errors from actual values are small. This graph shows the absolute errors in blue and percentage error in orange:

You can calculate 180x and x² once, then use them twice.

Cosine is just sine shifted by 90 degrees. Be warned: as mentioned, the sine approximation is accurate only to 180 degrees; after that errors are large. If you compute cos=sin(x+90) be sure x never exceeds 90, as the result won't make sense. Reduce x to 0 to 90 degrees (i.e., the first quadrant) using ideas from here.

If you're working in radians and can constrain the input argument to 0 to 0.35 radians (0 to 20 degrees) then an even faster algorithm is: sin(x)=x. That's accurate to about 2% over that range. |
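The sine equation itself does not appear in the text above. One well-known approximation that fits the description (degrees in, valid from 0 to 180, with 180x and x² each used twice) is Bhaskara I's formula, sin(x) ≈ 4x(180 − x) / (40500 − x(180 − x)). Whether that is the exact formula intended here is an assumption. Under that assumption, an integer-only version might look like the sketch below. The function names and the output scaling of 10000 are made up for illustration, and the half-circle reduction in `sin_deg` is one possible way to extend the range, not necessarily the method the linked article uses.

```c
#include <stdint.h>

/* Assumed output scaling: functions return sin(x) * SIN_SCALE. */
#define SIN_SCALE 10000

/* Sine of an angle in whole degrees, valid only for 0..180.
 * Uses Bhaskara I's approximation (an assumption; see the text above). */
static int32_t sin_0_to_180(int32_t deg)
{
    int32_t p   = 180 * deg - deg * deg;  /* 180x - x^2, computed once, used twice */
    int32_t num = 4 * p * SIN_SCALE;      /* at most 324,000,000: fits in 32 bits */
    int32_t den = 40500 - p;              /* never below 32400 in this range */

    return (num + den / 2) / den;         /* divide with rounding to nearest */
}

/* Sine of any angle in whole degrees: fold into 0..359, then use
 * sin(x) = -sin(x - 180) for the second half of the circle. */
int32_t sin_deg(int32_t deg)
{
    deg %= 360;
    if (deg < 0) {
        deg += 360;
    }
    return (deg <= 180) ? sin_0_to_180(deg) : -sin_0_to_180(deg - 180);
}

/* Cosine is sine shifted by 90 degrees; sin_deg() handles the wraparound. */
int32_t cos_deg(int32_t deg)
{
    return sin_deg(deg % 360 + 90);
}
```

With this scaling `sin_deg(30)` returns 5000 and `sin_deg(90)` returns 10000, while `sin_deg(45)` returns 7059 against a true value of about 7071.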

||||

| Fast Clocks Might Not Be Fast | ||||

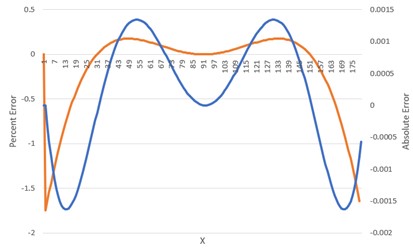

Using a fast algorithm like the one above is one way to gain performance. Another is to beef up the hardware. But that's not always as simple as one might hope. Often doubling the clock rate of a CPU won't gain a lot of improvement. Memory is a bottleneck. Consider this warning from one MCU datasheet: A processor that executes instructions in a single cycle may require many wait states at higher clock rates. While certain aspects of performance might improve, you may be very disappointed with the results. Of course, one could copy the code to fast RAM and run from there, but that comes at the cost of the additional memory. As always, read the datasheets very carefully! |
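To put rough numbers on the wait-state problem, here is a back-of-the-envelope sketch. It assumes a hypothetical part whose flash can be read with zero wait states only up to some maximum frequency, and it ignores caches, prefetch buffers and flash accelerators, all of which a real MCU may have. The function names and the frequencies in the usage note are invented for illustration and do not come from any particular datasheet.

```c
#include <stdint.h>

/* Wait states needed when the CPU clock exceeds the fastest clock at which
 * flash can be read without stalling. Hypothetical model: each flash access
 * takes a whole number of CPU cycles. flash_max_hz must be nonzero, and the
 * sum of the two frequencies must fit in 32 bits. */
uint32_t flash_wait_states(uint32_t cpu_hz, uint32_t flash_max_hz)
{
    /* CPU cycles per flash access, rounded up. */
    uint32_t cycles = (cpu_hz + flash_max_hz - 1) / flash_max_hz;

    return (cycles == 0) ? 0 : cycles - 1;
}

/* Worst-case fetch rate from flash: one access per (1 + wait states) cycles. */
uint32_t worst_case_fetch_hz(uint32_t cpu_hz, uint32_t flash_max_hz)
{
    return cpu_hz / (flash_wait_states(cpu_hz, flash_max_hz) + 1);
}
```

With a 24 MHz zero-wait-state limit, a 48 MHz clock needs one wait state and still fetches at 24 MHz in the worst case. A 100 MHz clock needs four wait states and fetches at only 20 MHz, which is slower than the part running at 24 MHz with no wait states at all.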

||||

| Source Controlling Tools | ||||

Steve Foschino poses a question that's worth pondering.

Any thoughts? I just put a new computer together and wound up downloading the newest versions of the tools I use. But what happens if a tool vendor goes extinct? Or discontinues the products? Or you need an Internet connection to a now-defunct company to register the product before it works?

We have seen this in the past in the embedded space. Back before the dot-com meltdown there was a frenzy of acquisitions; Wind River bought a number of tool vendors, and most of those products are now long gone. (A friend who worked for one of these vendors told me he worked for 5 companies over two months without changing jobs as the businesses were acquired.)

(And, to carry on the subject of speedups from the articles above, I found stunning improvements in the speed of most Windows software by using big NVMe SSDs. However, it's important to spend a few extra bucks to get PCIe versions rather than the SATA parts. Disk-intensive applications benefit from using two such SSDs, one for C: and the other for D:, as the code can ping pong accesses between them. A processor with lots of cores, these SSDs, and plenty of RAM shortened one task from 4 days of compute time to two hours.) |

||||

| Failure of the Week | ||||

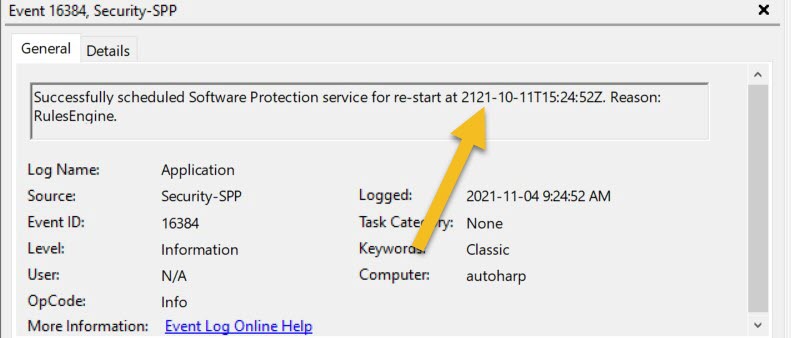

Have you submitted a Failure of the Week? I'm getting a ton of these, and yours has been added to the queue. From Jerry Penner:

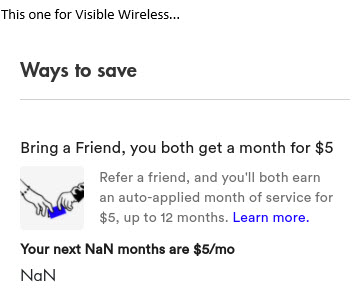

Another NaN from David Rea: |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here. Harold Kraus found TI has a fun 404 message: |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |