
||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||


Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

In the last issue I noted that analysts predicting MCU sales were off by a factor of two. Brian Cuthie wrote: "The other 25 billion are on order. Estimated lead time 200 wks."

||||

| Quotes and Thoughts | ||||

"It is better to be vaguely right than precisely wrong." - John Maynard Keynes

||||

| Tools and Tips | ||||


Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

The ACM has made its library from 1951 to 2000 open to all, for free. Some truly seminal papers are now available.

Many of us were fascinated by Lissajous plots when we first started playing with oscilloscopes (using the instrument's X and Y inputs to generate cool patterns). Time goes by and we wind up using just the vertical channel, with the horizontal axis driven by the scope's time base. This article has some useful ideas about using both X and Y in real-life situations.

||||

| Freebies and Discounts | ||||

The giveaway is on holiday for the summer. |

||||

| Shipping as a Feature - Redux | ||||

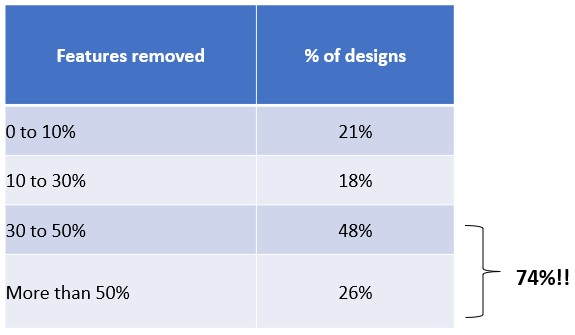

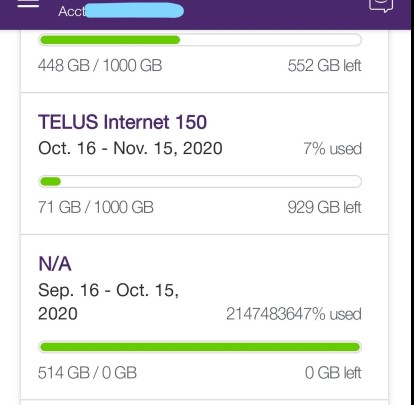

In the last issue I wrote about the oft-neglected notion that shipping is a feature, one whose importance should be understood and managed. Alas, often the ship date arrives almost as a surprise and we scramble to produce a useful product. The following data (acquired in a survey of engineers at an Embedded Systems Conference) is telling:

In other words, 74% of us admit to deleting more than 30% of the required or desired features in a mad panic to ship. Managing feature releases is generally wise, but the sad fact is that many of these deleted goodies were partly implemented. When it's time to send the device out the door we drop work on the uncompleted portions of the code.

Much better: manage the features aggressively, recognizing that the inexorable ship date will come, perhaps too soon. Focus work on those features needed in the initial release.

In this the agile community is brilliant: the product always works. It may not do much, initially, but what it does do, it does perfectly. Features get added, but always under the umbrella of keeping a perfectly functional unit. Theoretically, it's always shippable.

And this is where the agilists are sometimes wrong: the notion of a minimal viable product means we ship when the thing offers a little bit of functionality, in the hope that early users will provide feedback that helps us improve the device. That makes sense when prototyping, when the requirements are largely unknown, and it may also scale to arenas like web development. But in the embedded world we generally have a pretty good idea of what the thing needs to do to be marketable. Shipping half the feature set is usually not an option. I have seen too many groups embrace a minimal viable product as an excuse to not do a proper job of eliciting requirements. Requirements are hard, perhaps one of the hardest parts of our work. That's no excuse to abdicate the effort.

Steve Bresson understands that shipping is a feature; he sent this in response to the article:

And Zach B wrote:


||||

| Where Complexity Fails Us | ||||

Engineering is about numbers. Do you specify a ±5% resistor or ±1%? Do the math! Will all of the signals arrive at the latch at the proper time? A proper analysis will reveal the truth. How hot will that part get? Crunch the numbers and pick the proper heat sink.

Alas, software engineering has been somewhat resistant to such analysis. Software development fads seem more prominent than any sort of careful analysis. Certainly, in the popular press "coding"1 is depicted as an arcane art practiced by gurus using ideas unfathomable to "normal" people. Measure stuff? Do engineering analysis? No, that will cramp our style, eliminate creativity, and demystify our work.

I do think, though, that in too many cases we've abdicated our responsibility as engineers to use numbers where we can. There are things we can and must measure. One example is complexity, most commonly expressed via the McCabe Cyclomatic Complexity metric. A fancy term, it merely means the number of linearly independent paths through a function. A function that consists of nothing more than 20 assignment statements can be traversed exactly one way, so it has a complexity of one. Add a simple if and there are now two directions the code can flow, so the complexity is two.

There are many reasons to measure complexity, not the least of which is to get a numerical view of the function's risk (spoiler: under 10 is considered low risk; over 50, untestable). To me, a more important fallout is that complexity tells us, in a provably-correct manner, the minimum number of tests we must perform on a function to exercise all of its independent paths. Run five tests against a function with a complexity of ten and, for sure, the code is not completely tested. You haven't done your job.

What a stunning result! Instead of testing to exhaustion or boredom we can quantify our tests. Alas, though, it only gives us the minimum number of required tests. The maximum could be a much bigger number. Consider:

`if ((a && b) || (c && d) || (e && f)) ...`
Given that there are only two paths (the if is taken or not taken), this statement has a complexity of 2. But it is composed of a lot of elements, each of which can affect the outcome. A proper test suite needs a lot more than two tests. Here, complexity has let us down; the metric tells us nothing about how many tests to run.

Thus, we need additional strategies. One of the most effective is modified condition/decision coverage (MC/DC). Another fancy term, it means showing that every condition in a decision is exercised and independently affects the decision's outcome. Today some tools offer code coverage: they monitor the execution of your program and tag every statement that has been executed, so you can evaluate your testing. The best offer MC/DC coverage. It's required by the most stringent of the avionics standards (DO-178C Level A), which is partly why airplanes, which are basically flying computers, aren't raining out of the sky.

Use complexity metrics to quantify your code's quality and testing, but recognize their limitations. Augment them with coverage tools.

1 I despise the word "coding." Historically, coding was the most dreary of all activities: the replacement of plain text by encrypted cipher. Low-level functionaries, or even machines, did the work. Maybe "coding" is an appropriate term for script kiddies or HTML taggers. If we are coders, you can be certain that in very short order some AI will replace us. No, we in the firmware world practice2 software engineering: implementing complex ideas in software using the precepts of careful engineering. These include analysis, design, negotiating with customers, implementation, and measurement of our implementations.

2 Bob Dylan got it right: "he not busy being born is busy dying." We should be forever practicing software engineering. Practice: "perform (an activity) or exercise (a skill) repeatedly or regularly in order to improve or maintain one's proficiency." Unless we're constantly striving to improve, we'll be dinosaurs awaiting the comet of our destruction.

||||

| Failure of the Week | ||||

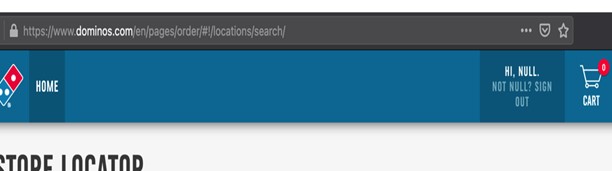

From Manuel Martinez:

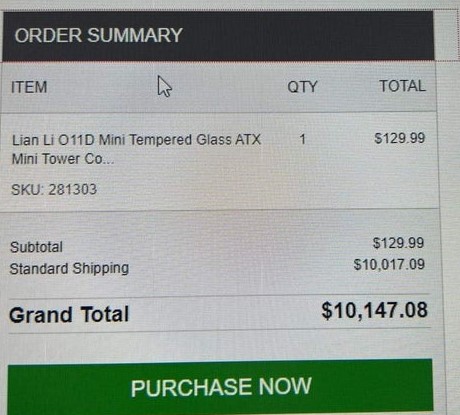

Thomas Younger sent this:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here.

(A fun schematic, but shouldn't the output be at the junction of the final transistors' emitter and collector?)

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |