|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me. |

||||

| Quotes and Thoughts | ||||

Unforeseen issues are often not unforeseeable. Jack's Law of Requirements Elicitation. |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Damian Bonicatto sent this link to a DIY printable oscilloscope probe for hard-to-probe nodes. Pretty cool! |

||||

| Freebies and Discounts | ||||

January's giveaway is a copy of Chris Hallinan's book Embedded Linux Primer. It's an excellent volume for folks working with Linux. I reviewed it here. Enter via this link. |

||||

| Defects Cluster | ||||

I had a lot of off-the-record email discussions with readers about bugs after the last Muse (see the next article for some on-record conversations). A common theme was that "we don't know much about bug rates." Actually, that isn't true. David Akin wrote “Engineering is done with numbers. Analysis without numbers is only an opinion.” While the data is fuzzy and not as pristine as a formula from classical physics (e.g., F=MA), it does paint a picture. Here's one set of numbers:

In other words, defects cluster. A little bit of the code is responsible for most of the problems. Worse, Boehm showed that these problematic modules cost, on average, four times more to develop than their better-behaved brothers. The reason? Debugging. The average team spends about half the schedule in debugging. Makes you wonder if the other half is "bugging."

I admit to having written some crummy code, software that tormented me for weeks. Every time a bug was fixed another appeared. Often you can tell when the code is that poor: the structure is creaky and embarrassing. Changing a comment seems to break things.

Keep a tally of bugs in each function. It can be pretty informal. But that data will quickly and quantitatively show which functions make up the small batch of bad code. When a function's bar in the histogram is out of proportion to everything else, you know it needs attention. Most often that means a total rewrite. Yes, sometimes we blow it and create a monster that's impossible to tame. Likely the design is no good. But now that we understand the problem, rewriting the function will almost always result in a better solution.

If we identify these functions early we'll save money. Remember Boehm's observation that "fixing" a module by trying to beat it into submission costs 4x what good code does. |
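The tally can be as simple as a few lines of script run against whatever bug list you already keep. Here is a minimal sketch in Python. The function names in the log, the `BUG_LOG` format, and the "three times the median" threshold are all assumptions made up for illustration; pick whatever cutoff makes sense for your project.

```python
from collections import Counter
from statistics import median

# Hypothetical bug log: one (function_name, short_note) entry per bug found.
BUG_LOG = [
    ("parse_packet", "off-by-one in length check"),
    ("parse_packet", "bad checksum accepted"),
    ("read_adc", "wrong channel after reset"),
    ("parse_packet", "overrun on long frames"),
    ("init_uart", "baud divisor truncated"),
    ("parse_packet", "state not cleared on timeout"),
    ("read_adc", "sign extension error"),
    ("parse_packet", "escape byte mishandled"),
    ("update_display", "flicker on refresh"),
    ("parse_packet", "CRC computed over wrong span"),
]


def tally_bugs(bug_log):
    """Count the bugs logged against each function."""
    return Counter(name for name, _note in bug_log)


def flag_outliers(tally, factor=3.0):
    """Return functions with at least `factor` times the median bug count.

    The factor is an arbitrary, assumed threshold, not an industry figure.
    """
    if not tally:
        return []
    typical = median(tally.values())
    return sorted(name for name, count in tally.items()
                  if count >= factor * typical)


def print_histogram(tally):
    """Print a crude text histogram, worst offenders first."""
    for name, count in tally.most_common():
        print(f"{name:<16} {'#' * count} ({count})")


if __name__ == "__main__":
    tally = tally_bugs(BUG_LOG)
    print_histogram(tally)
    print("Rewrite candidates:", flag_outliers(tally))
```

With this made-up log, one function collects six of the ten bugs and is the only one flagged. The point is not the exact threshold but that the data, not a hunch, picks the rewrite candidates.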

||||

| More on Requirements and Bugs | ||||

Readers had comments about the articles in the last Muse about bugs and requirements. Stuart Jobbins wrote:

Tony Ozrelic:

|

||||

| Three Rules of Requirements | ||||

Some years ago, at a conference in Mexico, I attended a talk Steve Tockey gave about requirements. He very ably and succinctly summed up the rules for requirements, which I’ll paraphrase here:

The last constraint shows how many so-called requirements are merely vague and useless statements that should be pruned from the requirements document. For instance, “the machine shall be fast” is not testable, and is therefore not a requirement. Neither is any unmeasurable statement about user-friendliness or maintainability. An interesting corollary is that reliability is, at the very least, a difficult concept to wrap into the requirements since “bug-free” or any other proof-of-a-negative is hard or impossible to measure. In high-reliability applications it’s common to measure the software engineering process itself, and to buttress the odds of correctness by using safe languages, avoiding unqualified SOUP, or even using formal methods (actually, the latter is not a common practice, but is one that has gained some traction). |
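One way to see the difference between a wish and a requirement is to try writing the test. "The machine shall be fast" gives you nothing to check, while a measurable version can be turned into a pass/fail function. A sketch in Python, assuming a hypothetical requirement that at least 99% of responses complete within 50 ms; the numbers and names are invented for illustration and do not come from Tockey's talk.

```python
def meets_latency_requirement(samples_ms, limit_ms=50.0, required_fraction=0.99):
    """Check measured response times against a hypothetical requirement:
    at least `required_fraction` of responses take no more than `limit_ms`.
    """
    if not samples_ms:
        # With no measurements the requirement can't be verified either way.
        raise ValueError("no measurements supplied")
    within_limit = sum(1 for sample in samples_ms if sample <= limit_ms)
    return within_limit / len(samples_ms) >= required_fraction


if __name__ == "__main__":
    # Made-up measurements, in milliseconds.
    good_run = [12.0] * 100
    bad_run = [12.0] * 95 + [80.0] * 5
    print("good run passes:", meets_latency_requirement(good_run))
    print("bad run passes:", meets_latency_requirement(bad_run))
```

If you can't fill in the equivalent of `limit_ms` and `required_fraction` for a statement in the requirements document, that's a good hint it isn't a requirement yet.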

||||

| Failure of the Week | ||||

Peter House sent this speedometer reading:

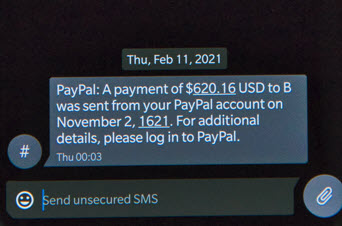

This is from Carl Palmgren. It seems PayPal has been around since the Thirty Years' War:

Have you submitted a Failure of the Week? I'm getting a ton of these and yours was added to the queue. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.

|

||||

| Joke For The Week | ||||

These jokes are archived here. What do you get if you cross a mosquito with a mountain climber? |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |