|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me.

I'll be speaking at the Embedded Online Conference, which takes place from May 17-20. The speaker lineup is pretty amazing! Sign up with promo code GANSSLE149 and wonderful things will happen like reversal of male pattern baldness, a guest spot on Teen Vogue magazine, and a boost of what JFK called "vim and vigor." It will also get you registered for $149 instead of the usual $290 fee. |

||||

| Quotes and Thoughts | ||||

Submitted by Vlad Z.: "Nur ein Idiot glaubt, aus eigenen Erfahrungen zu lernen." (In English: "Only an idiot believes he learns from his own experiences.") |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

In the last issue I discussed the Common Weakness Enumeration. One of the issues the CWE identifies is excessive McCabe Cyclomatic Complexity, which is a valid concern. Unfortunately it offers no guidance about what "excessive" means, and I wrote that sometimes a big switch statement breaks the rule while being a very clear way of expressing a complex idea.

A number of readers wrote that switch is the ideal way to implement a state machine. I agree... for simple state machines. Big ones get out of control pretty quickly. I generally prefer using a table defined in a structure. Each entry is one state or sub-state. It's impossible to generalize as every application will be different, but conceptually a table entry might include the next state, parameters associated with the current state, and a function pointer to the code to run that state. Code accepts events and sequences through the table.

Another alternative is Miro Samek's hierarchical state machines. |
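As a rough illustration of the table-driven approach described above, here is a minimal sketch in C. Everything in it is hypothetical (the states, the events, the `set_speed` action and the `motor_speed` variable stand in for a real application), and it keys each row on a state/event pair, which is only one of many ways to lay out such a table.

```c
#include <stddef.h>

/* Hypothetical states and events for a small motor controller. */
typedef enum { ST_IDLE, ST_RUNNING, ST_FAULT } state_t;
typedef enum { EV_START, EV_STOP, EV_ERROR, EV_RESET } event_t;

typedef void (*action_fn)(int param);

/* One table entry: where we are, what happened, where to go next,
   a parameter for this entry, and the code to run. */
typedef struct {
    state_t   state;   /* state this row applies to          */
    event_t   event;   /* event that triggers this row       */
    state_t   next;    /* state to enter afterwards          */
    int       param;   /* parameter handed to the action     */
    action_fn action;  /* code to run; NULL means do nothing */
} fsm_row_t;

static int motor_speed = 0;   /* stand-in for real hardware */

static void set_speed(int rpm) { motor_speed = rpm; }

static const fsm_row_t fsm_table[] = {
    { ST_IDLE,    EV_START, ST_RUNNING, 1200, set_speed },
    { ST_RUNNING, EV_STOP,  ST_IDLE,       0, set_speed },
    { ST_RUNNING, EV_ERROR, ST_FAULT,      0, set_speed },
    { ST_FAULT,   EV_RESET, ST_IDLE,       0, NULL      },
};

/* Accept an event, run the matching row's action, return the new state.
   Events with no matching row are ignored and the state is unchanged. */
state_t fsm_dispatch(state_t current, event_t ev)
{
    for (size_t i = 0; i < sizeof fsm_table / sizeof fsm_table[0]; i++) {
        const fsm_row_t *row = &fsm_table[i];
        if (row->state == current && row->event == ev) {
            if (row->action != NULL) {
                row->action(row->param);
            }
            return row->next;
        }
    }
    return current;
}
```

Adding a state or transition then means adding a row rather than another case in a growing switch, so the dispatch code itself never changes. A caller would keep the current state in a variable and update it with something like `state = fsm_dispatch(state, EV_START);` each time an event arrives.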

||||

| Freebies and Discounts | ||||

Thanks to Elektor this month's giveaway is a copy of Tam Hanna's book "Technical Modeling with OpenSCAD". OpenSCAD is an open source PC/Mac/Linux solid 3D modeler that seems to be increasingly popular. Tam's book is an easily accessible way to get started using this tool.

Enter via this link. |

||||

| The Schiaparelli Anomaly | ||||

Fabrizio Bernardini sent this link to the ExoMars 2016 - Schiaparelli Anomaly Inquiry. The Schiaparelli module, part of the ExoMars 2016 mission, was designed to land on Mars. Alas, it crashed. (The report claims it had a "hard landing", though the vehicle was totally destroyed when it hit the planet at 150 m/s). A few highlights make interesting reading (RDA is Radar Doppler Altimeter):

Because of the error in the estimated attitude that occurred at parachute inflation, the GNC Software projected the RDA range measurements with an erroneous off-vertical angle and deduced a negative altitude (cosinus of angles > 90 degrees are negative). There was no check on board of the plausibility of this altitude calculation.

A sufficiently conservative uncertainty management along all simulation / analysis activities was missing. This may include methods beyond standard modelling and simulation procedures.

The GNC design and verification approach was based on statistical Monte Carlo analysis, and pre-flight simulation already showed that values larger than 150deg/sec were possible. No specific worst case/stress analysis versus influential parameters (e.g. riser angle) was performed to investigate the system behaviour around this Monte Carlo case in a worst case input combination scenario.

As the GNC integrated such a rate, during the whole IMU saturation persistence time, it developed a bias error on the attitude. There was no analysis of plausibility of such an attitude, which was clearly impossible, e.g. the RDA echoes were received from the surface of Mars.

From the System point of view the main weaknesses of the adopted approach were the fact that insufficient FDIR analysis, "what if"/robustness analysis, and worst case analysis were performed for the most critical mission phases of the EDL.

In the adopted system approach only failures or anomalies of the RDA were considered, i.e. if consistency checks of the altitude derived from slant ranges and GNC estimated attitude would fail, it was considered that it would be due to an RDA anomaly, no fear of inconsistency due to wrong attitude estimate was identified. Nevertheless, as the RDA was absolutely necessary to land, it was included in the loop without putting in question the other component of the altitude determination, the GNC attitude estimate.

The presence of different measurements (accelerations, angular rates, radar altimeter measurements, time, etc.) could have been exploited for cross check purposes and to elaborate a parallel logic for degraded modes leading to a safe landing.

The parallels to the 737 MAX errors are striking. It's good they did an analysis of the failure so future missions won't make the same mistakes. I suspect that future missions will make similar mistakes. |
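To make the missing plausibility check concrete, here is a small sketch in C. It is not taken from the flight software or the inquiry report: the function name, the status codes and the numbers are all made up for illustration, and it assumes the attitude estimate has already been reduced to the cosine of the off-vertical angle of the radar beam. The idea is simply that a radar receiving echoes from the surface must be pointing below the horizon, so a zero or negative cosine indicts the attitude estimate rather than the altimeter.

```c
/* Hypothetical status codes; a bad attitude is reported separately from a
   bad range so the caller does not blame the altimeter for everything. */
typedef enum { ALT_OK, ALT_BAD_RANGE, ALT_BAD_ATTITUDE } alt_status_t;

/* Project a slant range onto the vertical.
   cos_off_vertical is the cosine of the angle between the radar beam and
   straight down, as derived from the estimated attitude.
   On success the altitude is written to *altitude_m; on any failure
   *altitude_m is left untouched. */
alt_status_t project_altitude(double slant_range_m,
                              double cos_off_vertical,
                              double *altitude_m)
{
    /* Written as !(x > 0) so that a NaN input is rejected too. */
    if (!(slant_range_m > 0.0)) {
        return ALT_BAD_RANGE;
    }

    /* Echoes are coming back from the ground, so the beam must point
       below the horizon: the cosine has to lie in (0, 1]. Anything else
       means the attitude estimate is wrong, not the range. */
    if (!(cos_off_vertical > 0.0 && cos_off_vertical <= 1.0)) {
        return ALT_BAD_ATTITUDE;
    }

    *altitude_m = slant_range_m * cos_off_vertical;
    return ALT_OK;
}
```

With an estimated off-vertical angle beyond 90 degrees the cosine goes negative and this sketch returns `ALT_BAD_ATTITUDE` instead of a negative altitude. What the system should then do (fall back on accelerations, elapsed time or another degraded-mode logic) is the harder design question the report raises.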

||||

| Click Here to Kill Everybody | ||||

I am a fan of security pundit Bruce Schneier's work. However, his book Click Here to Kill Everybody left me underwhelmed.

Your computer can be hacked. So can your phone. It turns out that there are computers in most of your electronics and those can be hacked. Perhaps this is news to some. But that's pretty much the entire first 100 pages.

The book is more than a little hysterical. From page 62: "HP printers no longer allow you to use unauthorized print cartridges. Tomorrow, the company might require you to use only authorized paper - or refuse to print copyrighted words you haven't paid for." It's not legal in the USA to copyright a word.

He suggests that we impose the death penalty on companies that collect personal information and whose databases get hacked, "death penalty" meaning having the company taken over by the government and the investors wiped out. While there's more than a little appeal in that - make the stockholders, officers and directors personally responsible for the hack - it's notoriously difficult to penetrate the corporate shield. Currently those in charge get no more than a slap on the hand. Consider Equifax: that hack released the data of 150 million Americans. Congress investigated. The only legislation they passed made it illegal for those affected to sue Equifax. That seems an awful lot like a bill of attainder to me, which is unconstitutional.

Schneier mentions that many systems, like ATMs, use Windows XP, which has been obsolete and unsupported for decades. He rightly says this is a problem. But what's the solution? Who is going to patch it? Is a company liable for maintaining a product forever? And he touches on, but glosses over, the problems of IoT devices which may have decades-long lives. If one uses Windows or Linux or even an RTOS like FreeRTOS, who is responsible for keeping these products secure for perhaps generations? While I agree these are big problems, what is the solution?

He talks about more thorough testing to find security flaws. That's a good idea... but it won't work. Testing will find some problems but we'll never know if it has found them all.

He writes that there's really no chance non-state actors will ever produce "super-germs that can kill millions of people." The book's copyright is 2018 so it predates the pandemic. I recently finished Walter Isaacson's The Code Breaker, which is about CRISPR gene editing. Unfortunately, it appears such super-germs are not inconceivable or particularly difficult to make.

Schneier does recognize the problems and provides solutions, though he admits these are not enough. Those presented are a bewildering array that makes the problem seem completely intractable, requiring wise decisions from government, industry and us, with plenty of money allocated. None of that seems likely.

Click Here to Kill Everybody might be a good book for non-techies who have not been in the middle of this battle for so long. But I suspect few of us would gain much from it, other than, perhaps, a feeling of even more hopelessness at the scale of this problem. |

||||

| Is 90% OK OK? | ||||

Miro Samek wrote:

Any thoughts? I mostly agree with Miro. Software is a very different kind of engineering artifact. If you build a bridge and a ten ton truck goes safely across, the odds are pretty good that a one ton truck will have no problem. But software fails in many more complex ways. In the example above, running with a corrupted variable might be OK. Or it could be catastrophic. There's no way of knowing.

However, there are times when we want to fail into a degraded mode. Things are operating properly - in this mode - but perhaps full system functionality isn't there. This is analogous to the "limp home" mode in modern cars.

Some would advocate doing a reboot and hoping that the error isn't encountered again. That's a pretty good strategy in many cases. But consider a peritoneal dialysis machine, which pumps dialysate into a person's gut, waits, and then pumps it out. A reboot would presumably make the system think the process hasn't started. If the crash happened halfway through a cycle a patient with a belly full of chemicals might get even more. Probably the best solution is to go to a degraded mode that extracts the dialysate and then shuts the machine down. Or one that shuts down but warns the patient that s/he needs a trip to the ER.
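One way to picture that dialysis example is a boot-time decision that looks at where the last cycle stopped before doing anything else. The sketch below is purely illustrative and assumes things the text does not say: that the machine records its current phase in non-volatile memory, that the record can be validated (say, with a CRC), and that a pump self-test exists. All names are hypothetical.

```c
#include <stdbool.h>

/* Hypothetical phases of one dialysis cycle, saved as the cycle runs. */
typedef enum { PHASE_IDLE, PHASE_FILL, PHASE_DWELL, PHASE_DRAIN } phase_t;

/* What the machine should do after a restart. */
typedef enum {
    MODE_NORMAL,              /* nothing was in progress              */
    MODE_DRAIN_THEN_SHUTDOWN, /* degraded: extract fluid, then stop   */
    MODE_SHUTDOWN_AND_ALERT   /* stop and tell the patient to get help */
} boot_mode_t;

/* Decide the boot mode from the saved phase.
   record_valid: the saved phase passed its integrity check.
   pump_ok:      the drain pump passed its self-test. */
boot_mode_t choose_boot_mode(phase_t saved_phase, bool record_valid, bool pump_ok)
{
    if (!record_valid) {
        /* We cannot tell whether the patient is holding fluid. */
        return MODE_SHUTDOWN_AND_ALERT;
    }
    if (saved_phase == PHASE_IDLE) {
        return MODE_NORMAL;
    }
    /* A cycle was interrupted: never start a new fill. */
    return pump_ok ? MODE_DRAIN_THEN_SHUTDOWN : MODE_SHUTDOWN_AND_ALERT;
}
```

The point of the sketch is only that a blind reboot into normal operation is never chosen when a cycle may have been in progress. A real medical device would need far more than this.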

|

||||

| Failure of the Week | ||||

Louis Mamakos writes:

|

||||

| This Week's Cool Product | ||||

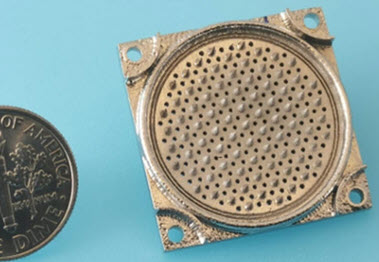

Building a cubesat? Now you can 3D print an electrospray thruster the size of a dime. There's not much thrust (dozens of micronewtons) but it's cheap... and cool!

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here.

Normal people believe that if it ain't broke, don't fix it. Engineers believe that if it ain't broke, it doesn't have enough features yet. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |