|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||


| Editor's Notes | ||||

|

Tip for sending me email: My email filters are super aggressive and I no longer look at the spam mailbox. If you include the phrase "embedded muse" in the subject line your email will wend its weighty way to me. In the "ya gotta be kidding me" department, entry level engineers at Lyft and some similar companies make the better part of a quarter million bucks per year. With 5+ years experience some of these companies offer packages approaching a half-mil. The first commercial microprocessor was introduced in 1971, a half-century ago. To mark this occasion the two January Muses cover the history of that crucial invention. |

||||

| Quotes and Thoughts | ||||

"The iceberg is the hazard. The risk is that a ship will run into it. One mitigation might be to paint the iceberg yellow to make it more visible. Even painted yellow there is still the residual risk that a collision might happen at night or in fog." Chris Hobbs |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Steve Branam has some advice about how one should go about getting a new job. It used to be you needed little more than a resume; today there's a lot more background info required. Regular readers know I'm an Ada fan. I get a lot of email, though, from people who think the language isn't useful on microcontrollers. That's simply wrong. Adacore has a demo that can get you running on any Cortex-M or RISC V device in minutes. |

||||

| Freebies and Discounts | ||||

Given this issue's history theme, this month's giveaway is a circa 1949 VTVM from Heathkit.

What's a VTVM? These used to be ubiquitous in labs but are unknown today. It's a vacuum tube voltmeter. Maybe you have one of those cheap Radio Shack volt-ohmmeters (VOMs). They typically have a terrible input impedance so will load circuits, sometimes giving erroneous readings. A VTVM feeds the input to the grid of a vacuum tube ("valve" to our British friends), giving the meter a very high (on the order of 10 MΩ) impedance. The meter doesn't work. I took it apart and found the power-supply capacitor is bad; probably the others need replacing, too. Surprisingly, the selenium rectifier works, but I'd replace it with a silicon diode. This would be a fun repair project. A manual with schematics is here. Enter via this link. |

||||

| The Story of the Microprocessor - Part 2 | ||||

(Part 1 of this story is here).

We're on track, by 2010, for 30-gigahertz devices, 10 nanometers or less, delivering a tera-instruction of performance.
- Pat Gelsinger, Intel, 2002

We all know how in 1947 Shockley, Bardeen and Brattain invented the transistor, ushering in the age of semiconductors. But that common knowledge is wrong. Julius Lilienfeld patented devices that resembled field effect transistors (though they were based on metals rather than modern semiconductors) in the 1920s and 30s (he also patented the electrolytic capacitor). Indeed, the USPTO rejected early patent applications from the Bell Labs boys, citing Lilienfeld's work as prior art.

Semiconductors predated Shockley et al by nearly a century. In 1874 Karl Ferdinand Braun found that some crystals conducted current in only one direction. Indian scientist Jagadish Chandra Bose used crystals to detect radio waves as early as 1894, and Greenleaf Whittier Pickard developed the cat's whisker diode. Pickard examined 30,000 different materials in his quest to find the best detector, rusty scissors included. Like thousands of others, I built an AM radio using a galena cat's whisker and a coil wound on a Quaker Oats box as a kid, though by then everyone was using modern diodes.

A crystal radio - there's not much to it.

As noted earlier, RADAR research during World War II made systems that used huge numbers of vacuum tubes both possible and common. But that work also led to practical silicon and germanium diodes. These mass-produced elements had a chunk of the semiconducting material that contacted a tungsten whisker, all encased in a small cylindrical cartridge. At assembly time workers tweaked a screw to adjust the contact between the silicon or germanium and the whisker. With part numbers like 1N21, these were employed in the RADAR sets built by MIT's Rad Lab and other vendors.

Volume 15 of MIT's Radiation Laboratory Series, titled "Crystal Rectifiers," shows that quite a bit was understood about the physics of semiconductors during the War. The title of volume 27 tells a lot about the state of the art of computers: "Computing Mechanisms and Linkages."

Early tube computers used crystal diodes. Lots of diodes: the ENIAC had 7,200, Whirlwind twice that number. I have not been able to find out anything about what types of diodes were used or the nature of the circuits, but imagine an analog with 60s-era diode-transistor logic.

While engineers were building tube-based computers, a team led by William Shockley at Bell Labs researched semiconductors. John Bardeen and Walter Brattain created the point contact transistor in 1947, but did not include Shockley's name on the patent application. Shockley, who was as irascible as he was brilliant, in a huff went off and invented the junction transistor. One wonders what wonder he would have invented had he been really slighted.

Point contact versions did go into production. Some early parts had a hole in the case; one would insert a tool to adjust the pressure of the wire on the germanium. So it wasn't long before the much more robust junction transistor became the dominant force in electronics. By 1953 over a million were made; four years later production increased to 29 million a year.
That's about the same number of transistors as on a single Pentium III integrated circuit in 2000.

The first commercial transistor was probably the CK703, which became available in 1950 for $20 each, or $188 in today's dollars.

Meanwhile tube-based computers were getting bigger, hotter and sucked ever more juice. The same University of Manchester which built the Baby and Mark 1 in 1948 and 1949 got a prototype transistorized machine going in 1953, and the full-blown model running two years later. With a 48 (some sources say 44) bit word, the prototype used only 92 transistors and 550 diodes! Even the registers were stored on drum memory, but it's still hard to imagine building a machine with so few active elements. The follow-on version used just 200 transistors and 1300 diodes, still no mean feat. (Both machines did employ tubes in the clock circuit). But tube machines were more reliable as this computer ran about an hour and a half between failures. Though deadly slow it demonstrated a market-changing feature: just 150 watts of power were needed. Compare that to the 25 kW consumed by the Mark 1. IBM built an experimental transistorized version of their 604 tube computer in 1954; the semiconductor version ate just 5% of the power needed by its thermionic brother.

The first completely-transistorized commercial computer was the, well, a lot of machines vie for credit and the history is a bit murky. Certainly by the mid-50s many became available. Earlier I claimed the Whirlwind was important at least because it spawned the SAGE machines. Whirlwind also inspired MIT's first transistorized computer, the 1956 TX-0, which had Whirlwind's 18 bit word. Ken Olsen, one of DEC's founders, was responsible for the TX-0's circuit design. DEC's first computer, the PDP-1, was largely a TX-0 in a prettier box. Throughout the 60s DEC built a number of different machines with the same 18 bit word.
(DEC, or Digital Equipment Corporation, was a hugely important player in the minicomputer market during the '60s and '70s. They were acquired by Compaq, which in turn merged with HP).

The TX-0 was a fully parallel machine in an era where serial was common. (A serial computer works on a single bit at a time; modern parallel machines work on an entire word at once. Serial computing is slow but uses far fewer components.) Its 3600 transistors, at $200 a pop, cost about a megabuck. And all were enclosed in plug-in bottles, just like tubes, as the developers feared a high failure rate. But by 1974 after 49,000 hours of operation fewer than a dozen had failed.

The official biography of the machine (RLE Technical Report No. 627) contains tantalizing hints that the TX-0 may have had 100 vacuum tubes, and the 150 volt power supplies it describes certainly align with vacuum tube technology.

IBM's first transistorized computer was the 1958 7070. This was the beginning of the company's important 7000 series which dominated mainframes for a time. A variety of models were sold, with the 7094 for a time occupying the "fastest computer in the world" node. The 7094 used over 50,000 transistors. Operators would use another, smaller, computer to load a magnetic tape with many programs from punched cards, and then mount the tape on the 7094. We had one of these machines my first year in college. Operating systems didn't offer much in the way of security, and some of us figured out how to read the input tape and search for files with grades.

The largest 7000-series machine was the 7030 "Stretch," a $100 million (in today's dollars) supercomputer that wasn't super enough. It missed its performance goals by a factor of three, and was soon withdrawn from production. Only 9 were built. The machine had a staggering 169,000 transistors on 22,000 individual printed circuit boards.
Interestingly, in a paper named "The Engineering Design of the Stretch Computer," the word "millimicroseconds" is used in place of "nanoseconds."

While IBM cranked out their computing behemoths, small machines gained in popularity. Librascope's $16k ($118k today) LGP-21 had just 460 transistors and 300 diodes. It came out in 1963, the same year as DEC's $27k PDP-5. Two years later DEC produced the PDP-8, which was wildly successful, eventually selling some 300,000 units in many different models. Early units were assembled from hundreds of DEC's "flip chips," small PCBs that used diode-transistor logic with discrete transistors. A typical flip chip implemented three two-input NAND gates. Later PDP-8s used ICs; the entire CPU was eventually implemented on a single integrated circuit.

A PDP-8. The cabinet on top holds hundreds of flip chips.

One of DEC's flip-chips. The board has just 9 transistors and some discrete components.

But whoa! Time to go back a little. Just think of the cost and complexity of the Stretch. Can you imagine wiring up 169,000 transistors? Thankfully Jack Kilby and Robert Noyce independently invented the IC in 1958/9. The IC was so superior to individual transistors that soon they formed the basis of most commercial computers.

Actually, that last clause is not correct. ICs were hard to get. The nation was going to the moon, and by 1963 the Apollo Guidance Computer used 60% of all of the ICs produced in the US, with per-unit costs ranging from $12 to $77 ($88 to $570 today) depending on the quantity ordered. One source claims that the Apollo and Minuteman programs together consumed 95% of domestic IC production.

Jack Kilby's first IC.

Every source I've found claims that all of the ICs in the Apollo computer were identical: 2800 dual three-input NOR gates, using three transistors per gate. But the schematics show two kinds of NOR gates, "regular" versions and "expander" gates.

The market for computers remained relatively small till the PDP-8 brought prices to a more reasonable level, but the match of minis and ICs caused costs to plummet. By the late 60s everyone was building computers. Xerox. Raytheon (their 704 was possibly the ugliest computer ever built). Interdata. Multidata. Computer Automation. General Automation. Varian. SDS. A complete list would fill a page.

Minis created a new niche: the embedded system, though that name didn't surface for many years. Labs found that a small machine was perfect for controlling instrumentation, and you'd often find a rack with a built-in mini that was part of an experimenter's equipment.

The PDP-8/E was typical. Introduced in 1970, this 12 bit machine cost $6,500 ($38k today). Instead of hundreds of flip chips the machine used a few large PCBs with gobs of ICs to cut down on interconnects. Circuit density was just awful compared to today. The technology of the time was small scale ICs, each of which contained a couple of flip flops or a few gates, and medium scale integration. An example of the latter is the 74181 ALU which performed simple math and logic on a pair of four bit operands. Amazingly, TI still sells the military version of this part. It was used in many minicomputers, such as Data General's Nova line and DEC's seminal PDP-11.

The PDP-11 debuted in 1970 for about $11k with 4k words of core memory. Those who wanted a hard disk shelled out more: a 512KB disk with controller ran an extra $14k ($82k today). Today a terabyte disk drive runs about $50. If it had been possible to build such a drive in 1970, the cost would have been on the order of $160 billion.
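For anyone who wants to check the disk arithmetic, here is a rough sketch in Python. It assumes decimal units (512 KB taken as 512,000 bytes and a terabyte as 10^12 bytes) and simply counts how many 1970-style disks it would take to reach a terabyte. The function and constant names are made up for illustration.

```python
def scaled_disk_cost(target_bytes, unit_bytes, unit_cost_dollars):
    """Cost of reaching target_bytes by buying whole disks of unit_bytes each."""
    units = -(-target_bytes // unit_bytes)  # ceiling division: no partial disks
    return units * unit_cost_dollars

# Figures from the text; decimal units are an assumption.
TERABYTE = 10**12            # bytes
DISK_1970_BYTES = 512_000    # the 512KB disk
DISK_1970_COST = 82_000      # its price with controller, in today's dollars

cost = scaled_disk_cost(TERABYTE, DISK_1970_BYTES, DISK_1970_COST)
print(f"about ${cost / 1e9:.0f} billion")
```

That works out to roughly two million disks. Using binary kilobytes instead shifts the answer by a few percent, which doesn't change the order of magnitude.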

The PDP-11.

Experienced programmers were immediately smitten with the PDP-11's rich set of addressing modes and completely orthogonal instruction set. Most prior, and too many subsequent, ISAs were constrained by the costs and complexity of the hardware, and were awkward and full of special cases. A decade later IBM incensed many by selecting the 8088, whose instruction set was a mess, over the orthogonal 68000 which in many ways imitated the PDP-11. Around 1990 I traded a case of beer for a PDP-11/70 which filled three tall racks, but eventually was unable to even give it away.

Minicomputers were used in embedded systems even into the 80s. We put a PDP-11 in a steel mill in 1983. It was sealed in an explosion-proof cabinet and interacted with Z80 microprocessors. The installers had for reasons unknown left a hole in the top of the cabinet. A window in the steel door let operators see the machine's controls and displays. I got a panicked 3 AM call one morning - someone had cut a water line in the ceiling. Not only were the computer's lights showing through the window - so was the water level. All of the electronics were submerged.

Data General was probably the second most successful mini vendor. Their Nova was a 16 bit design introduced a year before the PDP-11, and it was a pretty typical machine in that the instruction set was designed to keep the hardware costs down. A bare-bones unit with no memory ran about $4k - lots less than DEC's offerings. In fact, early versions used a single four-bit 74181 ALU with data fed through it a nibble at a time. The circuit boards were 15" x 15", just enormous, populated with a sea of mostly 14 and 16 pin DIP packages. The boards were two layers, and often had hand-strung wires where the layout people couldn't get a track across the board.

The Nova was peculiar as it could only address 32K words. Bit 15, if set, meant the data was an indirect address (in modern parlance, a pointer).
It was possible to cause the thing to indirect forever.
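To see how a machine can "indirect forever," here is a minimal Python model of the scheme described above. It is a sketch under stated assumptions, not a description of the Nova's actual hardware: memory is a plain dictionary, the top bit of a 16 bit word is the indirect flag, and the other 15 bits are the address. The names `resolve`, `IndirectionLoop` and `max_hops` are invented for the example. Real hardware had no hop limit, which is exactly the problem.

```python
INDIRECT_FLAG = 0x8000  # top bit of a 16 bit word: "this word is a pointer"
ADDRESS_MASK = 0x7FFF   # remaining 15 bits: the address itself

class IndirectionLoop(Exception):
    """Raised when a pointer chain has not ended within the hop limit."""

def resolve(memory, word, max_hops=64):
    """Follow indirect words until the flag is clear; return (address, hops)."""
    hops = 0
    while word & INDIRECT_FLAG:
        if hops >= max_hops:
            raise IndirectionLoop(f"still indirecting after {hops} hops")
        word = memory[word & ADDRESS_MASK]  # fetch the word being pointed at
        hops += 1
    return word & ADDRESS_MASK, hops

# A chain that ends: location 0o100 points to 0o200, which holds a plain address.
memory = {0o100: INDIRECT_FLAG | 0o200, 0o200: 0o300}
print(resolve(memory, INDIRECT_FLAG | 0o100))

# A word that points at itself never resolves.
memory[0o400] = INDIRECT_FLAG | 0o400
try:
    resolve(memory, INDIRECT_FLAG | 0o400)
except IndirectionLoop as err:
    print("loop:", err)
```

The hop limit exists only so the simulation terminates. Without it, the self-referencing word at 0o400 would keep the loop spinning, just as the text describes.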

A board from a Data General Nova computer.

Before minis few computers had a production run of even 100 (IBM's 360 was a notable exception). Some minicomputers, though, were manufactured in the tens of thousands. Those quantities would look laughable when the microprocessor started the modern era of electronics.

Microprocessors Change the World

I have always wished that my computer would be as easy to use as my telephone. My wish has come true. I no longer know how to use my telephone.
- Bjarne Stroustrup

Everyone knows how Intel invented the computer on a chip in 1971, introducing the 4004 in an ad in a November issue of Electronic News. But everyone might be wrong.

TI filed for a patent for a "computing systems CPU" on August 31 of that same year. It was awarded in 1973 and eventually Intel had to pay licensing fees. It's not clear when they had a functioning version of the TMS1000, but at the time TI engineers thought little of the 4004, dismissing it as "just a calculator chip" since it had been targeted to Busicom's calculators. Ironically the HP-35 calculator later used a version of the TMS1000.

But the history is even murkier. In part 1 of this story I explained that the existence of the Colossus machine was secret for almost three decades after the war, so ENIAC was incorrectly credited with being the first useful electronic digital computer. A similar parallel haunts the first microprocessor.

Grumman had contracted with Garrett AiResearch to build a chipset for the F-14A's Central Air Data Computer. Parts were delivered in 1970, and not a few historians credit the six chips comprising the MP944 as being the first microprocessor. But the chips were secret until they were declassified in 1998. Others argue that the multi-chip MP944 shouldn't get priority over the 4004, as the latter's entire CPU did fit into a single bit of silicon.
In 1969 Four-Phase Systems built the 24 bit AL1, which used multiple chips segmented into 8 bit hunks, not unlike a bit-slice processor. In a patent dispute a quarter-century later proof was presented that one could implement a complete 8 bit microprocessor using just one of these chips. The battle was settled out of court, which did not settle the issue of the first micro.

Then there's Pico Electronics in Glenrothes, Scotland, which partnered with General Instrument (whose processor products were later spun off into Microchip) to build a calculator chip called the PICO1. That part reputedly debuted in 1970, and had the CPU as well as ROM and RAM on a single chip.

Clearly the microprocessor was an idea whose time had come.

Japanese company Busicom wanted Intel to produce a dozen chips that would power a new printing calculator, but Intel was a memory company. Ted Hoff realized that a design with a general-purpose processor would consume gobs of RAM and ROM. Thus the 4004 was born.

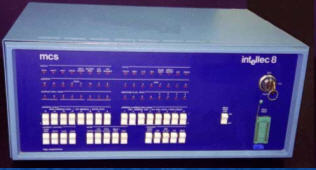

The 4004 microprocessor.

It was a four-bit machine packing 2300 transistors into a 16 pin package. Why 16 pins? Because that was the only package Intel could produce at the time. Today fabrication folk are wrestling with the 7 nanometer and even 5 nm process nodes. The 4004 used 10,000 nm geometry.

The chip itself cost about $1100 in today's dollars, or about half a buck per transistor. Best Buy currently lists some laptops for about $200, or a few microcents per transistor. And that's ignoring the keyboard, display and all of the rest of the components and software that go with the laptop.

Though Busicom did sell some 100,000 4004-powered calculators, the part's real legacy was the birth of the age of embedded systems and the dawn of a new era of electronic design. Before the microprocessor it was absurd to consider adding a computer to a product; now it's hard to think of anything electronic without embedded intelligence.

At first even Intel didn't understand the new age they had created. In 1952 Howard Aiken figured a half-dozen mainframes would be all the country needed, and in 1971 Intel's marketing people estimated total demand for embedded micros at 2000 chips per year. Federico Faggin used one in the 4004's production tester, which was perhaps the first microcomputer-based embedded system. About the same time the company built the first EPROM and it wasn't long before they slapped a microprocessor into the EPROM burners. It quickly became clear that these chips might have some use after all. Indeed, Ted Hoff had one of his engineers build a video game - Space War - using the 4004, though management felt games were goofy applications with no market.

In parallel with the 4004's development Intel had been working with Datapoint on a computer, and in early 1970 Ted Hoff and Stanley Mazor started work on what would become the 8008 processor.
1970 was not a good year for technology; as the Apollo program wound down many engineers lost their jobs, some pumping gas to keep the families fed. (Before microprocessors automated the pumps gas stations had legions of attendants who filled the tank and checked the oil. They even washed windows.) Datapoint was struggling, and eventually dropped Intel's design.

In April, 1972, just months after releasing the 4004, Intel announced the 8008, the first 8 bit microprocessor. It had 3500 transistors and cost $2200 in 2021 dollars. This 18 pin part was also constrained by the packages the company knew how to build, so it multiplexed data and addresses over the same connections.

Typical development platforms were an Intellec 8 (a general-purpose 8008-based computer) connected to a TTY. One would laboriously put the binary instructions for a tiny bootloader into memory by toggling front-panel switches. That is, just to get the thing to boot meant hundreds of switch flips. The bootloader would suck in a better loader from the TTY's 10 character-per-second paper tape reader. Then, the second loader read the text editor's tape. After entering the source code the user punched a source tape, and read in the assembler. The assembler read the source tape - three times - and punched an object tape. Load the linker, again through the tape reader. Load the object tapes, and finally the linker punched a binary. It took us three days to assemble and link a 4KB binary program. When things worked. Once I spent hours typing up a source file, entered the command to punch the source tape, and the editor punched the entire program backwards: the last character came out first.

Needless to say, debugging meant patching in binary instructions with only a very occasional re-build.
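A quick back-of-the-envelope sketch shows why that tape reader hurt. It assumes one tape character per byte and ignores leaders, checksums and rewinding, so real loads took longer. The function name and the `passes` parameter are hypothetical, and only the 10 characters per second and 4KB figures come from the text.

```python
def tape_read_seconds(num_characters, passes=1, chars_per_second=10):
    """Seconds spent reading a tape of num_characters, `passes` times over."""
    return num_characters * passes / chars_per_second

BINARY_BYTES = 4 * 1024  # the 4KB binary mentioned above

one_pass = tape_read_seconds(BINARY_BYTES)
three_passes = tape_read_seconds(BINARY_BYTES, passes=3)
print(f"one pass over 4KB:     {one_pass / 60:.1f} minutes")
print(f"three passes over 4KB: {three_passes / 60:.1f} minutes")
```

Source tapes are far longer than the binaries they produce, and the editor, assembler and linker each had to be loaded the same way, so the minutes pile up quickly even before anything goes wrong.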

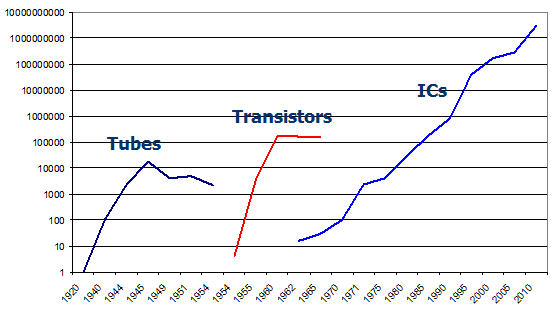

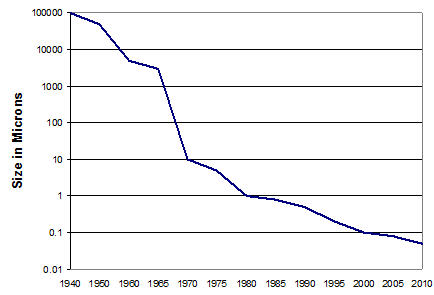

An Intellec 8.

The world had changed. Where I worked we had been building a huge instrument that had an embedded minicomputer. The 8008 version was a tenth the price, a tenth the size, and had a market hundreds of times bigger.

It wasn't long before the personal computer came out. In 1973 at least four 8008-based computers targeted to hobbyists appeared: The MCM-70, the R2E Micral, the Scelbi-8H, and the Mark-8. The latter was designed by Jon Titus, who tells me the prototype worked the first time he turned it on. The next year Radio Electronics published an article about the Mark-8, and several hundred circuit boards were sold. People were hungry for computers.

"Hundreds of boards" means most of the planet's billions were still computer-free. I was struck by how much things have changed when the PC in my woodworking shop died. I bought a used Pentium PC for $60. The seller had a garage with pallets stacked high with Dells, maybe more than all of the personal computers in the world in 1973. And why have a PC in a woodworking shop? Because we live in the country where radio stations are very weak. Instead I get their web broadcasts. So this story, which started with the invention of radio, circles back on itself. I use many billions of transistors to emulate a four-tube radio.

By the mid-70s the history of the microprocessor becomes a mad jumble of product introductions by dozens of companies. A couple are especially notable.

Intel's 8080 was a greatly improved version of the 8008. The part was immediately popular, but so were many similar processors from other vendors. The 8080, though, spawned the first really successful personal computer, the Altair 8800. This 1975 machine used a motherboard into which various cards were inserted. One was the processor and associated circuits. Others could hold memory boards, communications boards, etc. Offered in kit form for $1800 (in today's dollars), memory was optional. 1KB of RAM was $700.
MITS expected to sell 800 a year but were flooded with orders for 1000 in the first month.

Computers are useless without software, and not much existed for that machine. A couple of kids from New England got a copy of the 8080's datasheet and wrote a simulator that ran on a PDP-10 mainframe. Using that, they wrote and tested a Basic interpreter. One flew to MITS to demonstrate the code, which worked the very first time it was tried on real hardware. Bill Gates and Paul Allen later managed to sell a bit of software for other brands of PCs.

The 8080 required three different power supplies (+5, -5 and +12) as well as a two-phase clock. A startup named Zilog improved the 8080's instruction set considerably and went to a single-supply, single-clock design. Their Z80 hugely simplified the circuits needed to support a microprocessor, and was used in a stunning number of embedded systems as well as personal machines, like Radio Shack's TRS-80. CP/M ran most of the Z80 machines. It was the inspiration for the x86's DOS.

But processors were expensive. The 8080 debuted at $400 ($1700 today) just for the chip.

Then MOS Technology introduced the 6501 at a strategic price of $20 (some sources say $25, but I remember buying one for twenty bucks) in 1974. The response? Motorola sued, since the pinout was identical to their 6800. A new version with scrambled pins quickly followed, and the 6502 helped launch a little startup named Apple.

Other vendors were forced to lower their prices. The result was that cheap computers meant lots of computers. Cut costs more and volumes explode.

Active Elements

In this article I've portrayed the history of the electronics industry as a story of the growth in use of active elements. For decades no product had more than a few tubes. Because of RADAR, between 1935 and 1944 some electronic devices employed hundreds. Computers drove the numbers to the thousands. In the 50s SAGE had 100,000 per machine.
Just 6 years later the Stretch squeezed in 170,000 of the new active element, the transistor.

We embedded folk whose families are fed by Moore's Law know what has happened: some micros today contain 3 billion transistors on a square centimeter of silicon; memory parts are even denser. A very capable 32 bit microcontroller (that is, the CPU, memory and all of the needed I/O) costs under $0.50, not bad compared to the millions of dollars needed for a machine just a few decades ago. That half-buck microcontroller is probably a million times faster than ENIAC.

But how often do we stand back and think about the implications of this change?

Active elements have shrunk in length by about a factor of a million, but an IC is a two-dimensional structure, so the effective shrink in area is that factor squared, more like a trillion.

The cost per GFLOP has fallen by a factor of about 10 billion since 1945.

Growth of active elements in electronics. Note the log scale.

Size of active elements. Also a log scale.

It's claimed the iPad 2 has about the compute capability of the Cray 2, 1985's leading supercomputer. The Cray cost $35 million; the iPad goes for $500. Apple's product runs 10 hours on a charge; the Cray needed 150 kW and liquid Fluorinert cooling.

My best estimate pegs an iPhone (including memory) at several hundred billion transistors. If we built one using the ENIAC's active element technology the phone would be about the size of 170 Vehicle Assembly Buildings (the largest single-story building in the world). That would certainly discourage texting while driving. Weight? 2500 Nimitz-class aircraft carriers. And what a power hog! Figure over a terawatt, requiring all of the output of 500 Olkiluoto power plants (the largest nuclear plant in the world). An ENIAC-technology iPhone would run a cool $60 trillion, about half the GDP of the entire world. And that's before AT&T's monthly data plan charges. (One AT&T plan amortizes a phone at $36/month; at that rate it would take about 140 billion years to pay off the ENIAC-style device).

Without the microprocessor there would be no Google. No Amazon. No Wikipedia, no web, even. To fly somewhere you'd call a travel agent, on a dumb phone. The TSA would hand-search you... uh, they still do. Cars would get 20 MPG. No smart thermostats, no remote controls, no HDTV. Slide rules instead of calculators. Vinyl disks, not MP3s. Instead of an iPad you'd have a pad of paper. CAT scans, MRIs, PET scanners and most of modern medicine wouldn't exist. Software engineering would be a minor profession practiced by a relative few.

Accelerating Tech

Genus Homo appeared about 2 million years ago. Perhaps our first invention was the control of fire; barbecuing started around 400,000 BCE. For almost all of those millennia Homo was a hunter-gatherer, until the appearance of agriculture 10,000 years ago. After another 4k laps around the sun some genius created the wheel, and early writing came around 3,000 BCE.
Though Gutenberg invented the printing press in the 15th century, most people were illiterate until the industrial revolution. That was about the time when natural philosophers started investigating electricity.

In 1866 it cost $100 to send a ten word telegram through Cyrus Field's transatlantic cable. A nice middle-class house ran $1000. That was before the invention of the phonograph. The only entertainment in the average home was the music the family made themselves. One of my great-grandfathers died in the 1930s, just a year after electricity came to his farm.

My grandparents were born towards the close of the 19th century. They lived much of their lives in the pre-electronic era. When probing for some family history my late grandmother told me that, yes, growing up in Manhattan she actually knew someone, across town, who had a telephone. That phone was surely a crude device, connected through a manual patch panel at the exchange, using no amplifiers or other active components. It probably used the same carbon transmitter Edison invented in 1877.

My parents grew up with tube radios but no other electronics.

I was born before a transistorized computer had been built. As a kid we had one Heathkit tube amplifier my dad built for a record turntable. In college all of the engineering students used slide rules exclusively, just as my dad had a generation earlier at MIT. The 40,000 students on campus all shared access to a single mainframe. But my kids were required to own a laptop as they entered college, and they have grown up never knowing a life without cell phones or any of the other marvels enabled by microprocessors that we take for granted.

The history of electronics spans just a flicker of the human experience. In a century we've gone from products with a single tube to those with hundreds of billions of transistors. The future is inconceivable to us, but surely the astounding will be commonplace.

As it is today.
Thanks to Stan Mazor and Jon Titus for their correspondence and background information. |

||||

| Failure of the Week | ||||

A lot of readers are sending in entries for the Failure of the Week. Thanks! Keep 'em coming. I add each one to the queue so it may be some weeks or months before I run yours. Currently there are about 30 waiting to go. From Andy Kirby: Energy Smart Meter remote display, supplied by EDF Energy. I am not looking forward to my next Gas bill. Occasionally when the daily usage resets, it resets to £99999 instead of zero and stays stuck there all day until the next daily reset. I suspect there is a timing collision between the daily reset routine and the incoming reading that causes this. There's millions of these units in use around the UK...

|

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here. I shot a query into the Net. A posted message called me rotten A lawyer sent me private mail One netter thought it was a hoax: Each day I scan each Subject line |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |