Happy Beethoven's birthday!

Some HP SSDs "stop running after the 32,768-hour mark." The citation is from an article in Electronic Design. The language is a bit muddy; I expect they fail as the time rolls over from 32,767 (the largest value a signed 16-bit integer can hold) to 32,768 hours. Bugs are all too common, but consider this: that's almost four years of continuous operation. No reasonable amount of testing would uncover the problem. While test is critical, it's inadequate. Without access to the code it's impossible to be certain, but I suspect even achieving 100% test coverage would not have picked up the problem.
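Here's a minimal sketch of how a rollover like that could bite, assuming the power-on hours live in a signed 16-bit variable. This is not the actual SSD firmware, which isn't public; the function names are hypothetical. Converting an out-of-range value to `int16_t` is implementation-defined in C, and the wrap shown here is what GCC and Clang do.

```c
#include <stdint.h>

/* Hypothetical sketch: power-on hours kept in a signed 16-bit variable.
   This is NOT the actual SSD firmware, only an illustration of the
   suspected failure mode. */

/* Advance the counter by one hour. The addition is done in unsigned
   arithmetic to avoid undefined behavior; converting the result back to
   int16_t is implementation-defined in C and wraps to a negative value
   on common two's-complement compilers (GCC, Clang). */
int16_t next_hour(int16_t hours)
{
    uint16_t bumped = (uint16_t)((uint16_t)hours + 1u);
    return (int16_t)bumped;
}

/* Elapsed hours since an earlier reading, as naive code might compute it.
   Once the counter has wrapped, the result goes hugely negative. */
int32_t hours_since(int16_t now, int16_t earlier)
{
    return (int32_t)now - (int32_t)earlier;
}
```

With these definitions, `next_hour(32767)` yields -32768 on those compilers, so any code that assumes hours only ever increase sees time jump backwards. A test suite can hit every line of both functions without ever feeding in 32,767, which is why coverage alone wouldn't flag it.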

Test is just one of many filters needed to ensure correct code.

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes.

Attendees have blogged about the seminar, for example, here.

I'll present the Better Firmware Faster seminar in Melbourne and Perth, Australia, on February 20 and 26. All are invited. More info here. As I noted in a recent blog, I'm cutting back significantly on travel, so this will be my last trip to Australia. It's a beautiful country with great people, but a long way from my homestead in Maryland. |

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Mat Bennion raised one of the issues we hear about from time to time with the use of C:

|

Someone recently suggested to me that a good practise is to create a profusion of meaningful data types so that a static analyser can spot errors more easily. Imagine types such as tempC_t, tempF_t, tempK_t (for degrees Celsius, Fahrenheit and Kelvin): try to add two variables of different temperature types and you get a warning. Sounds great, but I can see downsides: you've created a whole new source of arguments in code reviews over the spelling and number of types (e.g. do we need types for each temperature, or just types that distinguish between temperature and pressure?); you need functions (or macros or suppression of static analyser warnings) to convert between types. I know that engineers will relish having arguments about whether Kelvin and Celsius should be directly mixable. It also risks general proliferation and confusion: on a large project, people will unintentionally create duplicate names (e.g. tempDegC_t, which is then not compatible with tempC_t). What do you or other readers think of this approach?

The benefits may depend on how low-level the code is: when writing device drivers, the most important thing to me is knowing the raw data type, so I just use simple Hungarian notation. When writing C#, the focus is on high-level types – I really don't care how the IPAddress class represents an IP address. |
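For readers who haven't seen the idea Mat describes, here is one way it is sometimes done in C. The type names `tempC_t` and `tempK_t` come from his note; the single-member struct wrapping and the conversion helpers are assumptions for illustration, not something he proposed. Wrapping each unit in a struct makes the compiler itself reject mixing, where plain typedefs of `double` would need a static analyser to notice.

```c
/* Sketch only: single-member structs make each unit a distinct type, so
   mixing them is a compile-time error rather than an analyser warning.
   Plain typedefs of double would NOT do this; C treats them as aliases. */
typedef struct { double value; } tempC_t;   /* degrees Celsius */
typedef struct { double value; } tempK_t;   /* kelvins */

/* Explicit conversions are the cost Mat mentions: every crossing between
   units needs a function (these names are hypothetical). */
static inline tempK_t tempC_to_K(tempC_t c)
{
    tempK_t k = { c.value + 273.15 };
    return k;
}

static inline tempC_t tempK_to_C(tempK_t k)
{
    tempC_t c = { k.value - 273.15 };
    return c;
}

/* Difference between two Celsius readings (same unit, so it is allowed). */
static inline double tempC_diff(tempC_t a, tempC_t b)
{
    return a.value - b.value;
}

/* tempC_t c = { 20.0 }; tempK_t k = c;   <- rejected: incompatible types */
```

The sketch also shows the downsides he raises: the helpers multiply with every new unit, and a colleague's `tempDegC_t` would be yet another incompatible struct.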

In a time when so many articles in the trade press are thinly-disguised press releases, it's important to acknowledge those pushing back against this sort of uber-marketing. Bill Schweber's articles in Electronic Design consistently provide information of use to engineers. They typically describe how some component or circuit works, like this week's excellent piece that describes flyback circuits. |

It's believed that, until the recent 737 MAX crashes, no one had ever lost a life in a commercial aircraft due to a software problem. Why is this?

No one really knows. All commercial aircraft software in the USA is crafted to DO-178C. Is it the use of this standard, or is it a safety culture that is willing to embrace the burdensome regs?

Regardless, few industries regularly achieve the high quality of code done to DO-178C. Harold Kraus sent in the following about the importance of requirements in avionics.

But first, a couple of definitions for those unfamiliar with DO-178C:

- DO-254: The avionics standard for electronic hardware

- DER: Designated Engineering Representative - an individual who may approve, or recommend that the FAA approves, the technical data produced.

Here's Harold's write-up:

|

What DO-178C wants from Reviews:

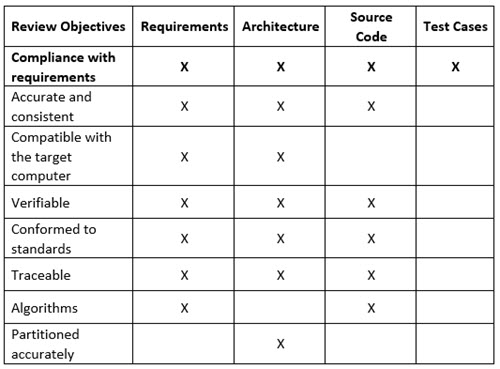

DO-178C describes three forms of verification: review, test, and analysis. The subject discussed here is review, which involves human judgment as opposed to mechanical testing and mathematical analysis.

A folk model of a review is to read through a document and look for grammatical errors and such; but, in DO-178C (and DO-254), all reviews for certification credit must be requirements-based (as are all tests and analyses).

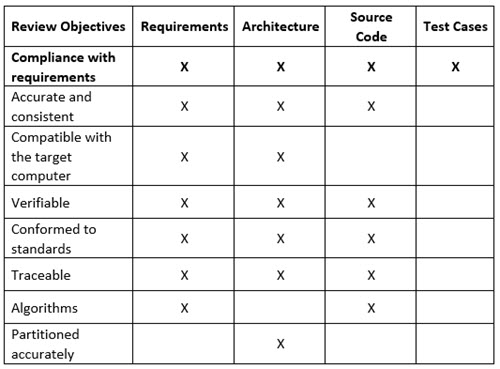

Review is a DO-178C process, and all processes have objectives defined within the document and tabularized in Annex A. The following table collects all of the objectives that DO-178C (Section 6) says that reviews of Requirements, Architecture, Source Code, and Test Cases should accomplish. In each of the rows following Compliance with requirements, insert "Requirements are …" or "… to requirements", and you get the full sense of the review objectives.

Grammar!? Heh! Fo-ormatting!? Heh! A reviewer craves not these things. An embedded software's strength flows from the Requirements!

The DO-178C Review is not about reading through the Word document looking for sp, ww, caps, etc. This is expected to happen as a matter of course. What matters is flow-down of traceable requirements in development -- you review requirements, source code, and test cases to prove that it happens.

My first DER told me, "You don't review reviews." The primary focus of DO-178C review is verifying requirements, and review reports do not define or implement requirements; they are the verification. (Yes, you do review test results for correctness and for explanation of all of the software's discrepancies during the requirements-based tests.)

FWIW, one avionics company calls/called these DO-178C reviews "Technical Reviews", which emphasizes that the focus is on technical correctness (as opposed to (just) grammar), so they explicitly enforce technical qualification in the assignment of participants to the review. Another avionics company calls these DO-178C reviews "Peer Reviews" to enforce that the reviewers have the same technical competency as that required of the author (many also insist that the code walkthrough reviewers be peer programmers). To this peer restriction, a DER would add that peer seniority is required for DO-178C Independence Objectives; there is no Independence when the reviewer has lower or higher technical or administrative seniority than the author. |

| Benchmarking Firmware Teams |

How does your team compare to others in the industry? Is it world-class, or sub-par?

Benchmarking is the practice of comparing some aspect of a business with other companies in the same industry. For instance, a business might average 46 days to collect a receivable. What does that mean? If the industry average is 35 days, 46 is nothing to brag about. If 80% of their competitors do worse, then 46 is a great number. It's likely going to be hard to gain much improvement.

I've advocated benchmarking firmware teams for many years. Alas, it's hard to get good numbers, and the standard deviations are high. But we do have some data. In my Better Firmware Faster class I stress the importance of engineering with numbers. As David Akin said: "Engineering is done with numbers. Analysis without numbers is only an opinion."

For instance, most teams average a 5 to 10% defect rate (50 to 100 defects per KLOC) after getting a clean compile and before any testing starts. Worst-in-class engineers generate 250 defects per KLOC. There's no measurable difference between C and C++. So for a 10 KLOC project, figure on chasing 500 to 1,000 problems.

Ever wonder why projects run late? Clearly these numbers are abysmal. Cut them by half and the schedule will improve dramatically.

Ada teams are about an order of magnitude better, and SPARK developers crush even Ada.

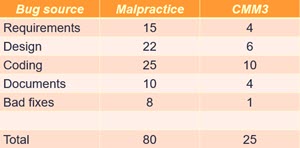

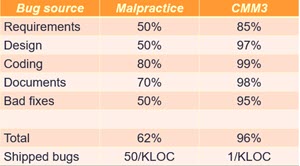

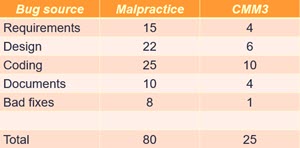

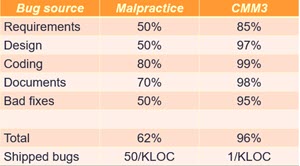

Where do bugs come from? This table shows defects/KLOC for a poorly-performing team, and one rated at Capability Maturity Level 3, considered a pretty disciplined outfit:

And here's where defects are removed:

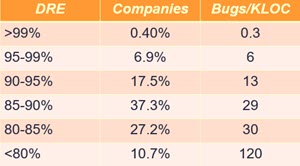

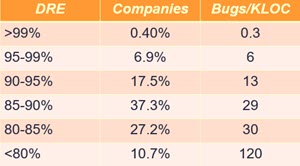

Defect Removal Efficiency (DRE) is one of those tortured terms academics love. It's merely the percentage of defects removed during engineering and up to 90 days post-initial delivery. You'd hope for 100%. Here's where the industry stands. "Bugs/KLOC" is the number of shipped bugs per thousand lines of code:

Poor-quality teams tend to rely mostly on test to find defects. Capers Jones identifies over 60 ways to minimize these, though no one does or should use all of those. I view this as a series of filters, each stage removing some percentage of problems. It has been shown that test, by itself, typically finds only about 50% of the defects.
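The filter idea is easy to put numbers on. The sketch below assumes each filter independently removes a fixed fraction of the defects that reach it. That independence is a simplification, and the function names are mine.

```c
#include <stddef.h>

/* Fraction of the original defects still present after running every
   filter in turn. efficiency[i] is the fraction (0.0 to 1.0) of incoming
   defects that filter i removes. Assumes the filters act independently. */
double fraction_remaining(const double *efficiency, size_t count)
{
    double remaining = 1.0;
    for (size_t i = 0; i < count; i++) {
        remaining *= 1.0 - efficiency[i];
    }
    return remaining;
}

/* Defect Removal Efficiency, in percent, for the whole chain. */
double dre_percent(const double *efficiency, size_t count)
{
    return 100.0 * (1.0 - fraction_remaining(efficiency, count));
}
```

With test alone at 0.5, `dre_percent` gives 50%. Put two earlier filters in front of it, say one that removes 60% and one that removes 30% (made-up figures, purely for illustration), and the chain reaches about 86%. No single stage has to be heroic if several are stacked.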

For more numbers, Capers Jones' books are a great resource. The sadly-defunct Crosstalk Magazine was also valuable.

How does your team measure up?

|

| Rust Redux |

Muse 387's comments about the Rust programming language generated a lot of discussion. Nick P's thoughts were useful:

|

Hey Jack, I just wanted to add a few things about the borrow checker. The errors it catches at compile time, temporal errors with pointers and concurrency, are the kinds that are hardest to debug. That makes fighting with it to prevent them more worthwhile. Previously, the way to get its guarantees with C code was using a tool such as Microsoft's VCC with separation logic. Early reports said verification experts averaged 2 LOC *per day* in productivity. So, just using Rust with learned heuristics for battling the borrow checker is a massive improvement for anyone wanting those guarantees. That's proven out by the piles of Rust libraries that borrow-check vs. the number of C libraries with formal proofs of the same properties. :)

Although I don't use it myself, here are some quick notes and links I got from Rust's community. Many people report that Entity-Component System design reduces battles with the borrow checker on top of its own benefits. Rust does support reference counting, too, if the borrow checker is too much trouble. Rust can also do unsafe. Like Ada, it's safe-by-default with the ability to turn it off where necessary (e.g. low-level or max-performance routines). Unsafe stuff can be wrapped with type-safe functions with checks on input. Macros can reduce boilerplate. Finally, there's a link with a pile of embedded stuff.

https://mmstick.keybase.pub/managing-memory-in-rust-ecs/

https://github.com/rust-embedded/awesome-embedded-rust

Note: Tock RTOS might especially interest you embedded folks given it mixes your interests with Rust's benefits. |

Tock is an RTOS written in Rust. The Case for Writing a Kernel in Rust is a worthwhile paper about how Rust is a good choice for RTOS work, though I stand by my thoughts in Muse 387. |

| More on R&D |

A number of people responded to last issue's comments on R&D.

Charles Manning wrote:

|

I agree with your general principles here, but, yes I think it is possible to do R&D in some circumstances.

It is possible to kick off with a product development where the science is already "good enough" to achieve a minimum viable product, but hope to make the product even better by doing a parallel bit of research.

If you do this then it is best to do the "Plan B" (MVP) development first, then the "Plan A" (stretch goal) development. This way you can meet your product development goals even if the research goes into the weeds.

If the research takes too long then you still have a product and can either abandon the research or push out a future FW release.

What does generally fail is that many project teams will try to do the "Plan A" development first. When they realise it is not going to deliver, then in a mad panic they try to bend the failed "Plan A" into "Plan B" - something that generally fails. |

From Mike Davis:

|

Re: "Of R&D" my career has more instances of a lesser version of the R vs. D problem you described. Most recently, my Product Manager (who defines product requirements at my company) often asks for new features that are radically different and therefore not well-supported in current code. So while I can see the possibility of doing it, schedule estimates are necessarily broad. Not as inherently un-schedulable as "research", but much less schedulable than variations of a theme that already exists in the codebase.

Per my previous response to "Honesty in Scheduling", I feel obliged to honestly tell him that's "an act of creation" and that it'll take longer, with a less certain end date, than he'd like. I use that phrase to avoid preconceived notions that come with "research", even though that's what I end up doing: researching what he really wants, which often feels as open-ended as curing cancer. 😉 I also try to offer him a variation of his new feature that's more in line with existing code, and therefore more quickly and surely implemented. We're rarely happy with the outcome, but that's the nature of compromise.

My overarching point here is that in the context of scheduling, R vs. D is not a sharp line but a gradient. As professionals, I feel we need to be conscious of where a given task lies on that gradient in order to provide honest schedule estimates, including confidence and risk. |

Rob Milne commented:

|

"Have you ever had a project disaster because you were doing R at the same time as D?"

Yup. I managed a project like that and it was painful to watch one of my firmware engineers get the blame for the shortcomings of an experimental in-house sensor that our researchers claimed to be superior to the tried and true sensor that was externally sourced. No matter how the developer tried to filter/fit the data or control the environment the test results were inconsistent and unsatisfactory. It was disillusioning to witness upper management using the failure of the project against the developer in performance reviews. |

Emil Imrith had a suggestion:

|

I agree about R&D. It is just not realistic. Development is a totally different mindset. This confusion of R and D as a sequential or parallel process has caused me [and, I believe, many others] an enormous waste of resources (money, time, you name it!). I think the idea behind R and D (R&D) as one process is to calm investors' anxiety by suggesting that a team is developing something that will soon be commercialized and generate revenue fast.

That's why in my opinion D is paired with R.

What if we came up with R&P (Research and Prototyping) instead? At least that has been my personal approach against R&D paradigms. |

|

| Jobs! |

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter.

Please keep it to 100 words. There is no charge for a job ad. |

| Joke For The Week |

These jokes are archived here.

bug, n: A son of a glitch. |

| About The Embedded Muse |

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com.

The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.

|

|