|

||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||

| Contents | ||||

| Editor's Notes | ||||

|

Over 400 companies and more than 7000 engineers have benefited from my Better Firmware Faster seminar held on-site, at their companies. Want to crank up your productivity and decrease shipped bugs? Spend a day with me learning how to debug your development processes. Attendees have blogged about the seminar, for example, here and here.

I'll present the Better Firmware Faster seminar in Melbourne and Perth, Australia February 20 and 26th. All are invited. More info here. As I noted in a recent blog, I'm cutting back significantly on travel, so this will be my last trip to Australia.

Arm has released a free e-book of interest to people integrating Cortex-M parts into their SoCs: System-on-Chip Design with Arm Cortex-M Processors.

Be careful what you read. Renesas's new S1JA SoC is based on the Cortex-M23 and appears to be a heck of a part, with just about every peripheral you can imagine. But a writeup on embedded.com reads, in part: "The microcontrollers' ultra-low power extends battery life for battery-operated portable and battery backup applications. The software standby mode consumes only 500 nA to enable 20-year battery-operated applications that spend extended periods in sleep mode."

Unless the "battery" is a radioisotope generator the 20-year claim exceeds the shelf-life of the batteries used in portable electronics. Getting years of life from a battery is a hard problem with many subtle issues. For more info see this (though that paper focuses on the CR2032 cell, the concepts scale to most battery types). |
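To put the quoted 500 nA figure in perspective, here is a rough sketch of the arithmetic in Rust. The function name `charge_mah` is made up for illustration, and the calculation assumes a constant standby current with nothing else drawing power, which no real product achieves.

```rust
/// Hours in an average year (365.25 days).
const HOURS_PER_YEAR: f64 = 365.25 * 24.0;

/// Charge, in mAh, drawn by a constant current (given in nA) over a
/// number of years. Hypothetical helper; it ignores self-discharge,
/// wake-ups, temperature and every other real-world effect.
pub fn charge_mah(current_na: f64, years: f64) -> f64 {
    let current_ma = current_na * 1e-6; // nA -> mA
    current_ma * years * HOURS_PER_YEAR
}
```

Calling `charge_mah(500.0, 20.0)` gives about 88 mAh. That is well under the roughly 220 mAh nominal capacity of a typical CR2032 (an assumed datasheet-style figure, not one from the article), which suggests raw capacity is not the first obstacle to a 20-year claim. Shelf life and the subtler effects are.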

||||

| Quotes and Thoughts | ||||

"It is better to define your system up front to minimize errors, rather than producing a bunch of code that then has to be corrected with patches on patches" Margaret Hamilton of Apollo Guidance Computer fame. |

||||

| Tools and Tips | ||||

|

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. Thor wrote:

|

||||

| Freebies and Discounts | ||||

This month's giveaway is a copy of Embedded Systems World-Class Designs.

Enter via this link. |

||||

| The Rust Programming Language | ||||

I'm getting a lot of email about the Rust language, in particular, in using Rust on embedded projects. There's a lot to like about the language. It superficially looks like C/C++. Here's an example factorial program, from the Wikipedia entry:

    fn factorial(i: u64) -> u64
    {
        let mut acc = 1;
        for num in 2..=i
        {
            acc *= num;
        }
        acc
    }

Where's the return argument? In Rust, if there's no return statement the last expression is the return value. I find that strangely appealing. The "let" keyword reminds me of the bad old days of Dartmouth BASIC.

Rust is used in embedded development, and the main site has a sub-page about this, with examples using a Cortex-M processor.

While C is a great language for low-level programming and embedded development, it lacks modern features like strong typing (and so much more) that help create correct programs. The SPARK people call this "correctness by construction." Ada, SPARK, and to a lesser extent Rust enforce rules which lead to safer code. Studies (e.g., Software Static Code Analysis Lessons Learned, Andy German, QinetiQ Ltd., Nov 2003 Crosstalk) show programs in Ada and SPARK have only a fraction of the defects found using C. To my knowledge there's no such data about Rust, but it seems reasonable that Rust code will be less error-prone than C code. I have seen no data on Rust's efficiency (in size and speed), but suspect it's similar to C.

However, as fascinating as it is to learn new languages, there's one place where Rust fails. It's evolving at a rapid pace, and there is no ANSI standard. That's generally not much of a problem in web development or for small embedded projects. But many embedded systems have lives of years and decades. In the railroad industry support has to be guaranteed for 30 years. What will Rust look like a decade or two hence? Will you be able to get a compiler that handles the old dialect? If you have hundreds of thousands of lines of Rust legacy code, reuse might be impossible if all of that is coded using a long-obsolete version.

C made its way into the embedded space in the 1980s. Lots of compilers abounded. None were compatible. Remember Manx C? That decade was a veritable Babel of incompatible Cs which was resolved only with the advent of standardization under ANSI C 89/90.

As technologists we want the best possible solution. Yet sometimes that has to take second place to business realities. Every engineering effort involves some amount of risk, but we do want to minimize that risk, especially if it could have devastating long-term consequences. Megabucks invested in developing code that can no longer be compiled is a risk that is not, in my opinion, worth taking.

I look forward to the day when Rust joins the stable of standardized languages, and have no doubt we'll see that happen in the next decade or so. Standardization efforts move slowly. ANSI spent six years working on the first C standard. |
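Returning to the factorial listing earlier in this section: `acc *= num` overflows a `u64` for any input above 20. By default Rust panics on that overflow in debug builds and silently wraps in release builds. As a small illustration of the "rules which lead to safer code" idea, here is a sketch of a variant that makes the overflow explicit. The name `checked_factorial` is my own; it does not appear in the Wikipedia entry.

```rust
/// Factorial that reports overflow as `None` instead of panicking
/// (debug builds) or wrapping (release builds).
/// Illustrative variant of the listing above, not the original code.
pub fn checked_factorial(n: u64) -> Option<u64> {
    let mut acc: u64 = 1;
    for num in 2..=n {
        // `checked_mul` returns None on overflow; `?` passes that None
        // straight back to the caller.
        acc = acc.checked_mul(num)?;
    }
    // No `return` keyword: the last expression is the return value.
    Some(acc)
}
```

The caller now has to handle the `None` case to get at the number, so the compiler, rather than a code review, is what flags a forgotten overflow check.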

||||

| Encoder Bouncing | ||||

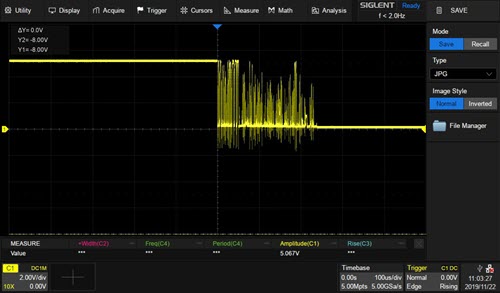

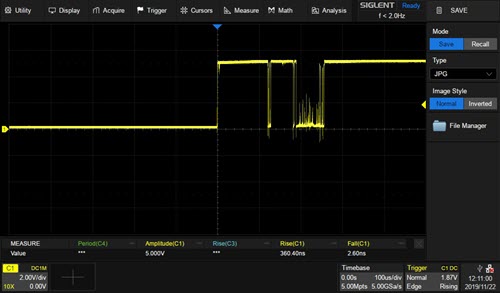

Recent Muses (TEM 384 and TEM 385) had discussions about encoders, which engendered quite a bit of interesting email correspondence. I decided to look at bounce characteristics of a few models. I picked low-cost devices as these are common in controls (e.g., radio volume controls). My focus was (mostly) on mechanical encoders, as it's reasonable to assume these will have significant bounce issues.

Bourns's PEC11R-4215F mechanical encoder gives 24 PPR (pulses per revolution). The datasheet specs 2 ms bounce though that is footnoted "Devices are tested using standard noise reduction filters." It's not clear to me if the 2 ms was with or without the filter, but the unit behaved much better than specified. With no filter, this is the worst bouncing I saw:

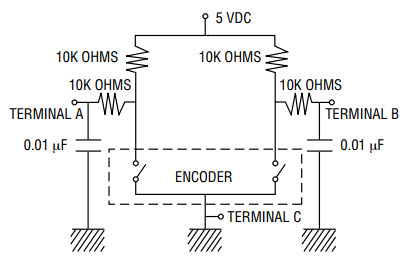

That's pretty ugly, but the bouncing lasted only about 250 µs. The datasheet recommends this circuit to clean up the noise:

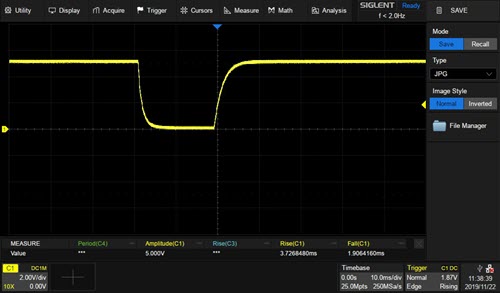

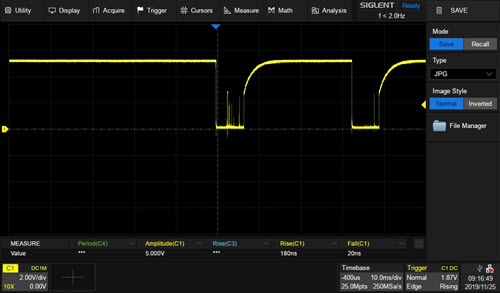

With it, I saw no bouncing. The resulting waveform gives rise and fall times greater than expected from the RC time constant (3.7 ms rise, 1.9 ms fall); presumably there's some internal resistance:

CTS's 288V232R161B2 mechanical encoder gives 4 PPR. 5 ms bounce is specified. No filter circuit is recommended. The worst bounce I saw was about 300 µs:

The recommended filter circuit is identical to the one Bourns suggests (see above). With it, well, the results were not great:

The scope is set to 10 ms/division. Some of those supposedly-filtered zits exceed 2V. Even a Schmitt trigger, like the 74HCT14, will pass these on as valid logic signals. At least the bounces are very narrow. One could write some code that rejects pulses shorter than a millisecond or so.

Just to put this data in perspective, I also tried an optical encoder, expecting clean outputs. And indeed, there was no bouncing. This is the Grayhill 62AG11-L0-P, which sports 32 PPR:

Note the slow rise and fall times, which I saw at times exceeding 8 ms. That is better, however, than the rated 30 ms worst-case numbers. I presume this is an artifact of the optical interrupter swinging into the light beam. Be sure to figure this into your max rotation speed. Assuming 30 ms rise time, and another 30 ms fall time, each pulse takes at least 60 ms, so 32 PPR needs at least 1.92 s per revolution. That caps the speed at roughly 0.5 rotations/second, and lower still once the signal has to dwell at a valid logic level.

I recently rented a Toyota; the radio was almost unusable as turning the tuning knob caused wild and unpredictable results. A clockwise rotation often increased the frequency, as expected, but sometimes the tuner jumped down or crazily up. One wonders if the engineers used an encoder yet didn't anticipate the bouncing. For ideas about debouncing, see this. |
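The pulse-rejection idea mentioned for the CTS encoder (ignore zits shorter than a millisecond or so) might be sketched like this in Rust. Everything here is hypothetical: the `GlitchFilter` name, the 1000 µs threshold used in the note below, and the assumption that the code is polled with a free-running microsecond counter often enough to see the glitches. It filters one encoder channel only and does not decode quadrature.

```rust
/// Rejects level changes that do not persist for `min_width_us`.
/// Hypothetical sketch: assumes `update` is called regularly (timer
/// tick or polling loop) with a free-running microsecond timestamp.
pub struct GlitchFilter {
    min_width_us: u64,
    output: bool,                  // last accepted (filtered) level
    pending_since_us: Option<u64>, // when the raw input first disagreed
}

impl GlitchFilter {
    pub fn new(initial: bool, min_width_us: u64) -> Self {
        GlitchFilter { min_width_us, output: initial, pending_since_us: None }
    }

    /// Feed one raw sample; returns the filtered level.
    pub fn update(&mut self, raw: bool, now_us: u64) -> bool {
        if raw == self.output {
            // Input agrees with the output again, so any pending
            // change was a glitch: forget it.
            self.pending_since_us = None;
        } else {
            // Start timing on the first disagreeing sample.
            let since = *self.pending_since_us.get_or_insert(now_us);
            // wrapping_sub tolerates a timestamp counter that rolls over.
            if now_us.wrapping_sub(since) >= self.min_width_us {
                self.output = raw;
                self.pending_since_us = None;
            }
        }
        self.output
    }
}
```

With `GlitchFilter::new(false, 1000)`, a high level that appears and vanishes within a few hundred microseconds never reaches the output, while one that persists for a full millisecond does. The price is a millisecond of added latency on every real edge, which has to be weighed against the encoder's PPR and the expected rotation speed.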

||||

| Of R&D | ||||

Research and Development. R&D. It's the lifeblood of tech companies, and it's what we engineers do all day, every day.

Nonsense. There's no such thing as R&D. There's R, and there's D, and the two are completely separate activities.

Research is all about discovering new things. It's the science that ultimately enables the products we build, the metaphorical man-behind-the-curtain pulling the levers to control the machines we create. Research might also involve discovering new algorithms, like new ways to smooth signals or compress data. "New" might mean new to us but not to the world. So we research an idea or a need, and then switch to development mode. The result of research is an approach that one can then implement.

Development is taking known ideas and using them to build products. That's the bulk of an engineer's work. We transform an algorithm to something physical, like converting a CRC algorithm to C code, to VHDL inside an FPGA, or to logic components.

One of my top ten reasons for failed projects is "bad science," or the inability to separate R from D. When a company starts building a product before really understanding what is being measured the schedule is doomed. Start coding an algorithm without it being sharply defined and, at best, you'll wander aimlessly till, with luck, settling on an approach that works.

Research simply can't be scheduled. If you don't believe that, please develop a (realistic) schedule for discovering the cure for cancer. You might be able to guesstimate simple research schedules, like doing a search for a known algorithm, but even that is, in my experience, very difficult to estimate. The first "Eureka" is often followed by disappointment when a little experimenting reveals some fatal flaw, requiring more research to find a better approach.

Yet I constantly see teams conflating R and D, leading inevitably to late or failed projects. Sure, there are some projects that necessarily pursue R and D in parallel. But those can rarely be scheduled with any accuracy.

What do you think? Have you ever had a project disaster because you were doing R at the same time as D? |

||||

| Jobs! | ||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad. |

||||

| Joke For The Week | ||||

These jokes are archived here. Standards, n.: The principles we use to reject other people's code. |

||||

| About The Embedded Muse | ||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |