|

||||||||||

You may redistribute this newsletter for non-commercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go here or drop Jack an email. |

||||||||||

| Editor's Notes | ||||||||||

|

Wouldn't you want to be in the top tier of developers? That is part of what my one-day Better Firmware Faster seminar is all about: giving your team the tools they need to operate at a measurably world-class level, producing code with far fewer bugs in less time. It's fast-paced, fun, and uniquely covers the issues faced by embedded developers. Information here shows how your team can benefit by having this seminar presented at your facility.

Thanks to everyone for filling out the salary survey. Due to travel I probably won't have the results for a few weeks, so I am planning to publish those in April. |

||||||||||

| Quotes and Thoughts | ||||||||||

"The history of the Internet shows that industry treats security as something that might be added later should the product garner a market base and in response to customer demands." - Hilarie Orman, IEEE Computer, October 2017, in an article provocatively titled "You Let That In?" |

||||||||||

| Tools and Tips | ||||||||||

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past. |

||||||||||

| Freebies and Discounts | ||||||||||

This month's giveaway is a copy of Jean Labrosse's excellent book "uC/OS-III, The Real-Time Kernel for the Kinetis ARM Cortex-M4."

It will close at the end of March, 2018. Enter via this link. |

||||||||||

| A Fire Code for Software? | ||||||||||

This week's quote (above) reminds me of the 1980 MGM Grand Hotel fire, in which 85 people were killed and 650 injured. The hotel didn't have sprinklers, as those would have added $200,000 to construction costs. Lawsuit payouts and reconstruction costs eventually totaled over $400 million. Fire protection is like security: the benefits aren't felt until disaster strikes.

[Image: MGM Grand Hotel burning]

Fires like the one at the MGM were once common occurrences. Sweeping fires are today so unusual that the once-dreaded word conflagration sounds quaint to our modern ears. Yet in 19th century America a city-burning blaze consumed much of a downtown area nearly every year.

Fire has been mankind's friend and foe since long before Homo sapiens or even Neanderthals existed. Researchers suspect proto-humans domesticated it some 790,000 years ago. No doubt in the early days small tragedies - burns and such - accompanied this new tool. As civilization dawned, and then the industrial revolution drove workers off the farm, closely-packed houses and buildings erupted into conflagration with heartrending frequency.

In 1835 a fire in lower Manhattan destroyed warehouses and banks, the losses bankrupting essentially every fire insurance company in the city. The same area burned again in 1845. Half of Charleston, SC burned in 1838. During the 1840s fire destroyed parts of Albany, Nantucket, Pittsburgh, and St. Louis. The next decade saw Chillicothe, OH, St. Louis (again), Philadelphia and San Francisco consumed by flames. Much of my hometown of Baltimore burned in 1904. San Francisco was hit again during the 1906 earthquake; that fire incinerated 4 square miles and is considered one of the world's worst fires.

Mrs. O'Leary's cow may or may not have started the Great Chicago Fire that took 300 lives in 1871 and left some 90,000 homeless. The largely wooden city had received only 2.5 inches of rain all summer, so it turned into a raging inferno advancing nearly as fast as people could flee. But Chicago wasn't the only Midwestern dry spot; on the very same day an underreported fire in Peshtigo, WI killed over 1000.

[Image: Great Chicago fire]

A year later Boston burned, destroying 8% of the capital of Massachusetts. 1889 saw the same part of Boston again ablaze.

Theaters succumbed to the flames with great regularity. Painted scrims, ropes, costumes, and bits of wood all littered the typical stage while a tobacco-smoking audience packed the buildings. In Europe and America 500 fires left theaters in ruins between 1750 and 1877. Some burned more than once: New York's oft-smoldering Bowery Theatre was rebuilt 5 times.

The historical record sheds little light on city-dwellers' astonishing acceptance of repeated blazes. By the 1860s fireproof buildings were well understood though rarely constructed. Owners refused to pay the slight cost differential. At the time only an architect could build a fireproof building because such a structure used somewhat intricate ironwork which required carefully measured drawings. Few developers then consulted architects, preferring instead to just toss an edifice up using a back-of-the-envelope design. Sound familiar?

Crude sprinklers came into being in the first years of the 19th century, yet it wasn't till 1885 that New York law required their use in theaters. But even those regulations were weak, reading "as the inspector shall direct." Inspectors' wallets fattened as corruption flourished. People continued to perish in horrific blazes.

The 1890 invention of the modern sprinkler reduced the cost of a fire to just 7% of that incurred in a building without the devices. As many as 150 theaters had them by 1905. Yet, as mentioned, nearly a century later the MGM Grand Hotel didn't have sprinklers. Though fire marshals had insisted that sprinklers be installed in the casino and hotel, local law didn't require them. The local law was changed the following year.

Fire codes evolved in a sporadic fashion. Before the Civil War only tenements in New York were required to have any level of fireproofing. But the New York Times made a ruckus over an 1860 tenement fire that eventually helped change the law to mandate fire escapes for some - not many - buildings. A fire at New York's Conway's Theatre in 1876 killed nearly 300 people and led to a more comprehensive building code in 1885. Thirteen years after the Great Fire, Chicago finally adopted the first of many new codes.

This legislation by catastrophe wasn't proactive enough to ensure the public safety. Consider the 1903 Iroquois theater fire in Chicago. Shortly before it opened, Captain Patrick Jennings of the local fire department made a routine inspection and found grave code violations. There were no sprinklers, no exit signs, no fire alarms. Standpipes weren't connected. Yet officials on the take allowed the theater to open. A month after the first performance 600 people were killed in a fast-moving fire. All of the doors were designed to open inwards. Hundreds died in the crush at the exits.

Actor Eddie Foy saved uncounted lives as he calmed the crowd from the stage. Ironically, he and Mrs. O'Leary had been neighbors; as a teenager he barely escaped the 1871 fire.

Afterwards a commission found fault with all parties, including the fire department: "They seemed to be under the impression that they were required only to fight flames and appeared surprised that their department was expected by the public to take every precaution to prevent fire from starting." I'll get back to that statement towards the end of this article.

Carl Prinzler had tickets for the performance but the press of business kept him away. He was so upset at the needless loss of life that he worked with Henry DuPont to invent the panic bar lock now almost universally used on doors in public spaces.

Fast forward 83 years. Dateline San Juan, 1986. 97 died in a blaze at the coincidentally named DuPont Plaza hotel; 55 of those were found in a mass at an inward-opening door. In 1981 48 people were lost in a Dublin disco fire because the Prinzler/DuPont panic bars were chained shut. In 1942 nearly 500 were killed in yet another fire at Boston's Cocoanut Grove nightclub; 100 of those were found piled up in front of inward-opening doors. Others died constrained by chained panic bars.

Many jurisdictions did learn important lessons from the Iroquois disaster but took too long to implement changes. Schools, for instance, modified buildings to speed escape and started holding fire drills. Yet 5 years after Iroquois a fire in Cleveland took the lives of 171 children and two teachers. The exit doors? They opened inwards.

Changes to fire codes came slowly and enforcement lagged. But the power of the press and public outrage should never be underestimated. The 1911 fire at New York's Triangle Shirtwaist Company was a seminal event in the history of codes. Flames swept through the company's facility on the 8th, 9th and 10th floors. Ladders weren't tall enough and the fire department couldn't fight it from the ground. 141 workers were killed; bodies plummeting to the ground eerily presaged 9/11.

[Image: Triangle Shirtwaist fire]

But at this point in American history reform groups had taken up the cause of worker protections. Lawmakers saw the issue as good politics. Demonstrations, editorials and activism in this worker-friendly political environment led to many fire code changes.

Though you'd think insurance companies would work for safer buildings, they had little interest in reducing fires or mortality. CEOs simply increased premiums to cover escalating losses. In the late 1800s mill owners struggling to contain costs established the Associated Factory Mutual Fire Insurance Companies, an amalgamated non-profit owned by the policyholders. It offered far lower rates for mills made to a standard, much safer, design.

The AFM created the National Board of Fire Underwriters to investigate fires and recommend better construction practices and designs. 1905 saw the first release of their Building Code. 6,700 copies of the first edition were distributed. Never static, it evolved as more was learned. Amendments made to the code after the Triangle fire, for instance, improved mechanisms to help people egress a burning building.

MIT-trained electrician William Merrill convinced other insurance companies to form a lab to analyze the causes of electrical fires. Incorporated in 1901 as the Underwriters' Laboratories, UL still sets safety standards and certifies products.

Our responses to fires, collapsing buildings and the other perils of industrialized life all seem to follow a similar pattern. At first there's an uneasy truce with the hazard. Inventors then create technologies to mitigate the problem, such as fire extinguishers and sprinklers. Sporadic but ineffective regulation starts to appear. Trade groups scientifically study the threat and learn reasonable responses. The press weighs in, as pundits castigate corrupt officials or investigative reporters seek a journalistic scoop. Finally, governments legislate standards. Always far from perfect, they do grow to accommodate a better understanding of the problem.

Which brings us to software.

Though computer programs aren't as yet as dangerous as fire, flaws can destroy businesses, upend elections and even kill. Faulty car code has killed and injured passengers. Software errors in radiotherapy devices have maimed and taken lives. Some jets can't fly without overarching software control. You can't open a newspaper without reading about the latest security breach, which often affects tens or hundreds of millions.

Why is there no fire code for software?

In the USA the feds currently mandate standards for some firmware. In Europe regulations are coming into place for data security. There are some documented processes for developing better code. But most of us play in a wildly unregulated and unconstrained space.

Firmware is at a point in time metaphorically equivalent to the fire industry in 1860. We have sporadic but mostly ineffective regulation. The press occasionally warms to a software crisis, but there's little furor over the state of the art.

Rest assured there will be a fire code for software. As more life- and mission-critical applications appear, as firmware dominates every aspect of our lives, and as bugs cause some horrible disasters, the public will no longer tolerate errors and crashes. For better or worse, our representatives will see the issue as good politics. Legislation by catastrophe drove the fire codes, and will drive the software codes.

Just as certain software technologies lead to better code, the technology of fireproofing was well understood long before ordinances required its use. The will to employ these techniques lagged, as it does for software today.

There's a lot of snake oil peddled for miracle software cures. Common sense isn't one of them. I have visited CMM level 5 companies (the highest level of certification, one that costs megabucks and many years to achieve) where too many of the engineers had never heard of peer reviews. These are required at level 3 and above. Clearly the leaders were perverting what is a fairly reasonable, though heavyweight, approach to software engineering. Such behavior stinks of criminal negligence. It's like bribing the fire marshal.

I quoted the Iroquois fire's report earlier. Here's that sentence again, with a few parallels to our business in parentheses: "They (the software community) seemed to be under the impression that they were required only to fight flames (bugs) and appeared surprised that their department was expected by the public to take every precaution (inspections, careful design, encapsulation, and so much more) to prevent fire (errors) from starting."

I collect software disasters, and have files bulging with examples that all show similar patterns. Inadequate testing, uninspected code, shortcutting the design phase, lousy exception handlers and insane schedules are responsible for many of the crashes. We all know these things, yet seem unable to benefit from this knowledge. I hope it doesn't take us 790,000 years to institute better procedures and processes for building great firmware.

Do you want fire codes for software? The techie and libertarian in me screams "never!" But perhaps that's the wrong question. Instead ask "do I want conflagrations? Software disasters, people killed or maimed by my code, systems inoperable, customers angry?"

No software engineering methodology will solve all of our woes. But continuing to adhere to ad hoc, chaotic processes guarantees we'll continue to ship buggy code.

When I was researching this, a firefighter left me with this chilling thought: "I actually find bad software even more dangerous than fire, as people are already afraid of fire, but trust all software."

|

||||||||||

| More on Bugs and Errors | ||||||||||

Many readers responded to the article "Is It a Bug or an Error?" in the last issue. Benjamin Noack sent a link to an article about how the Space Shuttle's code was written. Michael Covington wrote:

Sergio Caprile commented on dyslexia and programming:

Ray Keefe contributed:

From Rod Main:

Rob Aberg tied comments about Ada/SPARK to the bug discussion:

|

||||||||||

| This Week's Cool Product | ||||||||||

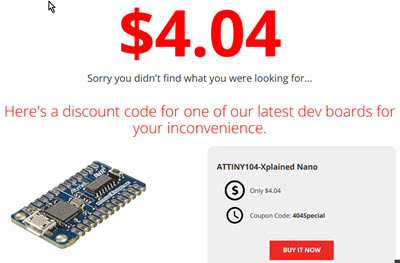

Microchip has an interesting new part called the ATSAMA5D27C-D1G-CU System in Package (SiP). Near as I can tell it's a Cortex A5 with DDR2 memory integrated onto it. That's important because laying out high-speed interconnects on a PCB can be difficult. Now, that's about all I can find out, since (as of this writing) the link provided gives a 404 error. But this is the classiest 404 I've ever seen - they offer a $4.04 discount on an ATTINY board for the inconvenience of being directed to a missing page!

Kudos, Microchip!

Note: This section is about something I personally find cool, interesting or important and want to pass along to readers. It is not influenced by vendors. |

||||||||||

| Jobs! | ||||||||||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words. There is no charge for a job ad.

|

||||||||||

| Joke For The Week | ||||||||||

Note: These jokes are archived at www.ganssle.com/jokes.htm.

You might be an engineer if:

|

||||||||||

| Advertise With Us | ||||||||||

Advertise in The Embedded Muse! Over 27,000 embedded developers get this twice-monthly publication. |

||||||||||

| About The Embedded Muse | ||||||||||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |