
You may redistribute this newsletter for noncommercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go to https://www.ganssle.com/tem-subunsub.html or drop Jack an email.


Editor's Notes

Are you happy with your bug rates? If not, what are you doing about it? Are you asked to do more with less? Deliver faster, with more features? What action are you taking to achieve those goals? In fact it IS possible to accurately schedule a project, meet the deadline, and drastically reduce bugs. Learn how at my Better Firmware Faster class, presented at your facility. See https://www.ganssle.com/onsite.htm.

Quotes and Thoughts

"PHP is a minor evil perpetrated and created by incompetent amateurs, whereas Perl is a great and insidious evil, perpetrated by skilled but perverted professionals." - Jon Ribbens

Tools and Tips

Please submit clever ideas or thoughts about tools, techniques and resources you love or hate. Here are the tool reviews submitted in the past.

Salary Survey Results

The results are in. You can access the results here. The three winners of my book, The Art of Designing Embedded Systems, are Josh Jordan, Tony Williamitis and Jeff Dombach. Thanks to everyone for participating.

A Plea to Compiler Vendors

We still think of a compiler as a translator, something that converts a C function to object code, when in fact it could - and should - be much more. Embedded systems have their own unique demands, many of which have no parallel in the infotech world. I'm constantly astonished that the language products we buy largely don't recognize these differences.

Budding programmers in college courses learn to write correct code; they rarely learn to write fast code till confronted with an application rife with performance problems. Yet embedded is the home of real-time. A useful system must be both correct and fast enough; neither attribute is adequate by itself.

The sad fact is that the state of compiler technology today demands we write our code, cross compile it, and then run it on the real target hardware before we get the slightest clue about how well it will perform. If things are too slow, we can only make nearly random changes to the source and try again, hoping we're tricking the tool into generating better code.

Embodied in the wizardry of the compiler are all of the tricks and techniques it uses to create object code. Please, Mr. Compiler Vendor, remove the mystery from your tool! Give us feedback about how our real time code will perform. Give us a compiler listing file that tags each line of code with expected execution times. Is that floating point multiply going to take a microsecond or a thousand times that? Tell me! Though the tool doesn't know how many times a loop will iterate, it surely has a pretty good idea of the cost per iteration. Tell me!

Even decades ago assemblers produced listings that gave the number of T-states per instruction. The developer could then sum these and multiply by the number of passes through a loop. Why don't C compilers do the same sort of thing? When a statement's execution time might cover a range of values depending on the nature of the data, give me a range of times.
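The hand arithmetic those old assembler listings enabled is simple enough to sketch. The snippet below is a minimal illustration, assuming a hypothetical table of best- and worst-case cycle counts for one loop body (the mnemonics and numbers are made up, not taken from any real CPU) and a clock rate the developer supplies. It sums the cycles per pass, multiplies by the number of passes, and converts to microseconds, reporting bounds rather than one number when an instruction's timing depends on the data.

```python
# Hypothetical loop body: (mnemonic, best-case cycles, worst-case cycles).
# The numbers are illustrative only; real values come from the CPU's
# datasheet or an assembler listing.
LOOP_BODY = [
    ("load",   7,  7),
    ("add",    4,  4),
    ("inc",    6,  6),
    ("branch", 8, 13),  # data dependent: e.g. branch not taken vs. taken
]


def loop_time_us(body, passes, clock_hz):
    """Return (best, worst) bounds on execution time, in microseconds, for
    `passes` trips through `body` at `clock_hz`. Ignores wait states,
    interrupts and pipeline effects."""
    best_cycles = sum(best for _, best, _ in body) * passes
    worst_cycles = sum(worst for _, _, worst in body) * passes
    return best_cycles * 1e6 / clock_hz, worst_cycles * 1e6 / clock_hz


if __name__ == "__main__":
    # Assumed: 100 passes on a 4 MHz part.
    best, worst = loop_time_us(LOOP_BODY, passes=100, clock_hz=4_000_000)
    print(f"{best:.1f} to {worst:.1f} us")
```

With these invented numbers the loop costs 25 to 30 cycles per pass, so 100 passes at 4 MHz land between 625 and 750 microseconds. A compiler could emit exactly this kind of per-line data in its listing.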
Better yet, give me the range with some insight, like "for small values of x expect twice the execution time". When the code invokes a vendor-supplied runtime function, highlight that call in red in an assembler window. If I click on it, pop up a pseudo-code description of the routine that includes performance data. Clearly, some runtime functions are very data-dependent. A string concatenate's performance is proportional to the size of the strings - give me the formula so I can decide up-front, before starting test, how it will perform in the time domain.

I'm a passionate believer in the benefit of code inspections, yet even the best inspection process is stymied by real time issues. "Jeez, this code sure looks slow" is hardly helpful and just not quantitative enough for effective decision-making. Better: bring the compiler output that displays time per statement.

The vendors will complain that without detailed knowledge of our target environment it's impossible to convert T-states into time. Clock rate, wait states, and the like all profoundly influence CPU performance. But today we see an ever-increasing coupling between all of our tools. A lot of compiler/linker/locator packages practically auto-generate the setup code for chip selects, wait states and the like. We can - and often already do - provide this information.

Timing is getting more complex. Modern 32 bit MCUs have tremendously complex buses that make it tough to understand performance issues. The tools are already processor-aware; let us feed them some setup data and they should spit out execution times. We can then diddle wait states versus clock speed and see the impact long before building target hardware. Pipelines and prefetchers further cloud the issue and add some uncertainty to the time per instruction, but the compiler can still give a pretty reasonable estimate of performance. Very rarely do we look for the last one percent of accuracy when assessing the time aspects of a system.
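To make the waits-versus-clock trade concrete, here is a toy model under loudly stated assumptions: every bus access costs a fixed number of extra wait-state cycles, and the routine's core cycle count and number of memory accesses are known (both figures below are hypothetical). Real parts with caches, pipelines and prefetchers won't follow so simple a formula, but the sketch shows how a little setup data could turn cycle counts into time.

```python
def exec_time_us(core_cycles, bus_accesses, wait_states, clock_hz):
    """Toy timing model: each bus access adds `wait_states` cycles on top of
    the routine's core cycle count. Ignores caches, pipelines and
    prefetch, so treat the result as an estimate, not a guarantee."""
    total_cycles = core_cycles + bus_accesses * wait_states
    return total_cycles * 1e6 / clock_hz


if __name__ == "__main__":
    # Hypothetical routine: 10,000 core cycles, 4,000 memory accesses.
    # Compare a slower clock with zero-wait memory against a faster clock
    # that needs two wait states on the same memory.
    slow = exec_time_us(10_000, 4_000, wait_states=0, clock_hz=20_000_000)
    fast = exec_time_us(10_000, 4_000, wait_states=2, clock_hz=32_000_000)
    print(f"20 MHz, 0 waits: {slow:.1f} us")
    print(f"32 MHz, 2 waits: {fast:.1f} us")
```

With these made-up numbers the 20 MHz, zero-wait configuration finishes in 500 microseconds while the 32 MHz, two-wait one takes 562.5, so the faster clock loses. That is the kind of surprise worth seeing before the board is built.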
Give me data accurate to 20% or so and then I can make intelligent decisions about algorithm selection and implementation.

A truly helpful compiler might even tag areas of concern. A tool that told us "This sure is an expensive construct; try xxxx" would no longer be a simple code translator; it would be an intelligent assistant, an extension of my thinking process, that helps me deal with the critical time domain of my system very efficiently.

Obviously this sort of time information won't be exactly accurate, since no function is an island. Even with perfect timing data for each function, interrupts and multitasking will skew real-world results. But it would be useful, painting a picture of what we can expect.

Compilers help us with many procedural programming issues, catching syntactical errors as well as warning us about iffy pointer use and other subtle problem areas. They should be equally helpful about real time issues.
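An estimate good to 20% or so is enough for a go/no-go call. The sketch below is one hypothetical way a tool might "tag areas of concern"; it does not describe any existing compiler feature. It assumes made-up per-statement estimates, a made-up 1 ms deadline, and an arbitrary rule that any single statement costing more than a quarter of the deadline deserves a flag. It widens the total by ±20% and reports whether even the pessimistic figure fits.

```python
TOLERANCE = 0.20  # assumed +/-20% accuracy of the estimates


def check_budget(estimates_us, deadline_us, tolerance=TOLERANCE):
    """Given per-statement estimates (label -> microseconds), return
    (low, high, fits, hot): low/high bound the total under the tolerance,
    fits says whether the high bound meets the deadline, and hot lists
    statements costing more than a quarter of the deadline (an arbitrary
    threshold chosen for illustration)."""
    total = sum(estimates_us.values())
    low, high = total * (1 - tolerance), total * (1 + tolerance)
    hot = [label for label, t in estimates_us.items() if t > deadline_us / 4]
    return low, high, high <= deadline_us, hot


if __name__ == "__main__":
    # Hypothetical per-statement estimates for one control-loop pass.
    estimates = {
        "read ADC": 40.0,
        "float multiply": 300.0,
        "filter update": 150.0,
        "write DAC": 20.0,
    }
    low, high, fits, hot = check_budget(estimates, deadline_us=1000.0)
    print(f"total {low:.0f} to {high:.0f} us, fits: {fits}, hot: {hot}")
```

Here the loop fits with room to spare, yet the floating point multiply alone eats nearly a third of the budget, exactly the line a developer would want highlighted.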

The History of the Microprocessor - Part 3

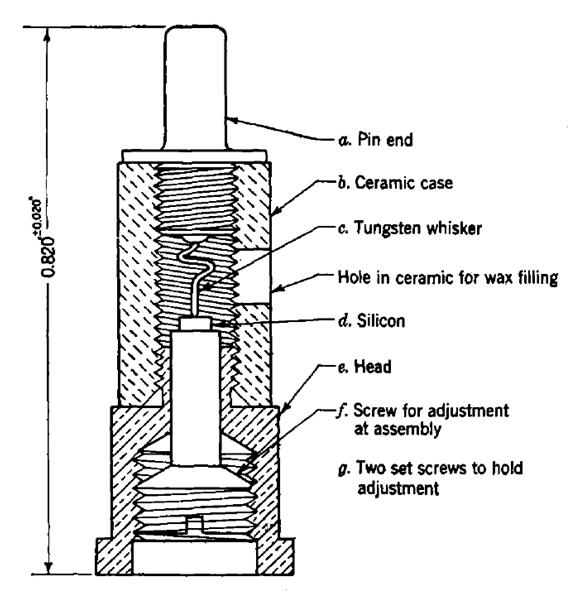

Last issue (https://www.ganssle.com/tem/tem256.html) I ran parts one and two of my history of the microprocessor. Here's part 3 of 4.

The Semiconductor Revolution

"We're on track, by 2010, for 30-gigahertz devices, 10 nanometers or less, delivering a tera-instruction of performance." - Pat Gelsinger, Intel, 2002

We all know how in 1947 Shockley, Bardeen and Brattain invented the transistor, ushering in the age of semiconductors. But that common knowledge is wrong. Julius Lilienfeld patented devices that resembled field effect transistors (though they were based on metals rather than modern semiconductors) in the 1920s and 30s (he also patented the electrolytic capacitor). Indeed, the USPTO rejected early patent applications from the Bell Labs boys, citing Lilienfeld’s work as prior art.

Semiconductors predated Shockley et al by nearly a century. Karl Ferdinand Braun found in 1874 that some crystals conducted current in only one direction. Indian scientist Jagadish Chandra Bose used crystals to detect radio waves as early as 1894, and Greenleaf Whittier Pickard developed the cat’s whisker diode. Pickard examined 30,000 different materials in his quest to find the best detector, rusty scissors included. Like thousands of others, as a kid I built an AM radio using a galena cat’s whisker and a coil wound on a Quaker Oats box, though by then everyone was using modern diodes.

As I noted last issue, RADAR research during World War II made systems that used huge numbers of vacuum tubes both possible and common. But that work also led to practical silicon and germanium diodes. These mass-produced elements had a chunk of semiconducting material that contacted a tungsten whisker, all encased in a small cylindrical cartridge. At assembly time workers tweaked a screw to adjust the contact between the silicon or germanium and the whisker. With part numbers like 1N21, these were employed in the RADAR sets built by MIT’s Rad Lab and other vendors.
Volume 15 of MIT’s Radiation Laboratory Series, titled "Crystal Rectifiers," shows that quite a bit was understood about the physics of semiconductors during the War. The title of volume 27 tells a lot about the state of the art of computers: "Computing Mechanisms and Linkages."

A crystal rectifier circa 1943. From volume 15 of MIT’s Radiation Laboratory Series. It’s a bit under an inch long.

Early tube computers used crystal diodes. Lots of diodes: the ENIAC had 7,200, Whirlwind twice that number. I have not been able to find out anything about what types of diodes were used or the nature of the circuits, but imagine something like 60s-era diode-transistor logic.

While engineers were building tube-based computers, a team led by William Shockley at Bell Labs researched semiconductors. John Bardeen and Walter Brattain created the point contact transistor in 1947, but did not include Shockley's name on the patent application. Shockley, who was as irascible as he was brilliant, went off in a huff and invented the junction transistor. One wonders what he would have invented had he been really slighted.

Point contact versions did go into production. Some early parts had a hole in the case; one would insert a tool to adjust the pressure of the wire on the germanium. So it wasn't long before the much more robust junction transistor became the dominant force in electronics. By 1953 over a million were made; four years later production increased to 29 million. That’s about the number of transistors in a single Pentium III of 2000. The first commercial part was probably the CK703, which became available in 1950 for $20 each, or $188 in today’s dollars. Imagine that... two hundred bucks for a single transistor. A typical smart phone would have 20 trillion dollars worth of transistors in it.

Meanwhile tube-based computers were getting bigger and hotter, and sucked ever more juice. The same University of Manchester which built the Baby and Mark 1 in 1948 and 1949 got a prototype transistorized machine going in 1953, and the full-blown model running two years later. With a 48 (some sources say 44) bit word, the prototype used only 92 transistors and 550 diodes!
Even the registers were stored on drum memory, but it’s still hard to imagine building a machine with so few active elements. The follow-on version used just 200 transistors and 1300 diodes, still no mean feat. (Both machines did employ tubes in the clock circuit.) But tube machines were more reliable, as this computer ran only about an hour and a half between failures. Though deadly slow, it demonstrated a market-changing feature: just 150 watts of power were needed. Compare that to the 25 KW consumed by the Mark 1. IBM built an experimental transistorized version of their 604 tube computer in 1954; the semiconductor version ate just 5% of the power needed by its thermionic brother. (The 604 was more calculator than computer.)

The first completely-transistorized commercial computer was the, well, a lot of machines vie for credit and the history is a bit murky. Certainly by the mid-50s many became available.

Last month I claimed the Whirlwind was important at least because it spawned the SAGE machines. Whirlwind also inspired MIT’s first transistorized computer, the 1956 TX-0, which used an 18 bit word. Ken Olsen, one of DEC's founders, was responsible for the TX-0's circuit design. DEC's first computer, the PDP-1, was largely a TX-0 in a prettier box. Throughout the 60s DEC built a number of different machines with the same 18 bit word.

The TX-0 was a fully parallel machine in an era when serial was common. (A serial computer processed a single bit at a time through the ALU.) Its 3600 transistors, at $200 a pop, cost about a megabuck. And all were enclosed in plug-in bottles, just like tubes, as the developers feared a high failure rate. But by 1974, after 49,000 hours of operation, fewer than a dozen had failed. The official biography of the machine (RLE Technical Report No. 627) contains tantalizing hints that the TX-0 may have had 100 vacuum tubes, and the 150 volt power supplies it describes certainly align with vacuum tube technology.
IBM's first transistorized computer was the 1958 7070. This was the beginning of the company's important 7000 series, which dominated mainframes for a time. A variety of models were sold, with the 7094 for a time holding the "fastest computer in the world" title. The 7094 used over 50,000 transistors. Operators would use another, smaller computer to load a magnetic tape with many programs from punched cards, and then mount the tape on the 7094. We had one of these machines my first year in college. Operating systems didn't offer much in the way of security, and we learned to read the input tape and search for files with grades.

The largest 7000-series machine was the 7030 "Stretch," a $100 million (in today's dollars) supercomputer that wasn't super enough. It missed its performance goals by a factor of three, and was soon withdrawn from production. Only 9 were built. The machine had a staggering 169,000 transistors on 22,000 individual printed circuit boards. Interestingly, in a paper named The Engineering Design of the Stretch Computer, the word "millimicroseconds" is used in place of "nanoseconds."

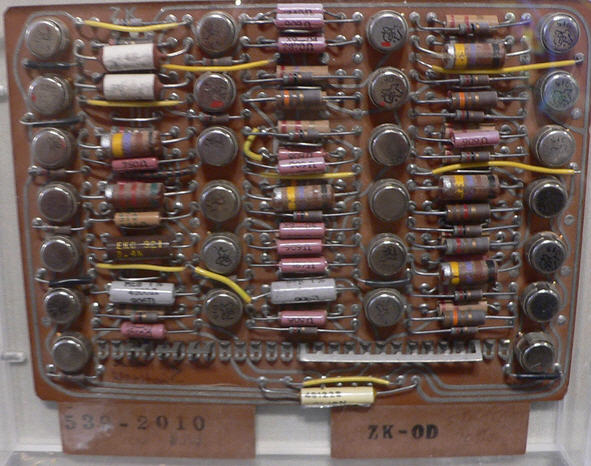

Circuit board from the 7030. It appears to have 26 transistors.

While IBM cranked out their computing behemoths, small machines gained in popularity. Librascope's $16k ($118k today) LGP-21 had just 460 transistors and 300 diodes, and came out in 1963, the same year as DEC's $27k PDP-5. Two years later DEC produced the first minicomputer, the PDP-8, which was wildly successful, eventually selling some 300,000 units in many different models. Early units were assembled from hundreds of DEC's "flip chips," small PCBs that used diode-transistor logic with discrete transistors. A typical flip chip implemented three two input NAND gates. Later PDP-8s used ICs; the entire CPU was eventually implemented on a single integrated circuit.

But whoa! Time to go back a little. Just think of the cost and complexity of the Stretch. Can you imagine wiring up 169,000 transistors? Thankfully Jack Kilby and Robert Noyce independently invented the IC in 1958/9. The IC was so superior to individual transistors that ICs soon formed the basis of most commercial computers.

Actually, that last clause is not correct. ICs were hard to get. The nation was going to the moon, and by 1963 the Apollo Guidance Computer used 60% of all of the ICs produced in the US, with per-unit costs ranging from $12 to $77 ($88 to $570 today) depending on the quantity ordered. One source claims that the Apollo and Minuteman programs together consumed 95% of domestic IC production. Every source I've found claims that all of the ICs in the Apollo computer were identical: 2800 dual three input NOR gates, using three transistors per gate. But the schematics show two kinds of NOR gates, "regular" versions and "expander" gates.

The market for computers remained relatively small till the PDP-8 brought prices to a more reasonable level, but the match of minis and ICs caused costs to plummet. By the late 60s everyone was building computers. Xerox. Raytheon (their 704 was possibly the ugliest computer ever built).
Interdata. Multidata. Computer Automation. General Automation. Varian. SDS. A complete list would fill a page.

Minis created a new niche: the embedded system, though that name didn't surface for many years. Labs found that a small machine was perfect for controlling instrumentation, and you’d often find a rack with a built-in mini that was part of an experimenter's equipment.

The PDP-8/E was typical. Introduced in 1970, this 12 bit machine cost $6,500 ($38k today). Instead of hundreds of flip chips the machine used a few large PCBs with gobs of ICs to cut down on interconnects. Circuit density was just awful compared to today. The technology of the time was small scale ICs, which contained a couple of flip flops or a few gates, and medium scale integration. An example of the latter is the 74181 ALU, which performed simple math and logic on a pair of four bit operands. Amazingly, TI still sells the military version of this part. It was used in many minicomputers, such as Data General's Nova line and DEC's seminal PDP-11.

The PDP-11 debuted in 1970 for about $11k with 4k words of core memory. Those who wanted a hard disk shelled out more: a 256KW disk with controller ran an extra $14k ($82k today). At that rate today's $59 terabyte drive would have cost something like $150 billion. Experienced programmers were immediately smitten with the PDP-11's rich set of addressing modes and completely orthogonal instruction set. Most prior, and too many subsequent, ISAs were constrained by the costs and complexity of the hardware, and were awkward and full of special cases. A decade later IBM incensed many by selecting the 8088, whose instruction set was a mess, over the orthogonal 68000, which in many ways imitated the PDP-11. Around 1990 I traded a case of beer for a PDP-11/70, but eventually was unable to even give it away.

Minicomputers were used in embedded systems even into the 80s. We put a PDP-11 in a steel mill in 1983.
It was sealed in an explosion-proof cabinet and interacted with Z80 processors. The installers had for reasons unknown left a hole in the top of the cabinet. A window in the steel door let operators see the machine’s controls and displays. I got a panicked 3 AM call one morning: someone had cut a water line in the ceiling. Not only were the computer's lights showing through the window; so was the water level. All of the electronics were submerged. I immediately told them the warranty was void, but over the course of weeks they dried out the boards and got it working again.

I mentioned Data General: they were probably the second most successful mini vendor. Their Nova was a 16 bit design introduced a year before the PDP-11, and it was a pretty typical machine in that the instruction set was designed to keep the hardware costs down. A bare-bones unit with no memory ran about $4k, lots less than DEC's offerings. In fact, early versions used a single 74181 ALU with data fed through it a nibble at a time. The circuit boards were 15" x 15", just enormous, populated with a sea of mostly 14 and 16 pin DIP packages. The boards were two layers, and often had hand-strung wires where the layout people couldn't get a track across the board.

Though a 16 bit machine, the Nova was peculiar in that it could address only 32K words. Bit 15, if set, meant the data was an indirect address (in modern parlance, a pointer). It was possible to cause the thing to indirect forever.

Before minis few computers had a production run of even 100 (IBM’s 360 was a notable exception). Some minicomputers, though, were manufactured in the tens of thousands. Those quantities would look laughable when the microprocessor started the modern era of electronics.

Next installment: Microprocessors Change the World.
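Pushing 16 bit words through a single 4 bit 74181 a nibble at a time is easy to model. This is a simplified sketch, not a model of the Nova's actual control logic: only the adder function of the ALU is represented, and a 4 bit slice is applied four times, least significant nibble first, with the carry saved between passes. Setting the slice width to 1 gives the bit-serial scheme the older serial machines used.

```python
def slice_add(a, b, carry_in, width):
    """One pass through a `width`-bit adder slice (only the add function of
    an ALU like the 74181 is modeled). Returns (sum_bits, carry_out)."""
    mask = (1 << width) - 1
    total = (a & mask) + (b & mask) + carry_in
    return total & mask, total >> width


def serial_add(a, b, word_bits=16, slice_bits=4):
    """Add two word_bits-wide values by feeding them through a slice_bits-wide
    adder one slice at a time, least significant slice first, saving the
    carry between passes. word_bits is assumed to be a multiple of
    slice_bits. Returns (sum, final_carry)."""
    result, carry = 0, 0
    for shift in range(0, word_bits, slice_bits):
        s, carry = slice_add(a >> shift, b >> shift, carry, slice_bits)
        result |= s << shift
    return result, carry


if __name__ == "__main__":
    # 0x0FFF + 0x0001 ripples a carry through three nibble passes.
    print([hex(v) for v in serial_add(0x0FFF, 0x0001)])
```

The price of the narrow ALU is time: a 16 bit add takes four trips through the slice instead of one, which is the classic hardware-cost versus speed trade the minicomputer designers kept making.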

Jobs!

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intents of this newsletter. Please keep it to 100 words.

Joke For The Week

Note: These jokes are archived at www.ganssle.com/jokes.htm.

If the odds are a million to one against something bad occurring, chances are 50-50 it will.

Advertise With Us

Advertise in The Embedded Muse! Over 23,000 embedded developers get this twice-monthly publication.

About The Embedded Muse

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster.