|||

You may redistribute this newsletter for noncommercial purposes. For commercial use contact jack@ganssle.com. To subscribe or unsubscribe go to https://www.ganssle.com/tem-subunsub.html or drop Jack an email. |

|||

| Contents | |||

| Editor's Notes | |||

Did you know it IS possible to create accurate schedules? Or that most projects consume 50% of the development time in debug and test, and that it’s not hard to slash that number drastically? Or that we know how to manage the quantitative relationship between complexity and bugs? Learn this and far more at my Better Firmware Faster class, presented at your facility. See https://www.ganssle.com/onsite.htm for details.

Last year was the 40th anniversary of the introduction of the first commercially successful microprocessor. I did a write-up about the history of the micro for Embedded Systems Design, and have recently consolidated and updated it here.

I've done the same for an article about the nuances of scope and logic analyzer probes. Probes are not wires; they have electrical effects of their own that can cause your circuit to malfunction. |

|||

| Quotes and Thoughts | |||

“Data is like garbage. You had better know what you are going to do with it before you collect it.” -Mark Twain |

|||

| Tools and Tips | |||

Please submit neat ideas or thoughts about tools, techniques and resources you love or hate.

Dave Kellogg had some thoughts about Royce Muchmore’s point about keeping Internet links (favorites) somewhere “in the cloud”:

Generalizing Royce’s problem statement somewhat, I’ve tried various mechanisms to store tidbits and points of information. When I find something interesting, either on the web or elsewhere, I’d like a “knowledge base” to store it in, so I can locate it relatively easily at some later time.

Presently, I have a directory that contains a huge number of Word files. For each factoid I encounter, I create a new file containing the link, and perhaps a short synopsis. Word is more convenient than plain text, because I can insert graphics, tables, text, links, etc. as needed. I name the file with a string of keywords that I associate with the topic.

To later “mine” the knowledge base, I search on the file name (or within the file contents) using FileLocator Pro (www.mythicsoft.com, $39). FileLocator supports regular expressions for searching both file names and file content. It also allows searching within a previous search’s results. If a search returns two or more similar “hits”, I try to take the time to merge them by hand if they are redundant.

But like Royce, I’m somewhat unhappy with the approach, because there is no hierarchy or structure to the knowledge. I suppose I could use a directory tree to group related items, and search the entire tree. For cloud-based access, perhaps this mechanism could be extended to store the files on something like Google Drive. I’d like to hear about any better lightweight solutions for recording and searching a growing personal knowledge base. |
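Dave's scheme of keyword-laden file names, searched with a regular expression that can then be re-run over its own results, is easy to sketch. The minimal C version below assumes a POSIX system and uses the `<regex.h>` API (`regcomp`, `regexec`, `regfree`); `filter_names()` and the sample file names are hypothetical, and the sketch only illustrates the idea, saying nothing about FileLocator Pro's internals.

```c
#include <regex.h>
#include <stddef.h>

/* Hypothetical helper: copy into `out` every entry of `names` that matches
 * the POSIX extended regular expression `pattern`, ignoring case.
 * `out` must have room for `count` pointers. Returns the number of matches,
 * or -1 if the pattern fails to compile. Passing a previous `out` array
 * back in as `names` narrows an earlier search. */
int filter_names(const char *const *names, size_t count,
                 const char *pattern, const char **out)
{
    regex_t re;
    if (regcomp(&re, pattern, REG_EXTENDED | REG_ICASE | REG_NOSUB) != 0)
        return -1;

    int hits = 0;
    for (size_t i = 0; i < count; i++) {
        /* regexec() returns 0 when the name matches the pattern */
        if (regexec(&re, names[i], 0, NULL, 0) == 0)
            out[hits++] = names[i];
    }

    regfree(&re);
    return hits;
}
```

Feeding the output array back in as the input gives the "search within a previous search's results" step: filter on `stack` first, for example, then run `null|mpu` over just those hits.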

|||

| What I'm Reading | |||

The Perfect Process Storm. An interesting take on merging different processes, and the importance of metrics.

Hackers have gotten into industrial HVAC systems. Security isn't easy, but some of these vulnerabilities are ridiculous.

Insourcing: jobs are coming back from overseas.

Intel's CTO thinks Moore's Law will hold for at least 8 to 10 years.

Will robots destroy the working class? I've been predicting this for quite some time.

A billion here, a billion there, pretty soon you're talking a failed software program. |

|||

| Analog Discovery | |||

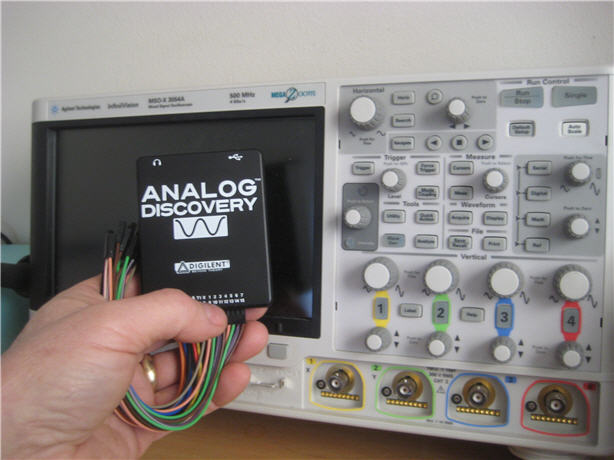

In Muse 232 Steve Fairhead mentioned Digilent's Analog Discovery USB oscilloscope. The folks there kindly sent me one and I've been playing with it. The claimed specs are impressive: 100 MSPS, dual channels with 14-bit A/D converters, for $199 ($99 for students). It's also a logic analyzer with 16 digital inputs. But there's more. The product includes two arbitrary-waveform generators and 16 digital I/Os that can act as a pattern generator.

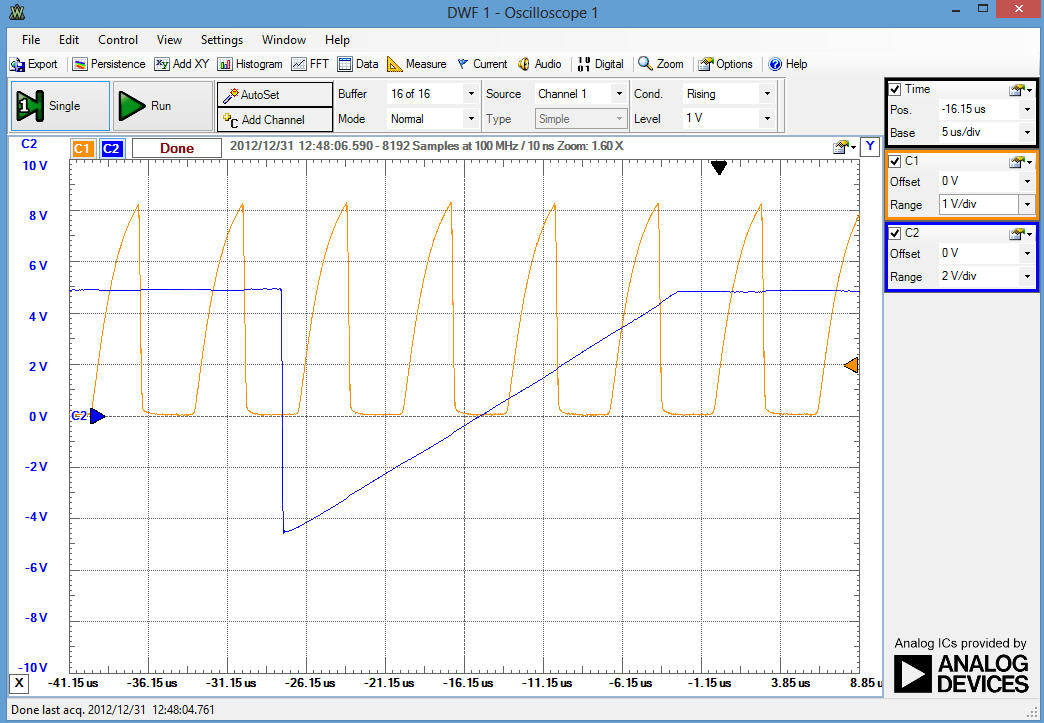

The 100 MSPS rating really got my attention. But on closer examination the analog bandwidth is 5 MHz, so the sample rate is far higher than needed to avoid Nyquist problems. 5 MHz is typical for these low-cost USB oscilloscopes, and the "probes" (just wires from a header) would never support faster signals.

The UI is a program called "Waveforms" which seems to be a generic driver for many of Digilent's products. A launcher screen fires off instrument-specific applications, like one for the scope and another for the waveform generator. All of the instrument applications are quite intuitive and easy to use. That said, and perhaps this is partly due to a long life spent using conventional bench test equipment, I find clicking around to configure a scope setting a poor substitute for twisting a knob or pressing a button. But that's part of the tradeoff with all USB instruments.
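A quick check of the Nyquist remark about the 100 MSPS sample rate and 5 MHz bandwidth, idealizing the rated bandwidth as the highest frequency f_max that reaches the A/D converter and writing f_s for the sample rate:

```latex
\begin{aligned}
f_{s,\min} &= 2 f_{\max} = 2 \times 5\ \text{MHz} = 10\ \text{MSPS} \\
\frac{f_s}{f_{s,\min}} &= \frac{100\ \text{MSPS}}{10\ \text{MSPS}} = 10 \\
\frac{f_s}{f_{\max}} &= \frac{100\ \text{MSPS}}{5\ \text{MHz}} = 20\ \text{samples per cycle}
\end{aligned}
```

So the converter runs about ten times faster than the Nyquist minimum. Real front ends roll off gradually rather than stopping dead at 5 MHz, so this is a best-case view.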

On the other hand, Waveforms properly shapes the scope display to fill the application's window, no matter how big you make it. So it's easy to get an enormous display. And if you want a tiny window it cleverly drops the controls and other information in order to keep as much scope data displayed as possible. With a 16k buffer for each channel the scope can collect far more information than it can display. Navigating within the data is easy: just drag the horizontal or vertical axis.

The max sweep rate is 10 ns/div, which places datapoints a full division apart. Though there is a dot-only mode, if you tell the system to connect the dots the software does some sort of tricky curve fit. The result is a beautiful curve. Analog bandwidth is rated at 5 MHz. I found that the displayed amplitude was within 5% of the correct value all the way to 9 MHz. At 10 MHz it's off by 10% and rapidly gets worse. But that's a lot better than the spec.

One thing I like about my Agilent MSO-3054A mixed-signal scope is the huge number of automatic measurements it can make. The Analog Discovery's set is quite rich as well; I counted 38. Measurements are calculations done on the scope data, including obvious things like peak-to-peak voltage and frequency, as well as overshoot and rise/fall time. It includes quite a range of triggering modes, including the ability to start the sweep on a pulse's width. And there's an interesting hysteresis filter as well.

The device is a mixed-signal scope in a novel way. I couldn't figure out how to put digital inputs on the scope screen (as a normal MSO does), but an alternative is to open both the logic analyzer application and the scope, and then put the windows next to each other. Like any MSO, the unit will cross trigger.

At first I was disappointed with its responsiveness. It turns out there's an "acquisition delay" setting in the options menu; set that to its lowest value and there is no noticeable delay in updates when the signal changes.

Then there's the audio view. The help file says "The audio view can play the data as sound." Pretty cool! The idea of multi-sensory troubleshooting appeals to me. But I could not get it to work. The documentation is silent on this; I suspect one has to plug headphones into a jack on the unit, but no such connector is documented.

An FFT mode is included which works pretty well, though I found the controls awkward and unfamiliar. If you've ever used a spectrum analyzer you'll be disappointed: none of these scopes has the RF front end needed to get decent FFT results. Of course, a real spectrum analyzer can rival a luxury car in cost.

The digital pattern generator can crank out signals up to 50 MHz, and it will even support custom patterns loaded from a .CSV file. It's very versatile and works well. But the fly leads that serve as connectors really aren't up to the task of transmitting data at that rate: a 50 MHz square wave looked very much like a sine wave on my Agilent.

The analog waveform generator is limited to 20 kHz. It will create sine, ramp, triangle and custom waveforms. Each can be tuned in various ways: phase, amplitude, frequency, offset and the like.

There are a few things I didn't like. The probes, as mentioned, are very weak. And though each is a different color, it would be nice if they followed a standard, like the resistor color code. The autoset feature (which sets up "optimum" scope settings) is very slow (7 seconds in my setup) and results in settings I didn't care for. The latter is true, though, with every autoset I've ever used. Helpful tips pop up when mousing around. Too much so; they get in the way of reading the measurements.

Both scope inputs are differential, which is a very useful feature. If all you need is single-ended, be sure to ground the negative input. My test rig had a 20 kohm impedance, and with the negative input dangling the actual signal got mauled. I have no idea why.

The documentation is provided as a help file, and is incomplete.

The bottom line is that for $199, and especially at $99 for students, this is a great product. You're not going to do ARM development with it, but it's decent for a lot of analog work and for dealing with slow microcontrollers. The pattern and waveform generators are a real boon for running experiments. |

|||

| Zeal and the Developer | |||

A recent blog entry by Chip Overclock caught my attention. He talks about young folks selecting university majors. Some do it for money. Others for their zeal. His advice to aspiring matriculators:

To be happy in any profession, you have to be successful at it. To be successful, you have to be proficient at it. To be proficient at it, you have to have spent thousands of hours practicing at it, no matter what your natural skill at it may be. You have to be passionate about it, otherwise you'll never spend enough time at practice. You have to love it so much, you can't imagine not doing it.

That's a pretty good basis for selecting a career. My daughter will graduate this spring with a degree in dance (and a minor in something vaguely more marketable). We struggled with her choice at first. But dance has been her passion since she was four. How could we not be supportive? Few of her high school friends had any fervor for anything other than their cell phones; we had to admire her dedication.

A wise friend taught me that one needs two things from a career: money and fun. Either one alone is not enough. For me, engineering has always had those twin attributes: enough money to make a decent living, and tons of fun. |

|||

| The End of 8/16 Bits? | |||

Dave Kellogg has been thinking that the collapse in the price of 32-bit microcontrollers will kill off smaller processors:

There are several considerations that seem to be left out of the typical "will 8-bit/16-bit CPUs die" discussion.

First, there is increased coding productivity with 32-bit processors. I've noticed that a lot of the 16-bit C code I write would be simpler and more robust if I could always use 32-bit math without concern for the extra overhead. For instance, depending on my numeric scaling, I often need to consider whether a 16-bit add might overflow, particularly for intermediate results. But when using a 32-bit processor, this entire class of problems can (mostly) be ignored. With 32 bits, there are fewer places where overflow can occur, and hence fewer opportunities for bugs. This results in less time to write the code, as well as less time to test (and debug and fix).

For large programs (more than 64 KB) without a 32-bit address space, the normal solution is some segmentation or paging scheme. But the extra complication in linker scripts, inter-page calls, etc. costs a lot of additional effort that is not directed at solving the underlying embedded problem. This costs even more engineering labor dollars, and can run into additional man-weeks of otherwise avoidable effort (which hurts time-to-market).

Although not inherently part of the 8-bit vs 32-bit comparison, debugging support must also be considered. Newer 32-bit MPUs nearly always have on-chip debug capabilities (breakpoints, trace, etc.). Older 8-bit parts rarely have the same level of debug capability. So debugging on an 8-bit or 16-bit processor often takes much longer, due to lack of visibility into the CPU.

Assume that the burdened cost of a software engineer is $100/hour. Suppose that going to 32 bits would reduce labor costs by 3%, i.e., save 3 days ($2,400) on a 3-month project. Then, assuming a 32-bit cost penalty of 10 cents (which I suspect is too high; see the next point), the 32-bit part is cost effective if the lifetime volume is 24,000 units or less ($2,400 / $0.10).

Second, the relative cost penalty of 32 bits is decreasing. Today we have 50-cent 32-bit MPUs, so the cost penalty of using a 32-bit instead of an 8-bit or 16-bit processor cannot exceed 50 cents. Considering the relatively fixed costs of packaging, distribution, etc., I doubt the actual cost penalty for 32 bits could possibly be more than, say, 30 cents. In reality, I suspect that even a 10-cent penalty is too high. This makes sense when you consider how little of an MPU's die is actually dedicated to the CPU. Yes, an 8-bit CPU may take only 25% of the space of a 32-bit CPU. But if the 32-bit CPU is only 10% of the overall chip, then the potential reduction from using an 8-bit CPU instead (with the same memories and peripherals) is only 75% × 10% = 7.5%, and 7.5% of a 50-cent part is less than 4 cents. As memories grow and peripherals get more powerful, the cost penalty of a 32-bit CPU will continue to shrink.

Third, there is the cost of obsolescence. Industrial embedded products are often in production for a decade or more. The last thing I do is pick an older part for a new design. Instead, I pick a part that is no more than a year or two into its life cycle. It is a pure waste of a company's resources to do product refreshes needed only because of obsolescence. Generally, most newer parts are in the 32-bit world. They are also built using more recent, smaller process geometries, and hence are more cost effective than older parts.

Finally, there is the power consideration. In recent years and into the foreseeable future, power consumption is and will be king. This is true for both a smart phone and a cheap musical greeting card. Consider a battery-powered application where the MPU is sleeping the vast majority of the time. The power consumed will be roughly proportional to the amount of time that the CPU is actually awake. But when the CPU is awake, its associated memories, clocks, etc. must also be powered. This is true for both an 8-bit and a 32-bit CPU. So the fractional increase in power consumed by a 32-bit processor (vs an 8-bit) is moderated by the power overhead of the supporting circuits and memories. So even if the 32-bit core is four times the size of an 8-bit core, the part as a whole is less than four times as power hungry as an 8-biter.

Compared to an 8-biter, a 32-bit CPU typically requires shorter instruction sequences (sometimes much shorter) to implement a given block of C code. This is particularly true when the application requires 32-bit math. So a 32-bit CPU typically needs less energy per computation.

As a software engineer with today's choices, I have a very hard time justifying an 8-bit or 16-bit part in any new design. 32-bit is simply less painful, and is often cheaper over the overall life cycle, too.

It does seem, though, that with time Moore's Law will drive 32-bit prices down to somewhere just above the cost of the packaging and the ARM tax. The only difference from an 8/16-bitter will be that tax. This delta will be important only for very high volume products. |
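Dave's point about intermediate overflow in 16-bit math is easy to demonstrate. The sketch below averages two hypothetical 16-bit ADC readings two ways; the explicit `uint16_t` cast in the narrow version makes a 32-bit host wrap the sum exactly as a compiler with 16-bit `int` would. The function names are illustrative only.

```c
#include <stdint.h>

/* Average two 16-bit readings with a 16-bit intermediate. The cast
 * truncates the sum to 16 bits (as a 16-bit-int target would), so the
 * result is wrong whenever a + b exceeds 65535. */
uint16_t average_u16_narrow(uint16_t a, uint16_t b)
{
    uint16_t sum = (uint16_t)(a + b);   /* intermediate silently wraps */
    return (uint16_t)(sum / 2u);
}

/* Same calculation with a 32-bit intermediate: the sum cannot overflow. */
uint16_t average_u16_wide(uint16_t a, uint16_t b)
{
    uint32_t sum = (uint32_t)a + b;
    return (uint16_t)(sum / 2u);
}
```

With a = 40000 and b = 30000 the sum is 70000, which wraps to 4464 in 16 bits, so the narrow version returns 2232 instead of 35000. On a 32-bit CPU the wide version costs essentially nothing extra; on an 8- or 16-bit part it costs the multi-byte arithmetic Dave would rather not think about.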

|||

| Yet More on Stacks | |||

Tom Evans has some more thoughts on stacks:

Processors such as the MPC860 have a debug unit on board. Its program and data breakpoint registers are most often used by an attached debug pod, but are also available to the software when the pod isn't connected. I've programmed these so that one data breakpoint rendered the bottom 64k of memory as "no access", to trap null-pointer dereferences, including accesses to large structures through a null pointer. On top of that I arranged the code, fixed data, stack, variable data and BSS sections, with the second data breakpoint set so that everything below the stack is read only. That catches stack underruns and anything that writes to the code or fixed data. |
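Tom's two-breakpoint arrangement amounts to a simple address check. The sketch below models that policy on the host; it does not program any MPC860 register, and `mem_layout`, `access_allowed()`, and the example addresses are hypothetical. It assumes the sections sit in ascending address order as listed, with code and fixed data below the stack, which is what lets "everything below the stack is read only" protect them.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of the two-breakpoint policy; it touches no hardware. */
#define NULL_TRAP_LIMIT 0x00010000u   /* bottom 64k: no access at all */

typedef struct {
    uint32_t stack_low;   /* lowest address of the stack section */
} mem_layout;

/* Returns true if an access at addr would proceed, false if one of the
 * two data breakpoints would trap it. Assumes code and fixed data sit
 * below the stack, with variable data and BSS above it. */
bool access_allowed(const mem_layout *m, uint32_t addr, bool is_write)
{
    if (addr < NULL_TRAP_LIMIT)
        return false;              /* breakpoint 1: null-pointer region */
    if (is_write && addr < m->stack_low)
        return false;              /* breakpoint 2: read-only below the stack */
    return true;
}
```

A read through a null structure pointer at offset 0x1C, or any write just below `stack_low`, returns false here; on the real part the corresponding breakpoint would trap instead.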

|||

| Jobs! | |||

Let me know if you’re hiring embedded engineers. No recruiters please, and I reserve the right to edit ads to fit the format and intent of this newsletter. Please keep it to 100 words.

|

|||

| Joke For The Week | |||

Note: These jokes are archived at www.ganssle.com/jokes.htm. |

|||

| Advertise With Us | |||

Advertise in The Embedded Muse! Over 23,000 embedded developers get this twice-monthly publication. |

|||

| About The Embedded Muse | |||

The Embedded Muse is Jack Ganssle's newsletter. Send complaints, comments, and contributions to me at jack@ganssle.com. The Embedded Muse is supported by The Ganssle Group, whose mission is to help embedded folks get better products to market faster. |

The Analog Discovery compared to a bench scope.


Waveforms' scope user interface